When we finished Broken Sword, the managing director of Virgin [Interactive] called me into his office and showed me a game from Argonaut [Software] called Creature Shock. He said, “These are the games you should be writing, not adventure games. These are the games. This is the future.”

— Charles Cecil, co-founder of Revolution Software

Broken Sword, Revolution Software’s third point-and-click adventure game, was released for personal computers in September of 1996. Three months later, it arrived on the Sony PlayStation console, so that it could be enjoyed on television as well as monitor screens. And therein lies a tale in itself.

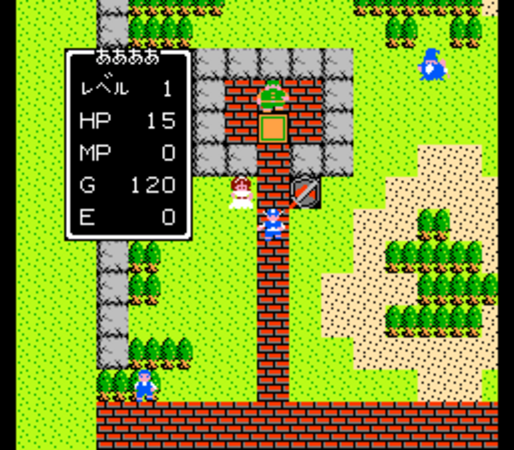

Prior to this point, puzzle-based adventure games of the traditional stripe had had a checkered career on the consoles, for reasons as much technical as cultural. They were a difficult fit with the Nintendo Entertainment System (NES), the console du jour in the United States during the latter 1980s, thanks to the small capacity of the cartridges that machine used to host its games, its lack of means for easily storing state so that one could return to a game where one had left off after spending time away from the television screen, and the handheld controllers it used that were so very different from a mouse, joystick, and/or keyboard. Still, these challenges didn’t stop some enterprising studios from making a go of it, tempted as they were by the huge installed base of Nintendo consoles. Over the course of 1988 and 1989, ICOM Simulations managed to port to the NES Deja Vu, Uninvited, and Shadowgate; the last in particular really took off there, doing so well that it is better remembered as a console than a computer game today. In 1990, LucasArts (which was actually still known as Lucasfilm Games at the time) did the same with their early adventure Maniac Mansion; this port too was surprisingly playable, if also rather hilariously Bowdlerized to conform to Nintendo’s infamously strict censorship regime.

But as the 1990s began, “multimedia” was becoming the watchword of adventure makers on computers. By 1993, the era of the multimedia “interactive movie” was in full swing, with games shipping on CD (often multiple CDs) and often boasting not just voice acting but canned video clips of real actors. Such games were a challenge of a whole different order even for the latest generation of 16-bit consoles. Sierra On-Line and several other companies tried mightily to cram their adventure games onto the Sega Genesis (known as the Mega Drive in Japan and Europe), a popular console for which one could purchase a CD drive as an add-on product. In the end, though, they gave it up as technically impossible; the Genesis’s color palette and memory space were just too tiny, its processor just too slow.

But then, along came the Sony PlayStation.

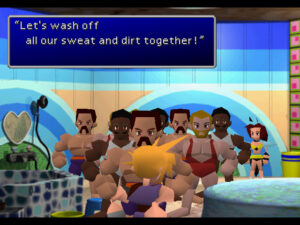

For all that the usual focus of these histories is computer games, I’ve already felt compelled to write at some length about the PlayStation here and there. As I’ve written before, I consider it the third socially revolutionary games console, after the Atari VCS and the Nintendo Entertainment System. Its claim to that status involves both culture and pure technology. Sony marketed the PlayStation to a new demographic: to hip young adults rather than the children and adolescents that Nintendo and its arch-rival Sega had targeted. Meanwhile the PlayStation hardware, with its built-in CD drive, its 32-bit processor, its 2 MB of main memory and 1 MB of graphics memory, its audiophile-quality sound system, and its handy memory cards for saving up to 128 KB of state at a time, made ambitious long-form gaming experiences easier than ever before to realize on a console. The two factors in combination opened a door to whole genres of games on the PlayStation that had heretofore been all but exclusive to personal computers. Its early years brought a surprising number of these computer ports, such as real-time strategy games like Command & Conquer and turn-based strategy games like X-COM. And we can also add to that list adventure games like Broken Sword.

Their existence was largely thanks to the evangelizing efforts of Sony’s own new PlayStation division, which seldom placed a foot wrong during these salad days. Unlike Nintendo and Sega, who seemed to see computer and console games as existing in separate universes, Sony was eager to bridge the gap between the two, eager to bring a wider variety of games to the PlayStation. And they were equally eager to push their console in Europe, where Nintendo had barely been a presence at all to this point and which even Sega had always treated as a distant third in importance to Japan and North America.

Thus, one day while the Broken Sword project was still in its first year, Revolution Software got a call from Phil Harrison, an old-timer in British games who knew everyone and had done a bit of everything. “Look, I’m working for Sony now and there’s this new console going to be produced called the PlayStation,” he told Charles Cecil, the co-founder and tireless heart and soul of Revolution. “Are you interested in having a look?”

Cecil was. He was indeed.

Thoroughly impressed by the hardware and marketing plans Harrison had shown him, Cecil went to Revolution’s publisher Virgin Interactive to discuss making a version of Broken Sword for the PlayStation as well. “That’s crazy, that’s not going to work at all,” was Virgin’s response, according to Cecil. Convinced the idea was a non-starter, both technically and commercially, they told him he was free to shop a PlayStation Broken Sword elsewhere for all they cared. So, Cecil returned to his friend Phil Harrison, who brokered a deal for Sony themselves to publish a PlayStation version in Europe as a sort of proof of concept. Revolution worked on the port on the side, and on their own dime, while they finished the computer game. Sony then shipped this PlayStation version in December of 1996.

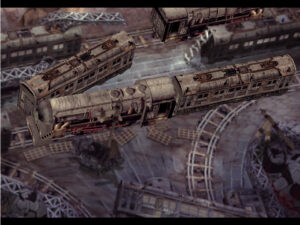

To be sure, it was a compromised creation. Although the PlayStation was a fairly impressive piece of kit by console standards, it left much to be desired when compared to even a mid-range gaming computer. The lovely graphics of the original had to be downgraded to the PlayStation’s lower resolution, even as the console’s relatively slow CD drive and lack of a hard drive for storing frequently accessed data made them painfully sluggish to appear on the television screen; one spent more time waiting for the animated cut scenes to load than watching them, their dramatic impact sometimes being squandered by multiple loading breaks within a scene. Even the voiced dialog could take unnervingly long to unspool from disc. Then, too, pointing and clicking was nowhere near as effortless using a game controller as it was with a mouse. (Sony actually did sell a mouse as an optional peripheral, but few people bought one.) Perhaps most worrisome of all, though, was the nature of the game itself. How would PlayStation gamers react to a cerebral, puzzle-oriented and narrative-driven experience like this?

The answer proved to be: better than some people, most notably those at Virgin, might have expected. Broken Sword’s Art Deco classicism may have looked a bit out of place in the lurid, anime-bedecked pages of the big PlayStation magazines, but they and their readers generally treated it kindly if somewhat gingerly. Broken Sword sold 400,000 copies on the PlayStation in Europe. Granted, these were not huge numbers in the grand scheme of things. On a console that would eventually sell more than 100 million units, it was hard to find a game that didn’t sell well into the six if not seven (or occasionally eight) figures. By Revolution’s modest standards, however, the PlayStation port made all the difference in the world, selling as it did at least three times as many copies as the computer version despite its ample reasons for shirking side-by-side comparisons. Its performance in Europe was even good enough to convince the American publisher THQ to belatedly pick it up for distribution in the United States as well, where it shifted 100,000 or so more copies. “The PlayStation was good for us,” understates Charles Cecil today.

It was a godsend not least because Revolution’s future as a maker of adventure games for computers was looking more and more doubtful. Multinational publishers like Virgin tended to take the American market as their bellwether, and this did not bode well for Revolution, given that Broken Sword had under-performed there in relation to its European sales. To be sure, there were proximate causes for this that Revolution could point to: Virgin’s American arm, never all that enthused about the game, had given it only limited marketing and saddled it with the terrible alternative title of Circle of Blood, making it sound more like another drop in the ocean of hyper-violent DOOM clones than a cerebral exercise in story-driven puzzle-solving. At the same time, though, it was hard to deny that the American adventure market in general was going soggy in the middle; 1996 had produced no million-plus-selling mega-hit in the genre to stand up alongside 1995’s Phantasmagoria, 1994’s Myst, or 1993’s The 7th Guest. Was Revolution’s sales stronghold of Europe soon to follow the industry’s bellwether? Virgin suspected it was.

So, despite having made three adventure games in a row for Virgin that had come out in the black on the global bottom line, Revolution had to lobby hard for the chance to make a fourth one. “It was frustrating for us,” says Revolution programmer Tony Warriner, “because we were producing good games that reviewed and sold well, but we had to beg for every penny of development cash. There was a mentality within publishing that said you were better off throwing money around randomly, and maybe scoring a surprise big hit, instead of backing steady but profitable games like Broken Sword. But this sums up the problem adventures have always had: they sell, but not enough to turn the publishers on.”

We might quibble with the “always” in Warriner’s statement; there was a time, lasting from the dawn of the industry through the first half of the 1990s, when adventures were consistently among the biggest-selling titles of all on computers. But this was not the case later on. Adventure games became mid-tier niche products from the second half of the 1990s on, capable of selling in consistent but not huge numbers, capable of raking in modest profits but not transformative ones. Similar middling categories had long existed in other mass-media industries, from film to television, books to music, all of which were mature enough to profitably cater to their niche customers in addition to the heart of the mainstream. The computer-games industry, however, was less adept at doing so.

The problem there boiled down to physical shelf space. The average games shop had a couple of orders of magnitude fewer titles on its shelves at any given time than the average book or record store. Given how scarce retail space was, nobody (not the distributors, not the publishers, certainly not the retailers themselves) was overly enthusiastic about filling it with product that wasn’t in one of the two hottest genres in gaming at the time: the first-person shooter and the real-time strategy game. This tunnel vision had a profound effect on the games that were made and sold during the years just before and after the millennium, until the slow rise of digital distribution began to open fresh sales channels for more niche titles once again.

In light of this situation, it’s perhaps more remarkable how many computer games made between 1995 and 2005 were not first-person shooters or real-time strategy games than how many were. More dedicated, passionate developers than you might expect found ways to make their cases to the publishers and get their games funded in spite of the remorseless logic of the extant distribution systems.

Revolution Software found a way to be among this group, at least for a while — but Virgin’s acquiescence to a Broken Sword II didn’t come easy. Revolution had to agree to make the sequel in just one year, as compared to the two and a half years they had spent on its predecessor, and for a cost of just £500,000 rather than £1 million. The finished game inevitably reflects the straitened circumstances of its birth. But that isn’t to say that it’s a bad game. Far from it.

Broken Sword II: The Smoking Mirror kicks off six months after the conclusion of the first game. American-in-Paris George Stobbart, the star of both that game and this one, has just returned to France after dealing with the death of his father Stateside. There he’s reunited with Nico Collard, the fetching Parisian reporter who helped him last time around and whom George has a definite hankering for, to the extent of referring to her as his “girlfriend”; Nico is more ambiguous about the nature of their relationship. At any rate, an ornately carved and painted stone, apparently Mayan in origin, has come into her possession, and she has asked George to accompany her to the home of an archaeologist who might be able to tell them something about it. Unfortunately, they’re ambushed by thugs as soon as they arrive; Nico is kidnapped, while George is left tied to a chair in a room whose only other inhabitants are a giant poisonous spider and a rapidly spreading fire.

If this game doesn’t kick off with the literal bang of an exploding bomb like last time, it’s close enough. “I believe that a videogame must declare the inciting incident immediately so the player is clear on what their character needs to do and, equally importantly, why,” says Charles Cecil.

With your help, George will escape from his predicament and track down and rescue Nico before she can be spirited out of the country, even as he also retrieves the Mayan stone from the dodgy acquaintance in whose safekeeping she left it and traces their attackers back to Central America. And so George and Nico set off together across the ocean to sun-kissed climes, to unravel another ancient prophecy and prevent the end of the world as we know it for the second time in less than a year.

Broken Sword II betrays its rushed development cycle most obviously in its central conspiracy. For all that the first game’s cabal of Knights Templar was bonkers on the face of it, it was grounded in real history and in a real, albeit equally bonkers, classic book of pseudo-history, The Holy Blood and the Holy Grail. Mayans, on the other hand, are the most generic adventure-game movers and shakers this side of Atlanteans. “I was not as interested in the Mayans, if I’m truthful,” admits Charles Cecil. “Clearly human sacrifices and so on are interesting, but they were not on the same level of passion for me as the Knights Templar.”

Lacking the fascination of uncovering a well-thought-through historical mystery, Broken Sword II must rely on its set-piece vignettes to keep its player engaged. Thankfully, these are mostly still strong. Nico eventually gets to stop being the damsel in distress, becoming instead a driving force in the plot in her own right, so much so that you the player control her rather than George for a quarter or so of the game; this is arguably the only place where the second game actually improves on the first, which left Nico sitting passively in her flat waiting for George to call and collect hints from her most of the time. Needless to say, the sexual tension between George and Nico doesn’t get resolved, the writers having learned from television shows like Moonlighting and Northern Exposure that the audience’s interest tends to dissipate as soon as “Will they or won’t they” becomes “They will!” “We could very easily have had them having sex,” says Cecil, “but that would have ruined the relationship between these two people.”

The writing remains consistently strong in the small moments, full of sly humor and trenchant observations. Some fondly remembered supporting characters return, such as Duane and Pearl, the two lovably ugly American tourists you met in Syria last time around, who’ve now opted to take a jungle holiday, just in time to meet George and Nico once again. (Isn’t coincidence wonderful?)

And the game is never less than fair, with occasional deaths to contend with but no dead ends. This manifestation of respect for their players has marked Revolution’s work since Beneath a Steel Sky; they can only be applauded for it, given how many bigger, better-funded studios got this absolutely critical aspect of their craft so very wrong back in the day. The puzzles themselves are pitched perfectly in difficulty for the kind of game this is, being enough to make you stop and think from time to time but never enough to stop you in your tracks.

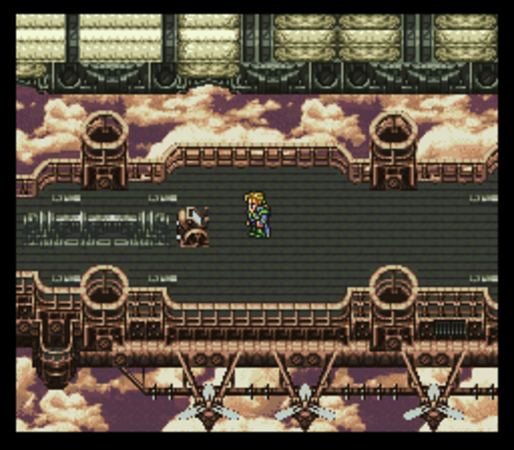

In the end, then, Broken Sword II suffers only by comparison with Broken Sword I, which does everything it does well just that little bit better. The backgrounds and animation here, while still among the best that the 1990s adventure scene ever produced, aren’t quite as lush as what we saw last time. The series’s Art Deco and Tintin-inspired aesthetic sensibility, seen in no other adventure games of the time outside of the equally sumptuous Last Express, loses some focus when we get to Central America and the Caribbean. Here the game takes on an oddly LucasArts-like quality, what with the steel-drum background music and all the sandy beaches and dark jungles and even a monkey or two flitting around. Everywhere you look, the seams show just a little more than they did last time; the original voice of Nico, for example, has been replaced by that of another actress, making the opening moments of the second game a jarring experience for those who played the first. (Poor Nico would continue to get a new voice with each subsequent game in the series. “I’ve never had a bad Nico, but I’ve never had one I’ve been happy with,” says Cecil.)

But, again, we’re holding Broken Sword II up against some very stiff competition indeed; the first game is a beautifully polished production by any standard, one of the crown jewels of 1990s adventuring. If the sequel doesn’t reach those same heady heights, it’s never less than witty and enjoyable. Suffice it to say that Broken Sword II is a game well worth playing today if you haven’t done so already.

It did not, however, sell even as well as its predecessor when it shipped for computers in November of 1997, serving more to justify than disprove Virgin’s reservations about making it in the first place. In the United States, it was released without its Roman numeral as simply Broken Sword: The Smoking Mirror, since that country had never seen a Broken Sword I. Thus even those Americans who had bought and enjoyed Circle of Blood had no ready way of knowing that this game was a sequel to that one. (The names were ironic not least in that the American game called Circle of Blood really did contain a broken sword, while the American game called Broken Sword did not.)

That said, in Europe too, where the game had no such excuses to rely upon, the sales numbers it put up were less satisfactory than before. A PlayStation version was released there in early 1998, but this too sold somewhat less than the first game, whose relative success in the face of its technical infelicities had perchance owed much to the novelty of its genre on the console. It was not so novel anymore: a number of other studios were also now experimenting with computer-style adventure games on the PlayStation, to mixed commercial results.

With Virgin having no interest in a Broken Sword III or much of anything else from Revolution, Charles Cecil negotiated his way out of the multi-game contract the two companies had signed. “The good and the great decided adventures [had] had their day,” he says. Broken Sword went on the shelf, permanently as far as anyone knew, leaving George and Nico in a lovelorn limbo while Revolution retooled and refocused. Their next game would still be an adventure at heart, but it would sport a new interface alongside action elements that were intended to make it a better fit on a console. For better or for worse, it seemed that the studio’s hopes for the future must lie more with the PlayStation than with computers.

Revolution Software was not alone in this; similar calculations were being made all over the industry. Thanks to the fresh technology and fresh ideas of the PlayStation, said industry was entering a new period of synergy and cross-pollination, one destined to change the natures of computer and console games equally. Which means that, for all that this site has always been intended to be a history of computer rather than console gaming, the PlayStation will remain an inescapable presence even here, lurking constantly in the background as both a promise and a threat.

Where to Get It: Broken Sword II: The Smoking Mirror is available as a digital download at GOG.com.


Sources: the book Grand Thieves and Tomb Raiders: How British Video Games Conquered the World by Magnus Anderson and Rebecca Levene; Retro Gamer 6, 31, 63, 146, and 148; GameFan of February 1998; PlayStation Magazine of February 1998; The Telegraph of January 4, 2011. Online sources include Charles Cecil’s interviews with Anthony Lacey of Dining with Strangers, John Walker of Rock Paper Shotgun, Marty Mulrooney of Alternative Magazine Online, and Peter Rootham-Smith of Game Boomers.