All of us had become disappointed with computer RPGs because they were going in the opposite direction of where we thought they should be going. They were becoming story- and stat-laden, really appealing to a super-small niche of super RPG geeks — which we were in a way, but that wasn’t really our style.

So, when [David] Brevik mentioned these roguelike games, it was kind of a natural. “Yeah, let’s take that cool, addictive structure and modernize it. Let’s strip away the stuff that’s turning off a lot of game fans from RPGs.”

— Max Schaefer of Blizzard North

A palpable sense of ennui dogged the Consumer Electronics Shows of 1994. The venerable semiannual expo where such landmark gaming hardware as the Atari VCS, the Commodore 64 and Amiga, the Nintendo Entertainment System and Super Nintendo Entertainment System, and the Sega Genesis had been seen for the first time seemed somehow past its sell-by date now. Attendance at the Summer CES in particular was down in a big way, so much so that the organizers would move the event out of its long-standing home in Chicago’s McCormick Place the following year and turn it into a traveling exhibition in the hope of drumming up some much-needed excitement. In the meantime, the makers of gaming software had an especially underwhelming time of it in Chicago that year: as usual, they were treated as second-class citizens by the organizers, relegated to the hall’s basement so that the choicer spaces were kept free for cutting-edge toasters, refrigerators, and microwave ovens.

Among the games people who were having the worst time of it of all were the folks behind a tiny San Mateo, California, studio called Condor, Incorporated. David Brevik and his co-founders, the brothers Max and Erich Schaefer, were ostensibly at the show to demonstrate their very first finished original game, a Genesis title called Justice League: Task Force. But they knew the game was no great shakes. They had made exactly what their publisher, the financially troubled Japanese giant Sunsoft, had ordered them to make in rather pedantic detail: a blatant clone of yesteryear’s massive hit Street Fighter II, with DC Comics superheroes inserted in place of its inspiration’s pugilists. They felt it was competently executed, but knew as well as anyone that it was no more than a quickie placeholder product for a five-year-old console that was soon due to be superseded by the next-generation Sega Saturn.

Their ulterior motive for being at CES was something else entirely. Brevik had an idea for a computer game called Diablo, which he had been slowly expanding upon ever since he had lived with his family at the foot of the California mountain of that name back in the mid-1980s. Now, he felt its time had come; he desperately wanted to interest a publisher in it. But every executive he talked to at the show started shaking his head as soon as he saw the first line of the pitch document, stating that it was “a proposal for a role-playing game.” For CRPGs were dead and buried according to the industry’s conventional wisdom, having nothing to offer in an era when multimedia flash and 3D mayhem reigned supreme. They were quaint at best, deadly boring at worst, as their recent sales figures reflected.

Thoroughly disheartened by his proposal’s reception, Brevik duly turned up with the Schaefer brothers at the appointed time to show Justice League to the assembled press. And here they all got a shock. They learned only minutes before taking the stage that Sunsoft had actually arranged to make a second version of the game for the Super Nintendo, sending the same design brief to another little studio, Blizzard Entertainment of Costa Mesa, California. Both development teams could immediately see that the other had done a pretty solid, professional job with a less than inspiring project. Indeed, they were struck by how similar the two end results were to one another.

They soon learned that they had much more in common. Blizzard too had been founded on a shoestring by three games-obsessed kids just out of university, in this case by the names of Allen Adham, Mike Morhaime, and Frank Pearce. And they too had become all too familiar with workaday projects like Justice League, which they too saw as a way for their new, unproven studio to pay its dues on the way to bigger, better things to come. The big difference was that Blizzard was a few years older, and thus that much further along the road to becoming a marquee studio. They had recently been acquired by the educational-software giant Davidson & Associates, whose distributional pipeline they would be able to use to publish their own games under their own imprint. Now, they were hard at work finishing up the project that they hoped would change everything for them: a game for computers only called Warcraft. They took the Condor boys into a cramped back room and showed it to them. “I had no idea at that point that Warcraft would become an historically important game,” says Max Schaefer. “It just looked cool.” A relationship was forged. The Blizzard folks said they were just too busy to think about anything else just then, but they promised to listen to Condor’s pitch for Diablo once Warcraft was out the door.

They were true to their word. In January of 1995, with Warcraft on store shelves and selling well, everyone came together again in Blizzard’s conference room to talk about Diablo. No one in that room was unaware of the concerns that had caused publisher after publisher to walk away from the proposal; in fact, in many ways they shared them. CRPGs had glutted the market just a few years earlier, a bewildering procession of elves and dwarves and dragons. For the hardcore aficionados, all of the different games and series were (and still are) possessed of their own distinctive personalities and intricate subtleties, but it was hard for everybody else to keep Dungeons & Dragons separate from Dungeon Master, Might and Magic separate from The Magic Candle. I have a friend who likes to say that there are only two blues songs: “the fast one and the slow one.” Likewise, one might go so far as to say that for most gamers there were only two CRPGs, the first-person Wizardry style and the overhead Ultima style. As computers had gotten more capable, games of the former type had gotten ever more complex in terms of rules, while those of the latter type had threatened to collapse under the sheer weight of their lore and verbiage, which minuscule computer memories no longer restricted. Those sorts of things were not what the Condor guys were into at all. Sure, they had all played tabletop Dungeons & Dragons as kids, but world-building and storytelling hadn’t been their primary interest. “It was all about killing monsters and finding good stuff,” says Max Schaefer.

And so that was what Diablo was to be about as well. “As games today substitute gameplay with multimedia extravaganzas and strive toward needless scale and complexity,” read the pitch document, “we seek to reinvigorate the hack-and-slash, feel-good gaming audience. Emphasis will be on exploration, conflict, and character development.”

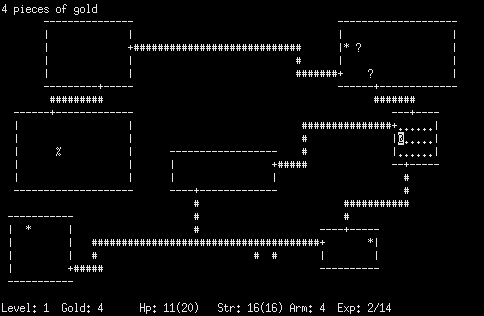

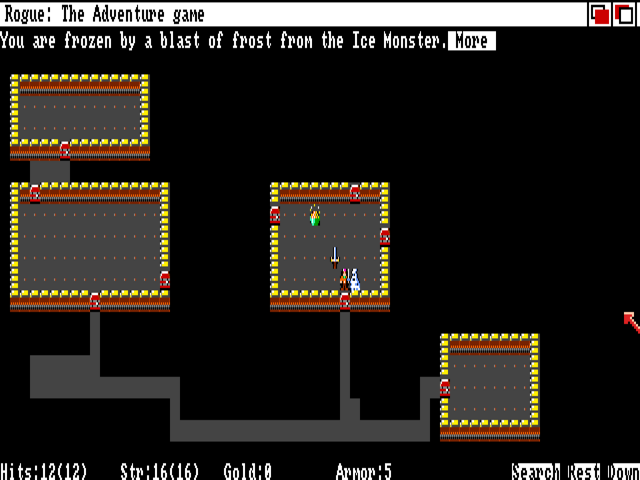

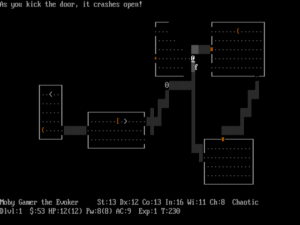

Diablo’s most direct influence by far was the roguelike games, which David Brevik had played for hundreds upon hundreds of hours while a student at university. From roguelikes it inherited its minimalist narrative — amounting to little more than “make it to the last level and kill the boss of bosses Diablo” — as well as randomized dungeons that would be new with every playthrough, along with the randomized “good stuff” they contained. Brevik’s favorite roguelike of all was Angband, which distinguished itself from the likes of the original Rogue and its spiritual successor NetHack by having a town to serve as the player’s base of operations for her expeditions into the nearby dungeon, resulting in a slightly more relaxed pacing and introducing an economic element. Diablo was to duplicate this structure exactly: “Forays into the dungeon will be broken up by trips to the town located above. In the town, a general store will provide standard equipment and repairs, and will also purchase extra equipment from the player. A temple will provide healing for injured and sick characters. Training and other facilities may also be available.”

In Brevik’s initial vision, Diablo was even to have roguelike perma-death: if the player’s character was killed, “that character will be erased completely from the hard drive, and the player must start over from scratch.” Combat would be turn-based like in a roguelike, but heavily influenced by the game’s secondary inspiration, Julian Gollop’s 1994 strategy classic X-COM; Diablo would use a similar interface and action-points system. If it strikes you as strange that a game that would later be so commonly dismissed as nothing more than a mindless, frantic click-fest could have two such cerebral inspirations as these… well, such are the paradoxes of game development.

At any rate, Blizzard was suitably impressed, and agreed to fund and publish the game described in the pitch document. But several of the Blizzard folks who were present at the meeting have since claimed that they were already thinking about a major change: to make Diablo run in real time. Not long after work began on the game in earnest down in San Mateo, Blizzard began slowly but relentlessly to apply pressure to Condor — more specifically, to David Brevik — to make the switch.

Brevik was appalled. There was a certain kind of moment, familiar to every roguelike player, that he considered essential to recreate in Diablo. It’s that moment when you’re down to your last few hit points and are staring down the maw of a mind flayer or a wyvern, knowing that it’s about to hit you and kill you on its next turn unless you do something really clever and/or get really lucky on your own last turn before it can do so. Do you pull out that potion that you have no idea what it does and drink it down, hoping against hope that it’s a Potion of Protection? Or do you take one last swipe at the monster with your sword, hoping it’s as close to death as you are? Or do you try to get away by running down that nearby staircase, hoping against hope that it misses with its last lunge against your vulnerable backside? Most of the time, of course, you choose wrong and/or don’t get lucky, and another character goes to the graveyard. But every once in a while, it works out, your character lives to fight another day, and you shout and dance around the room and rush to tell your friends about it. That dopamine release is what keeps people coming back to roguelikes again and again. Brevik was understandably loath to lose it.

But the slow drip, drip, drip from Blizzard continued, seeping even into Condor’s own ranks. Knowing this, Allen Adham made a suggestion to Brevik in or around May of 1995: Why not ask your own people? Why not take a vote on whether just to try real time? If it doesn’t work, you can always go back to turn-based.

It was too reasonable a suggestion to refuse. Brevik asked for a show of hands among his own people of those interested in exploring real time, and was dismayed to see almost every hand in the room go up. Acceding to the will of the majority, he retreated into his office to have a good-faith go at something he was sure would never fit with the game he wanted to make. The quicker it was demonstrated to everyone that real time wasn’t a practical possibility, he thought, the quicker they could all get back to more productive endeavors. What followed instead was the project’s kairos moment.

I can remember the moment like it was yesterday. I was sitting and I was coding the game, and I had a warrior with a sword, and there was a skeleton on the other side of the screen. I’d been working on this code to make characters move smoothly, doing a whole bunch of testing, and we’d talked about how the controls would work.

We wanted it to be visceral. Click and swing, click and swing. We wanted it to automatically happen: if you clicked on the monster, your character would go over there and swing.

I remember very vividly: I clicked on the monster, the guy walked over, and he smashed this skeleton, and it fell apart onto the ground.

The light from heaven shone through the office down onto the keyboard. I said, “Oh, my God, this is so amazing!” I knew it was not only the right decision, but that Diablo was just going to be massive. It was really the most defining moment of my career, as well as for that genre of gaming.

A new genre was born in that moment, and it was really quite incredible to be the person coding it and creating it. I was just there by myself coding it up. It was pretty incredible.

Diablo may have lost that suspended instant of supreme tension that Brevik had always seen as essential, but it had gained something else, something that would make it a different sort of game entirely. Kelly Johnson, an artist who worked on the game:

In a turn-based game, when you win, you say, “Cool, my plan worked. I took time, I deliberated, I made a plan, and it worked out.” But in a real-time [game], it’s, “Wow! I won!” It’s visceral. You’re in the moment.

Everyone at Condor, including Brevik, was soon marveling that they had ever imagined Diablo being anything other than a real-time game. Millions of players would eventually feel the same way, as the game’s real-time nature became the core of its very identity.

But before that could happen, Diablo had to be finished. In their excitement over not being rejected yet again, Condor had secured less than half a million dollars in funding from Blizzard, to support a team that numbered a dozen or more. By the beginning of 1996, that money was running out. The founders dipped deep into their personal bank accounts just to cover payroll, and their employees started racing one another to the bank on payday, knowing that the last checks deposited had a tendency to bounce. Meanwhile Blizzard was soaring. Just before Christmas of 1995, they had released Warcraft II, a refinement of its predecessor that blew up massively; it would sell 3 million copies before all was said and done.

The Schaefer brothers and David Brevik were stunned when their publisher came to them and asked whether they would be interested in being acquired; Blizzard was suddenly flush with cash, and the brain trust there was very, very excited about Diablo’s prospects, such that they wanted to have it all for themselves. For the people making Diablo, the unexpected offer was a lifeline materializing out of thin air in front of a drowning man. In March of 1996, Condor became Blizzard North.

It was Blizzard that had pushed the erstwhile Condor to make Diablo run in real time. Now, it would be Blizzard South that drove another core feature into being. The initial pitch document had included “two-player and multiplayer game sessions via modem or network.” Since actual work had begun on the game, however, that aspiration had been all but forgotten. Yet Blizzard South knew how important multiplayer could be for a game in this new era of widespread network connectivity. They knew that multiplayer deathmatches had made DOOM what it was, and they knew that, long after players had finished Warcraft II’s single-player campaign, it was multiplayer that kept them going there as well, turning the game into a veritable institution. They wanted all that for Diablo, so much so that they made their only significant technical intervention into its development, sending programmers up to San Mateo to apply their Warcraft II expertise to Diablo’s multiplayer mode.

For Blizzard had huge plans for multiplayer games in general. Everyone could sense that a large percentage of future gaming would take place between real people on the Internet, that the “LAN parties” of the current age were just a temporary stopgap. Yet gaming over long distances was still technically challenging for the user, even as sessions had to be pre-planned with buddies who had bought the same game you had; spontaneous, pick-up-and-play matches were impossible. Various third-party companies were experimenting with ways to change both of these things, but everything was in a nascent, febrile state. Having money to spend as they did, Blizzard decided to introduce a game hosting and matchmaking service for their customers, under the name (and the Internet URL) of Battle.net. And they decided to offer it to buyers of their games for the low, low price of free, on the logic that the boxed-game sales it would generate would easily pay for its upkeep. It was a revolutionary idea, one that would prove as important to Blizzard’s rise into gaming’s stratosphere as any of their individual titles, iconic as they were. Thanks to Battle.net, you would always be able to find someone to play with, then be in a game with them within seconds. Patches would download automatically when you logged onto the service, a first step toward the always-online mentality that has taken over since. And Diablo was the very first Battle.net-enabled game. If it had achieved nothing else, it would be historically notable for this fact alone.

With Diablo being refined into an ever more effortless, frictionless experience, it was inevitable that another legacy of the roguelikes would fall away. The Southerners told the Northerners that perma-death just wouldn’t fly in the modern commercial market. David Brevik kvetched, but there was no way he was going to win this argument. Even if it hadn’t started out that way, Diablo was evolving into a lean-back rather than a lean-forward sort of game, designed to be more fun than it was demanding. Mistakes would happen in a game like that, and nobody wanted to lose a character he had spent eight hours building because he got distracted by the pizza guy ringing the doorbell. By way of compromise, the Southerners did agree to allow only one save slot, which fit in nicely with the game’s ethic of simplicity anyway. And of course, if anyone really wanted to play Diablo like a roguelike, there was nothing but the temptation of that extant last save file preventing it.

Warcraft II had made Blizzard one of the biggest names in mainstream gaming, on a level with id Software of DOOM and Quake fame and Westwood Studios, the makers of Command & Conquer, Blizzard’s great rival in the real-time-strategy space. Everything Blizzard did was now of interest to obsessive gamers. Diablo was to be their first game that ran under Windows 95 rather than MS-DOS; like Battle.net, this was another outcome of the company’s guiding principle of frictionless ease in all things. In the summer of 1996, Blizzard arranged to have a two-level demo of Diablo included on a Microsoft DirectX sampler disc. Interest in the game exploded. It became easily the most anticipated title of the 1996 holiday season.

That fact makes the next bit that much more remarkable. When the last possible instant to send the game out to be burned onto hundreds of thousands of CDs and shipped to stores all over the country in time for the Christmas buying season arrived, Blizzard took a long, hard look at its current state. It wasn’t in terrible shape, but it still had its fair share of minor niggles here and there. The vast majority of publishers would have said it was good enough and shipped it at this point — after all, they could always patch it later, right? (Wasn’t that one of the points of Battle.net?) But Blizzard decided to wait, resigning themselves to letting Christmas slip by without a major new release from them. It was better, they judged, to make sure Diablo was just exactly perfect when it did ship. More than anything else, it would be this thoroughgoing focus on quality — quality at almost any cost — that would make Blizzard one of the most extraordinary success stories in the entire history of gaming. From the beginning, their tender-aged founders understood something that eluded a bizarre number of their more grizzled peers: that one’s reputation is one’s most precious business asset of all, being laborious to build up and disconcertingly easy to lose. In an industry fueled by short-term hype, they took the long view. “If you truly put the game first,” says Allen Adham, “then decisions like holding a product an extra couple of months, even if it means missing Christmas, become fairly clear.” Gamers came to know that Blizzard would never let them down, and this knowledge fueled the company’s rise. The sacrificing of tens of thousands of sales the following month led to millions and millions of sales over the following decade.

So, Diablo missed the Christmas deadline, but not by much: the first copies wended their way onto store shelves between Christmas and New Year’s, when lots of younger gamers had gift checks from uncles and aunts and grandparents burning holes in their pockets. Others trotted down to their local software store and traded some less desirable Christmas present for Diablo. Retailers fended off the return-season blues by turning Diablo’s release into an event, plastering posters all over their walls and filling their display windows with mannequins of the devil on the cover. All told, it’s questionable whether the belated release really hurt Diablo very much at all, even in the shortest of terms. By spring, it was clear both from the sales reports and from the level of activity on Battle.net that Diablo was the hottest computer game in the world. It was blowing up huge, even by comparison with Warcraft II. Diablo’s sales surpassed 1 million units within months.

Diablo’s eventual impact on the culture and practices of computer gaming was arguably more pronounced than that of any individual title since DOOM. It introduced phrases like “loot drop” into the gamer lexicon; it was the pioneer of a new era of easy online multiplayer gaming, between friends and strangers alike; it single-handedly dragged the entire genre of the CRPG back into public favor. This long shadow can make it oddly difficult to discuss as just a game. When I went back to play it recently for the first time in a quarter of a century — boy, I’m getting old! — I was impressed if not blown away by the experience. And yet, despite my best efforts, I couldn’t quite avoid allowing my opinions to be colored by some of what Diablo has wrought. We’ll get to that in due course. But first, Diablo the game…[1]

[1] The commentary in this article deals only with the original Diablo. An expansion pack to the game called Hellfire, created out-of-house by the Sierra subsidiary Synergistic Software, was released in late 1997. The relationship between Blizzard North and Synergistic was plagued with discord from first to last, and David Brevik and many of his colleagues have since disowned many elements of Hellfire as fatal dilutions of their vision. So, we’ll honor Blizzard North’s original intentions here and stick to the base game.

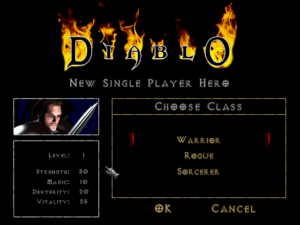

When you start a new adventure in the world of Diablo, you first choose your character from three fantasy archetypes: the warrior, who is best at bashing things with his big old sword; the rogue, who fights a little more surgically, preferring the bow and arrow; or the mage, who unlike his counterparts is pretty good with spells from the outset. But you don’t spend any time fussing about with statistics. You’re dropped into the hardscrabble village of Tristram, which has had the misfortune to be built over a demon’s not-so-final resting place, as soon as you’ve given your character a name. In Tristram, you can buy and sell in a few different shops and talk to a handful of villagers, but it’s all kept very short and sweet. Before you know it, you’ll be in the first dungeon, which is found beneath the graveyard of the local church.

You’ll have to fight your way through sixteen dungeon levels in all, divided into four sets of four that open up one after another, presenting ever more powerful monsters for your ever more powerful character to battle. In keeping with the game’s roguelike heritage, each level is procedurally generated. There is a modicum of story, even a cut scene here and there, but nothing you ever need to think too much about. (Although a fairly elaborate backstory does appear in the manual, it too is nothing you need to concern yourself with if you don’t want to. It was tacked on very late in development by Blizzard South, who realized that some gamers at least still liked to see such things.) There are also some pre-scripted quests to carry out, selected randomly from a pool of possibilities each time you start a new game. Most of these are given to you by the townspeople when you talk to them — but, again, all are extremely basic, coming down to “kill this monster” or “collect this object” (which, come to think of it, always involves killing the monster guarding it).

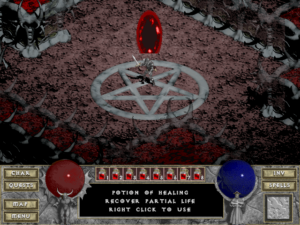

In practice, playing Diablo is a very simple loop. You go into the depths and make as much progress as you can against the hordes of enemies that await you there. Then you return topside to sell off the stuff you’ve collected that you don’t need, heal up and buy any potions or other equipment you think you’re going to need, and go downstairs again. Rinse and repeat, until you meet and hopefully kill Diablo himself. Unlike the typical epic CRPG, Diablo is intended to be a game you play over and over again. Thus the average playthrough takes only ten hours or so, as opposed to the hundred or more of its weightier brethren.

Blizzard North’s stated goal was to make Diablo “so easy your mom could play it.” Setting aside the condescension of their choice of words, they certainly achieved their goal in spirit. Fighting monsters is simply a matter of clicking on them, which causes your character to whack them with his melee weapon or fire off an arrow or spell at them. Tactics in the dungeons come down to common sense: whittling away at the edges of large groups of monsters instead of charging right into the middle of them, using doorways and narrow corridors to your advantage, keeping a healthy distance and using ranged attacks if you’re playing a rogue or a mage. That said, it does pay to learn the monsters’ strengths and weaknesses and tailor your attacks to them: skeletons, for example, are more vulnerable to attacks by blunt weapons such as maces than edged weapons such as swords.

The biggest source of tension is the question of when you should leave off in the dungeon and return to the town for succor. Usually when you die, it’s because you’ve pressed your luck just a bit too much. On the whole, though — and ironically given its line of descent through one of the most infamously unforgiving sub-genres in all of gaming — Diablo is one of the less intrinsically challenging games I’ve played in the course of writing these histories. If you do find yourself feeling under-powered and over-matched — perhaps because you made poor choices about where to allocate the ability points your character is awarded every time she levels up — you can always restart the game whilst retaining your existing character, complete with her current statistics and all of her current kit. Poor character-building choices or a general lack of skill can, in other words, always be compensated for with patient grinding.

In lieu of challenge, Diablo thrives on its polished addictiveness. Vanishingly few of its contemporaries can even begin to touch it in terms of intuitive playability. It’s clear that every last detail — every last window, every last hotkey, every last mouse click — was fussed over for hours and hours, until it was just what it ought to be. The auto-map is a thing of wonder that I have to call out for special praise. In CRPGs of the 1990s, such things are usually found in a separate window on the main display that is always too small for comfort and yet takes up too much precious screen real estate — or the auto-map can only be accessed on a separate screen, leaving you constantly flipping back and forth between the two views as you try to get somewhere. Diablo’s auto-map, on the other hand, appears as a transparent overlay right on top of the usual display, toggled on and off by pressing the TAB key. Like everything else here, it’s elegant and perfect, a brilliant stroke that could only have come about through dedicated, dogged iteration. You have to be in awe of the craftsmanship of this game. It knows precisely what it wants to be, and it achieves its best self in every respect.

This statement applies equally to the game’s aesthetics, which are nothing short of masterful; whatever Diablo lacks in set-piece storytelling, it makes up for in atmosphere. If I had to describe that atmosphere in one word, it would be “Gothic.” Diablo captures the side of the Middle Ages that all of those Tolkienesque CRPGs cheerfully ignore in the midst of all their elves and halflings romping merrily through the forest: the all-encompassing religion of Christianity, the almost tangible reality of another life that awaits after this one, which is as much a source of fear as comfort in the minds of the people. Diablo taps into something deep and almost primal in the human psyche, having more in common with The Exorcist than The Lord of the Rings, more in common with Hieronymus Bosch than Boris Vallejo. The shocking ending, which I won’t spoil here, is likewise more horror than fantasy. Diablo is lucky it wasn’t released during the Satanic Panic of the 1980s, given that it sports much of what all those concerned parents were looking for in Dungeons & Dragons and not quite finding.

Matt Uelmen’s amazingly sophisticated soundtrack, recorded partially on real instruments at a time when many games were still relying entirely on tinny MIDI sound fonts, could easily have played behind a big-budget horror movie. The “Town” theme, featuring the best use of a twelve-string guitar since the heyday of the Byrds, is especially unforgettable; it took me back instantly when I heard it again after 25 years away.

All that said, I won’t go so far as to say that Diablo itself is scary. It seems to me that gameplay that revolves around killing hundreds of monsters is incompatible with true horror. Horror depends on a feeling of powerlessness, whereas Diablo is, like almost all CRPGs, a power fantasy at bottom. Nevertheless, it’s as audiovisually focused and accomplished as any game I’ve ever seen. I say this even as I freely acknowledge that its unrelentingly dark atmosphere tends to wear thin with me pretty quickly. (For me, a bit of light and joy brings out the shadows that much more effectively.)

And sadly, that statement pretty much sums up my response to Diablo as a whole, which is the same today as it was 25 years ago. It does what it does brilliantly. I just wish I liked what it does a bit more. Let me tell you how I got on with it when I played it for this article…

Given its titanic importance, my first plan was to play through it three times, once for each of the character classes. I first bashed my way to the finish line as a warrior. As I did so, I admired all of the qualities described above, but I also found the experience a little hollow; I didn’t dread sitting down with the game on the couch after dinner each evening for an hour or two, but neither did I look forward to it all that much — and nor did my wife have to tell me twice that it was time for bed, as she has to when I’m playing some games. I came to regard my Diablo sessions much as I might, say, an old episode of Law & Order: a low-effort something to pass the time, which I could do while chatting intermittently with my wife about completely different things. When I finished the game, I put it on the shelf for several months, intending always to get back to it but never feeling all that excited about doing so. Finally, knowing I had to write this article soon, I forced myself to start a new game as a rogue, hoping that character might be more interesting to play. But this time I found myself actively bored; “been there, done that” was the dominant note. Halfway through, I just couldn’t muster the will to continue. I could admire Diablo for its craftsmanship, but I couldn’t love it.

What am I to make of this? Obviously, I’m in the group of people who just aren’t really in the market for what Diablo is selling, a group who tend to be as vocal in their criticisms as the game’s fans are in their praise. But I’m not eager to join the chest-beating grognards who call Diablo dumbed down, or who shout that it’s not even a real CRPG at all. (Is there anything more tedious than a semantic debate between intractably biased parties?) It’s actually not Diablo’s simplicity that puts me off; I’m much more likely to scold a game for being too complicated than for being too simple. And then too, over the years I’ve been writing these histories, I’ve found many (perhaps most) games from the 1980s and 1990s to be more rather than less difficult than I really need them to be, so it’s not precisely the lack of challenge that bothers me about Diablo either. Too easy is far, far better in my book than too hard.

On the other hand, I do tend to prefer human-crafted to procedurally-generated content in general, and Diablo doesn’t do anything to disabuse me of that notion. Its randomized nature means that its dungeons can only be a collection of rooms, corridors, and monsters, without the guileful tricks and traps and drama of the best dungeon crawlers of yore. Beyond that, and beyond an aesthetic presentation that isn’t quite to my taste, I think my lack of receptivity to Diablo is to do with the passivity of the experience. I’ve seen it described as a good “hangover game,” what with how little it actually asks of you. Even more tellingly, I’ve seen it called the gaming equivalent of candy: you can eat an awful lot of it without thinking much about it, but it doesn’t leave you feeling all that great afterward.

One nice thing about getting older is that you learn what makes you feel good and bad. I’ve long since learned, for instance, that I’m happiest if I don’t play games for more than a couple of hours per day, even on those rare occasions when I have time for more. But I want those hours to have substance — to yield fun stories to tell, interesting decisions to remember, strategies or puzzle solutions to muse about while I’m cooking dinner or working out or taking a walk, accomplishments to feel good about. For me, Diablo is peculiarly flat; I went, I saw, I clicked on monsters. For me, it feels less like a time waster than a waste of time. I almost find myself wishing the game wasn’t so superbly polished in every particular, just to relieve the monotony.

More substantively, I do see one aspect of Diablo as vaguely ominous in the larger context of gaming history: the way it uses stuff to do the heavy lifting of player motivation. As I mentioned above, “loot drops” became a thing in gaming with this game. Although CRPGs had been tempting and teasing players with the prospect of a new magic sword or armor as long as they had existed, Diablo put that temptation front and center, making it the main driver of its gameplay loop. In doing so, David Brevik and company consciously tapped into something besides the allure of the Gothic that is primal in human psychology. They liked to use the analogy of a slot machine: you clicked endlessly on monsters in the hope that eventually something really good would drop out of one of them. When I hear these anecdotes, I can’t help but think of the glassy-eyed zombies to be found in casinos from Shreveport to Macau, pulling the handles of the one-armed bandits again and again for hours, likewise waiting for something good to drop into their laps. Pat Wyatt, Blizzard’s vice president of research and development at the time of Diablo’s creation, proffers an even more disturbing metaphor: “Positive reinforcement is one of the hardest types of conditioning to break, which is why pets beg at the table: rewards may not happen very often, but every once in a while you get a scrap, so they keep begging.” In the decades after Diablo, this Pavlovian loop would be exploited mercilessly by cynical game makers, trapping players in unsatisfying cycles of addiction that drained their time and their wallets, leaving them with nothing but a few virtual trinkets to their names in a virtual world that would be gone in a year or two anyway.

In the late 1990s, the dangerous addictiveness of loot drops was most in evidence in multi-player Diablo, as played on Battle.net, which in its early years was a fascinating if ofttimes toxic social laboratory in its own right. I do have more to say about it, but I think I’ll reserve it for a future article which will look at this formative period of online gaming in a more holistic way.

Instead, let me say in conclusion today what I often say when I end a review on a downer note: that no game is for everyone, and no way of having fun is wrong, as long as you aren’t hurting anyone else or yourself. If you love Diablo, you’re in good company. It’s a fine, fine game by any objective measure. Whatever cynicism it might have inspired is on the conscience of the folks who displayed it; this game was made for all the right reasons. It’s a triumph of care and dedication from which many another studio could learn, then and now. Just be sure to remember that there’s a beautiful world out there with plenty of cloudless blue skies to contrast with Diablo’s perpetually sooty ones, and you’ll be just fine. Click away, my friends, click away!


(Sources: As was the case with my last article, I’m hugely indebted to David L. Craddock for Stay Awhile and Listen Book I and Book II, which I plundered for quotes with all the enthusiasm of a Diablo loot hunter. By all means, check out these books if you’re interested in learning more about the Blizzard story.

Magazine sources include Computer Gaming World of August 1996, December 1996, March 1997, April 1997, and May 1997; Retro Gamer 43 and 103. Online sources include Lee Hutchinson’s interview with David Brevik for Ars Technica, the Dev Game Club interview with Brevik, and Brevik’s Diablo post-mortem at the 2016 Game Developers Conference.

Diablo and its controversial expansion Hellfire are available as a single digital purchase at GOG.com.)

Footnotes

1. The commentary in this article deals only with the original Diablo. An expansion pack to the game called Hellfire, created out-of-house by the Sierra subsidiary Synergistic Software, was released in late 1997. The relationship between Blizzard North and Synergistic was plagued with discord from first to last, and David Brevik and many of his colleagues have since disowned many elements of Hellfire as fatal dilutions of their vision. So, we’ll honor Blizzard North’s original intentions here and stick to the base game.