It’s tough to put a neat label on Wing Commander IV: The Price of Freedom. On the one hand, it was a colossally ambitious and expensive project — in fact, the first computer game in history with a budget exceeding $10 million. On the other, it was a somewhat rushed, workmanlike game, developed in half the time of Wing Commander III using the same engine and tools. That these two things can simultaneously be true is down to the strange economics of mid-1990s interactive movies.

Origin Systems and Chris Roberts, the Wing Commander franchise’s development studio and mastermind respectively, wasted very little time embarking on the fourth numbered game in the series after finishing up the third one in the fall of 1994. Within two weeks, Roberts was hard at work on his next story outline. Not long after the holiday season was over and it was clear that Wing Commander III had done very well indeed for itself, his managers gave him the green light to start production in earnest, on a scale that even a dreamer like him could hardly have imagined a few years earlier.

Like its predecessor, Wing Commander IV was destined to be an oddly bifurcated project. The “game” part of the game — the missions you actually fly from the cockpit of a spaceborne fighter — was to be created in Origin’s Austin, Texas, offices by a self-contained and largely self-sufficient team of programmers and mission designers, using the existing flight engine with only modest tweaks, without a great deal of day-to-day communication with Roberts himself. Meanwhile the latter would spend the bulk of 1995 in Southern California, continuing his career as Hollywood’s most unlikely and under-qualified movie director, shooting a script created by Frank DePalma and Terry Borst from his own story outline. It was this endeavor that absorbed the vast majority of a vastly increased budget.

For there were two big, expensive changes on this side of the house. One was a shift away from the green-screen approach of filming real actors on empty sound stages, with the scenery painted in during post-production by pixel artists; instead Origin had its Hollywood partners Crocodile Productions build traditional sets, no fewer than 37 of them in all. The other was the decision to abandon videotape in favor of 35-millimeter stock, the same medium on which feature films were shot. This was a dubiously defensible decision on practical grounds, what with the sharply limited size and resolution of the computer-monitor screens on which Roberts’s movie would be seen, but it says much about where the young would-be auteur’s inspirations and aspirations lay. “My goal is to bring the superior production values of Hollywood movies to the interactive realm,” he said in an interview. Origin would wind up paying Crocodile $7.7 million in all in the pursuit of that lofty goal.

The hall of the Terran Assembly was one of the more elaborate of the Wing Commander IV sets, showing how far the series had come but also in a way how far it still had to go, what with its distinctly plastic, stage-like appearance. It will be seen on film in a clip later on in this article.

These changes served only to distance the movie part of Wing Commander from the game part that much more; now the folks in Austin didn’t even have to paint backgrounds for Roberts’s film shoot. More than ever, the two halves of the whole were water and oil rather than water and wine. All told, it’s doubtful whether the flying-and-shooting part of Wing Commander IV absorbed much more than 10 percent of the total budget.

Origin was able to hire most of the featured actors from last time out to return for Wing Commander IV. Once again, Mark Hamill, one of the most sensible people in Hollywood, agreed to head up the cast as Colonel Blair, the protagonist and the player’s avatar, for a salary of $419,100 for the 43-day shoot. (“A lot of actors spend their whole lives wanting to be known as anything,” he said when delicately asked if he ever dwelt upon his gradual, decade-long slide down through the ranks of the acting profession, from starring as Luke Skywalker in the Star Wars blockbusters to starring in videogames. “I always thought I should be happy for what I have instead of being unhappy for what I don’t have. So, you know, if things are going alright with your family… I don’t know, not really. I think it’s good.”) Likewise, Tom Wilson ($117,300) returned to play Blair’s fellow pilot and frenemy Maniac; Malcolm McDowell ($285,500) again played the stiffly starched Admiral Tolwyn; and John Rhys-Davies ($52,100) came back as the fighter jock turned statesman Paladin. After the rest of the cast and incidental expenses were factored in, the total bill for the actors came to just under $1.4 million.

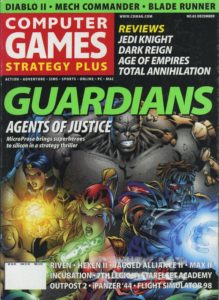

Far from being taken aback by the numbers involved, Origin made them a point of pride. If anything, it inflated them; the total development cost of $12 million which was given to magazines like Computer Gaming World over the course of one of the most extensive pre-release hype campaigns the industry had ever seen would appear to be a million or two over the real figure, based on what I’ve been able to glean from the company’s internal budgeting documents. Intentionally or not, the new game’s subtitle made the journalists’ headlines almost too easy to write: clearly, the true “price of freedom” was $12 million. The award for the most impassioned preview must go to the British edition of PC Gamer, which proclaimed that the game’s eventual release would be “one of the most important events of the twentieth century.” On an only slightly more subdued note, Computer Gaming World noted that “if Wing Commander III was like Hollywood, this game is Hollywood.” The mainstream media got in on the excitement as well: CNN ran a segment on the work in progress, Newsweek wrote it up, and Daily Variety was correct in calling it “the most expensive CD-ROM production ever” — never mind a million or two here or there. Mark Hamill and Malcolm McDowell earned some more money by traveling the morning-radio and local-television circuit in the final weeks before the big release.

Wing Commander IV was advertised on television at a time when that was still a rarity for computer games. The advertisements blatantly spoiled what was intended to be a major revelation about the real villain of the story. (You have been warned!)

The game was launched on February 8, 1996, in a gala affair at the Beverly Hills Planet Hollywood, with most of the important cast members in attendance to donate their costumes — “the first memorabilia from a CD-ROM game to be donated to the internationally famous restaurant,” as Origin announced proudly. (The restaurant itself appears to have been less enthused; the costumes were never put on display after the party, and seem to be lost now.) The assembled press included representatives of CNN, The Today Show, HBO, Delta Airlines’s in-flight magazine, and the Associated Press among others. In the weeks that followed, Chris Roberts and Mark Hamill did a box-signing tour in conjunction with Incredible Universe, a major big-box electronics chain of the time.

The early reviews were positive, and not just those in the nerdy media. “The game skillfully integrates live-action video with computer-generated graphics and sophisticated gameplay. Has saving the universe ever been this much fun?” asked Newsweek, presumably rhetorically. Entertainment Weekly called Wing Commander IV “a movie game that takes CD-ROM warfare into the next generation,” giving it an A- on its final report card. The Salt Lake City Tribune said that it had “a cast that would make any TV-movie director jealous — and more than a few feature-film directors as well. While many games tout themselves as interactive movies, Wing Commander IV is truly deserving of the title — a pure joy to watch and play.” The Detroit Free Press said that “at times, it was like watching an episode of a science-fiction show.”

The organs of hardcore gaming were equally fulsome. Australia’s Hyper magazine lived up to its name (Hyperventilate? Hyperbole?) with the epistemologically questionable assertion that “if you don’t play this then you really don’t own a computer.” Computer Gaming World, still the United States’s journal of record, was almost as effusive, writing that “as good as the previous installment was, it served only as a rough prototype for the polished chrome that adorns Wing Commander IV. This truly is the vanguard of the next generation of electronic entertainment.”

Surprisingly, it was left to PC Gamer, the number-two periodical in the American market, normally more rather than less hype-prone than its older and somewhat stodgier competitor, to inject a note of caution into the critical discourse, by acknowledging how borderline absurd it was on the face of it to release a game in which 90 percent of the budget had gone into the cut scenes.

How you feel about Wing Commander IV: The Price of Freedom is going to depend a lot on how you felt about Wing Commander III and the direction the series seems to be headed in.

When the original Wing Commander came out, it was a series of incredible, state-of-the-art space-combat sequences, tied together with occasional animated cut scenes. Today, Wing Commander IV seems more like a series of incredible, full-motion-video cut scenes tied together with occasional space-combat sequences. You can see the shift away from gameplay and toward multimedia flash in one of the ads for Wing Commander IV; seven of the eight little “bullet points” that list the game’s impressive new features are devoted to improvements in the quality of the video. Only the last point says anything about actual gameplay. If the tail’s not wagging the dog yet, it’s getting close.

For all its cosmetic improvements, Wing Commander IV feels just a little hollow. I can’t help thinking about what the fourth Wing Commander game might be like if the series had moved in the opposite direction, making huge improvements in the actual gameplay, rather than spending more and more time and effort on the stuff in between.

Still, these concerns were only raised parenthetically; even PC Gamer’s reviewer saw fit to give the game a rating of 90 percent after unfurrowing his brow.

Today, however, the imbalance described above has become even more difficult to overlook, and seems even more absurd. As my regular readers know, narrative-oriented games are the ones I tend to be most passionate about; I’m the farthest thing from a Chris Crawford, insisting that the inclusion of any set-piece story line is a betrayal of interactive entertainment’s potential. My academic background is largely in literary studies, which perhaps explains why I tend to want to read games like others do books. And yet, with all that said, I also recognize that a game needs to give its player something interesting to do.

I’m reminded of an anecdote from Steve Diggle, a guitarist for the 1970s punk band Buzzcocks. He tells of seeing the keyboardist for the progressive-rock band Yes performing with “a telephone exchange of electronic things that nobody could afford or relate to. At the end, he brought an alpine horn out, because he was Swiss. It was a long way from Little Richard. I thought, ‘Something’s got to change.’” There’s some of the same quality to Wing Commander IV. Matters have gone so far out on a limb that one begins to suspect the only thing left to be done is just to burn it all down and start over.

But we do strive to be fair around here, so let’s try to evaluate the movie and the game of Wing Commander IV on their own merits before we address their imperfect union.

Chris Roberts is not a subtle storyteller; his influences are always close to the surface. The first three Wing Commander games were essentially a retelling of World War II in the Pacific, with the Terran Confederation for which Blair flies in the role of the United States and its allies and the evil feline Kilrathi in that of Japan. Now, with the alien space cats defeated once and for all, Roberts has moved on to the murkier ethical terrain of the Cold War, where battles are fought in the shadows and friend and foe are not so easy to distinguish. Instead of being lauded like the returning Greatest Generation were in the United States after World War II, Blair and his comrades who fought the good fight against the Kilrathi are treated more like the soldiers who came back from Vietnam. We learn that we’ve gone from rah-rah patriotism to something else the very first time we see Blair, when he meets a down-on-his-luck fellow veteran in a bar and can, at you the player’s discretion, give him a few coins to help him out. Shades of gray are not really Roberts’s forte; earnest guy that he is, he prefers the primary-color emotions. Still, he’s staked out his dramatic territory and now we have to go with it.

Having been relegated to the reserves after the end of the war with the Kilrathi, Blair has lately been running a planetside farm, but he’s called back to active duty to deal with a new problem on the frontiers of the Terran Confederation: a series of pirate raids in the region of the Border Worlds, a group of planets that is allied with the Confederation but has always preferred not to join it formally. Because the attacks are all against Confederation vessels rather than those of the Border Worlds, it is assumed that the free-spirited inhabitants of the latter are behind them. I trust that it won’t be too much of a spoiler if I reveal here that the reality is far more sinister.

By all means, we should give props to Roberts for not just finding some way to bring the Kilrathi back as humanity’s existential threat. They are still around, and even make an appearance in Wing Commander IV, but they’ve seen the error of their ways with Confederation guidance and are busily rebuilding their society on more peaceful lines. (The parallels with World War II-era — and now postwar — Japan, in other words, still hold true.)

For all the improved production values, the Kilrathi in Wing Commander IV still look as ridiculous as ever, more cuddly than threatening.

The returns from Origin’s $9 million investment in the movie are front and center. An advantage of working with real sets instead of green screens is the way that the camera is suddenly allowed to move, making the end result look less like something filmed during the very earliest days of cinema and more like a product of the post-Citizen Kane era. One of the very first scenes is arguably the most impressive of them all. The camera starts on the ceiling of a meeting hall, looking directly down at the assembled dignitaries, then slowly sweeps to ground level, shifting as it moves from a vertical to a horizontal orientation. I’d set this scene up beside the opening of Activision’s Spycraft (released at almost the same time as Wing Commander IV, as it happens) as the most sophisticated that this generation of interactive movies ever got by the purely technical standards of film-making. (I do suspect that Wing Commander IV’s relative adroitness is not so much down to Chris Roberts as to its cinematographer, a 21-year Hollywood veteran named Eric Goldstein.)

The acting, by contrast, is on about the same level as Wing Commander III: professional if not quite passionate. Mark Hamill’s dour performance is actually among the least engaging. (This is made doubly odd by the fact that he had recently been reinventing himself as a voice actor, through a series of portrayals — including a memorable one in the game Gabriel Knight: Sins of the Fathers — that are as giddy and uninhibited as his Colonel Blair isn’t.) On the other hand, it’s a pleasure to hear Malcolm McDowell and John Rhys-Davies deploy their dulcet Shakespearian-trained voices on even pedestrian (at best) dialog like this. But the happiest member of the cast must be Tom Wilson, whose agent’s phone hadn’t exactly been ringing off the hook in recent years; his traditional-cinema career had peaked with his role as the cretinous villain Biff in the Back to the Future films. Here he takes on the similarly over-the-top role of Maniac, a character who had become a surprise hit with the fans in Wing Commander III, and sees his screen time increased considerably in the fourth game as a result. As comic-relief sidekicks go, he’s no Sancho Panza, but he does provide a welcome respite from Blair’s always prattling on, a little listlessly and sleepy-eyed at times, about duty and honor and what hell war is (such hell that Chris Roberts can’t stop making games about it).

That said, the best humor in Wing Commander IV is of the unintentional kind. There’s a sort of Uncanny Valley in the midst of this business of interactive movies, as there is in so many creative fields. When the term was applied to games that merely took some inspiration from cinema, perhaps with a few (bad) actors mouthing some lines in front of green screens, it was easier to accept fairly uncritically. But the closer games like this one come to being real movies, the more their remaining shortcomings seem to stand out, and, paradoxically, the farther from their goal they seem to be. The reality is that 37 sets isn’t many by Hollywood standards — and most of these are cheap, sparse, painfully plastic-looking sets at that. Like in those old 1960s episodes of Star Trek, everybody onscreen visibly jumps — not in any particular unison, mind you — when the camera shakes to indicate an explosion and the party-supply-store smoke machines start up. The ray guns they shoot each other with look like gaudy plastic toys that Wal Mart would be ashamed to stock, while the accompanying sound effects would have been rejected as too cheesy by half by the producers of Battlestar Galactica.

All of this is understandable, even forgivable. A shooting budget of $9 million may have been enormous in game terms, but it was nothing by the standards of a Hollywood popcorn flick. (The 1996 film Star Trek: First Contact, for example, had five times the budget of Wing Commander IV, and it was not even an especially expensive example of its breed.) In the long run, interactive movies would find their Uncanny Valley impossible to bridge. Those who made them believed that they were uniquely capable of attracting a wider, more diverse audience than the people who typically played games in the mid-1990s. That proposition may have been debatable, but we’ll take it at face value. The problem was that, in order to attract these folks, they had to look like more than C-movies with aspirations of reaching B status. And the games industry’s current revenues simply didn’t give them any way to get from here to there. Wing Commander IV is a prime case in point: the most expensive game ever made still looked like a cheap joke by Hollywood standards.

The spaceships of the far future are controlled by a plastic steering wheel that looks like something you’d find hanging off of a Nintendo console. Pity the poor crew member whose only purpose in life seems to be standing there holding on to it and fending off the advances of Major Todd “Maniac” “Sexual Harassment is Hilarious!” Marshall.

Other failings of Wing Commander IV, however, are less understandable and perchance less forgivable. It’s sometimes hard to believe that this script was the product of professional screenwriters, given the quantity of dialog which seems lifted from a Saturday Night Live sketch, which often had my wife and me rolling on the floor when we played the game together recently. (Or rather, when I played and she watched and laughed.) “Just because we operate in the void of space, is loyalty equally weightless?” Malcolm McDowell somehow manages to intone in that gorgeously honed accent of his without smirking. A young woman mourning the loss of her beau (as soon as you saw that these two had a thing going, you knew he was doomed, by the timeless logic of war movies) chooses the wrong horse as her metaphor and then just keeps on riding it out into the rhetorical sagebrush: “He’s out there along with my heart. Both no more than space dust. People fly through him every day and don’t even know it.”

Then there’s the way that everyone, excepting only Blair, is constantly referred to only by his or her call sign. This doesn’t do much to enhance the stateliness of a formal military funeral: “Some may think that Catscratch will be forgotten. They’re wrong. He’ll stay in our hearts always.” There’s the way that all of the men are constantly saluting each other at random moments, as if they’re channeling all of the feelings they don’t know how to express into that act — saluting to keep from crying, saluting as a way to avoid saying, “I love you, man!,” saluting whenever the screenwriters don’t know what the hell else to have them do. (Of course, they all do it so sloppily that anyone who really was in the military will be itching to jump through the monitor and smack them into shape.) And then there’s the ranks and titles, which sound like something children on a playground — or perhaps (ahem!) someone else? — came up with: Admiral Tolwyn gets promoted to “Space Marshal,” for Pete’s sake.

I do feel just a little bad to make fun of all this so much because Chris Roberts’s heart is clearly in the right place. At a time when an increasing number of games were appealing only to the worst sides of their players, Wing Commander IV at least gave lip service to the ties that bind, the things we owe to one another. It’s not precisely wrong in anything it says, even if it does become a bit one-note in that tedious John Wayne kind of way. Deep into the game, you discover that the sinister conspiracy you’ve been pursuing involves a new spin on the loathsome old arguments of eugenics, those beliefs that some of us have better genes than others and are thus more useful, valuable human beings, entitled to things that their inferior counterparts are not. Wing Commander IV knows precisely where it stands on this issue — on the right side. But boy, can its delivery be clumsy. And its handling of a more complex social issue like the plight of war veterans trying to integrate back into civilian society is about as nuanced as the old episodes of Magnum, P.I. that probably inspired it.

But betwixt and between all of the speechifying and saluting, there is still a game to play, consisting of about 25 to 30 missions’ worth of space-combat action, depending on the choices you make from the interactive movie’s occasional menus and how well you fly the missions themselves. The unsung hero of Wing Commander IV must surely be one Anthony Morone, who bore the thankless title of “Game Director,” meaning that he was the one who oversaw the creation of the far less glamorous game part of the game back in Austin while Chris Roberts was off in Hollywood shooting his movie. He did what he could with the limited time and resources at his disposal.

I noted above how the very way that this fourth game was made tended to pull the two halves of its personality even farther apart. That’s true on one level, but it’s also true that Morone made some not entirely unsuccessful efforts to push back against that centrifugal drift. Some of the storytelling now happens inside the missions themselves — something Wing Commander II, the first heavily plot-based entry in the series, did notably well, only to have Wing Commander III forget about it almost completely. Now, though, it’s back, such that your actions during the missions have a much greater impact on the direction of the movie. For example, at one point you’re sent to intercept some Confederation personnel who have apparently turned traitor. In the course of this mission, you learn what their real motivations are, and, if you think they’re good ones, you can change sides and become their escort rather than their attacker.

Indeed, there are quite a few possible paths through the story line and a handful of different endings, based on both the choices you take from those menus that pop up from time to time during the movie portions and your actions in the heat of battle. In this respect too, Wing Commander IV is more ambitious and more sophisticated than Wing Commander III.

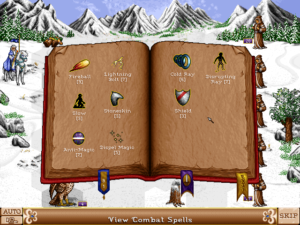

A change in Wing Commander IV that feels very symbolic is the removal of any cockpit graphics. In the first game, seeing your pilot avatar manipulate the controls and seeing evidence of damage in your physical surroundings was extraordinarily verisimilitudious. Now, all that has been discarded without a second thought by a game with other priorities.

But is it enough? It’s hard to escape a creeping sense of ennui as you play this game. The flight engine and mission design still lag well behind LucasArts’s 1994 release TIE Fighter, a game that has aged much better than this one in all of its particulars. Roughly two out of every three missions here still don’t have much to do with the plot and aren’t much more than the usual “fly between these way points and shoot whatever you find there,” a product of the need to turn Roberts’s movie into a game that lasts longer than a few hours, in order to be sure that players feel like they have gotten their $50 worth. Worse, the missions are poorly balanced, being much more difficult than those in the previous game; enemy missiles are brutally overpowered, being now virtually guaranteed to kill you with one hit. The sharply increased difficulty feels more accidental than intentional, a product of the compressed development schedule and a resultant lack of play-testing. However it came about, it pulls directly against Origin’s urgent need to attract more (read: more casual) gamers to the series in order to justify its escalating budgets. Here as in so many other places in this game, the left hand didn’t know what the right hand was doing, to the detriment of both.

In the end, then, neither the movie nor the game of Wing Commander IV can fully stand up on its own, and in combination they tend to clash more than they create any scintillating synergy. One senses when playing through the complete package that Origin’s explorations in this direction have indeed reached a sort of natural limit akin to that alpine-horn-playing keyboard player, that the only thing left to do now is to back up and try something else.

The magazines may have been carried away by the hype around Wing Commander IV, but not all ordinary gamers were. For example, one by the name of Robert Fletcher sent Origin the following letter:

I have noticed that the game design used by Origin has stayed basically the same. Wing Commander IV is a good example of a game design that has shown little growth. If one were to strip away the film clips, there would be a bare-bones game. The game would look and play like a game from the early 1980s. A very simple branching story line, with a little arcade action.

With all the muscle and talent at Origin’s command, it makes me wonder if Origin is really trying to push the frontier of game design. I know a little of what it takes to develop a game, from all the articles I have read (and I have read many). Many writers and developers are calling for their peers to get back to pushing the frontier of game design, over the development of better graphics.

Wing Commander IV has the best graphics I have seen, and it will be a while before anyone will match this work of art. But as a game, Wing Commander IV makes a better movie.

In its April 1996 issue — notice that date! — Computer Gaming World published an alleged preview of Origin’s plans for Wing Commander V. Silly though the article is, it says something about the reputation that Chris Roberts and his franchise were garnering among gamers like our Mr. Fletcher for pushing the envelope of money and technology past the boundaries of common sense, traveling far out on a limb that was in serious danger of being cut off behind them.

With Wing Commander IV barely a month old, Origin has already announced incredible plans for the next game in the highly successful series. In another first for a computer-game company, Origin says it will design small working models of highly maneuverable drones which can be launched into space, piloted remotely, and filmed. The craft will enable Wing V to have “unprecedented spaceflight realism and true ‘star appeal,’” said a company spokesman.

Although the next game in the science-fiction series sounds more like fiction than science, Origin’s Chris Roberts says it’s the next logical step for his six-year-old creation. “If you think about it,” he says, “Wing Commander [I] was the game where we learned the mechanics of space fighting. We made lots of changes and improvements in Wing II. With Wing III, we raised the bar considerably with better graphics, more realistic action, full-motion video, and big-name stars in video segments. In Wing IV, we upped the ante again with real sets, more video, and, in my opinion, a much better story. We’ve reached the point of using real stars and real sets — now it’s time to take our act on location: real space.”

Analysts say it’s nearly impossible to estimate the cost of such an undertaking. Some put figures at between $100 million and $10 billion, just to deploy a small number of remotely pilotable vehicles beyond Earth’s atmosphere. Despite this, Origin’s Lord British (Richard Garriott) claims that he has much of the necessary financial support from investors. Says Garriott, “When we told [investors] what we wanted to do for Wing Commander V, they were amazed. We’re talking about one of man’s deepest desires — to break free of the bonds of Earth. We know it seems costly in comparison with other games, but this is unlike anything that’s ever been done. I don’t see any problem getting the financial backing for this project, and we expect to recoup the investment in the first week. You’re going to see a worldwide release on eight platforms in 36 countries. It’s going to be a huge event. It’ll dwarf even Windows 95.”

Tellingly, some fans believed the announcement was real, writing Origin concerned letters about whether this was really such a good use of its resources.

Still, the sense of unease about Origin’s direction was far from universal. In a sidebar that accompanied its glowing review of Wing Commander IV in that same April 1996 issue, Computer Gaming World asked on a less satirical note, “Is it time to take interactive movies seriously?” The answer according to the magazine was yes: “Some will continue to mock the concept of ‘Siliwood,’ but the marriage of Hollywood and Silicon Valley is definitely real and here to stay. In this regard, no current game charts a more optimistic path to the future of multimedia entertainment than Wing Commander IV.” Alas, the magazine’s satire would prove more prescient than this straightforward opinion piece. Rather than the end of the beginning of the era of interactive movies, Wing Commander IV would go down in history as the beginning of the end, a limit of grandiosity beyond which further progress was impossible.

The reason came down to the cold, hard logic of dollars and cents, working off of a single data point: Wing Commander IV sold less than half as many copies as Wing Commander III. Despite the increased budget and improved production values, despite all the mainstream press coverage, despite the gala premiere at Planet Hollywood, it just barely managed to break even, long after its initial release. I believe the reason why had everything to do with that Uncanny Valley I described for you. Those excited enough by the potential of the medium to give these interactive movies the benefit of the doubt had already done so, and even many of these folks were now losing interest. Meanwhile the rest of the world was, at best, waiting for such productions to mature enough that they could sit comfortably beside real movies, or even television. But this was a leap that even Origin Systems, a subsidiary of Electronic Arts, the biggest game publisher in the country, was financially incapable of making. And as things currently stood, the return on investment on productions even the size of Wing Commander IV, much less still larger ones, simply wasn’t there.

During this period, a group of enterprising Netizens took it upon themselves to compile a weekly “Internet PC Games Chart” by polling thousands of their fellow gamers on what they were playing just at that moment. Wing Commander IV is present on the lists they published during the spring of 1996, rising as high as number four for a couple of weeks. But the list of games that consistently place above it is telling: Command & Conquer, Warcraft II, DOOM II, Descent, Civilization II. Although some of them do have some elements of story to bind their campaigns together and deliver a long-form single-player experience, none of them aspires to full-blown interactive movie-dom (not even Command & Conquer, which does feature real human actors onscreen giving its mission briefings). In fact, no games meeting that description are ever to be found anywhere in the top ten at the same time as Wing Commander IV.

Thanks to data like this, it was slowly beginning to dawn on the industry’s movers and shakers that the existing hardcore gamers — the people actually buying games today, and thereby sustaining their companies — were less interested in a merger of Silicon Valley and Hollywood than those movers and shakers themselves were. “I don’t think it’s necessary to spend that much money to suspend disbelief and entertain the gamer,” said Jim Namestka of Dreamforge Intertainment by way of articulating the emerging new conventional wisdom. “It’s alright to spend a lot of money on enhancing the game experience, but a large portion spent instead on huge salaries for big-name actors… I question whether that’s really necessary.”

I’ve written quite a lot in recent articles about 1996 as the year that essentially erased the point-and-click adventure game as one of the industry’s marquee genres. Wing Commander IV isn’t one of those, of course, even if it does look a bit like one at times, when you’re wandering around a ship talking to your crew mates. Still, the Venn diagram of the interactive movie does encompass games like Wing Commander IV, just as it does games like, say, Phantasmagoria, the biggest adventure hit of 1995, which sold even more copies than Wing Commander III. In 1996, however, no game inside that Venn diagram became a million-selling breakout hit. The best any could manage was a middling performance relative to expectations, as was the case for Wing Commander IV. And so the retrenchment began.

It would have been financially foolish to do anything else. The titles that accompanied and often bested Wing Commander IV on those Internet PC Games Charts had all cost vastly less money to make and yet sold as well or better. id Software’s Wolfenstein 3D and DOOM, the games that had started the shift away from overblown storytelling and extended multimedia cut scenes and back to the nuts and bolts of gameplay, had been built by a tiny team of scruffy outsiders working on a shoestring; call this the games industry’s own version of Buzzcocks versus Yes.

The shift away from interactive movies didn’t happen overnight. At Origin, the process of bargaining with financial realities would lead to one more Wing Commander game before the franchise was put out to pasture, still incorporating real actors in live-action cut scenes, but on a less lavish, more sustainable — read, cheaper — scale. The proof was right there in the box: Wing Commander: Prophecy, which but for a last-minute decision by marketing would have been known as Wing Commander V, shipped on three CDs in late 1997 rather than the six of Wing Commander IV. By that time, the whole franchise was looking hopelessly passé in a sea of real-time strategy and first-person shooters whose ethic was to get you into the action fast and keep you there, without any clichéd meditations about the hell that is war. Wing Commander IV had proved to be the peak of the interactive-movie mountain rather than the next base camp which Chris Roberts had imagined it to be.

This is not to say that digital interactive storytelling as a whole died in 1996. It just needed to find other, more practical and ultimately more satisfying ways to move forward. Some of those would take shape in the long-moribund CRPG genre, which enjoyed an unexpected revival close to the decade’s end. Adventure games too would soldier on, but on a smaller scale more appropriate to their reduced commercial circumstances, driven now by passion for the medium rather than hype, painted once again in lovely pixel art instead of grainy digitized video. For that matter, even space simulators would enjoy a golden twilight before falling out of fashion for good, thanks to several titles that kicked against what Wing Commander had become by returning the focus to what happened in the cockpit.

All of these developments have left Wing Commander IV standing alone and exposed, its obvious faults only magnified that much more by its splendid isolation. It isn’t a great game, nor even all that good a game, but it isn’t a cynical or unlikable one either. Call it a true child of Chris Roberts: a gawky chip off the old block, with too much money and talent and yet not quite enough.

(Sources: the book Origin’s Official Guide to Wing Commander IV: The Price of Freedom by Melissa Tyler; Computer Gaming World of February 1995, May 1995, December 1995, April 1996, and July 1997; Strategy Plus of December 1995; the American PC Gamer of September 1995 and May 1996; Origin’s internal newsletter Point of Origin of September 8 1995, January 12 1996, February 12 1996, April 5 1996, and May 17 1996; Retro Gamer 59. Online sources include the various other internal Origin documents, video clips, pictures, and more hosted at Wing Commander News and Mark Asher’s CNET GameCenter columns from March 24 1999 and October 29 1999. And, for something completely different, Buzzcocks being interviewed at the British Library in 2016. RIP Pete Shelley.

Wing Commander IV: The Price of Freedom is available from GOG.com as a digital purchase.)