On July 9, 1979, journalists filtered into one of the lavish reception halls in Manhattan’s Plaza Hotel to witness the flashy roll-out of The Source, an online service for home-computer owners that claimed to be the first of its kind. The master of ceremonies was none other than the famous science-fiction and science-fact writer Isaac Asimov. With his nutty-professor persona in full flower, his trademark mutton-chop sideburns bristling in the strobe of the flashbulbs, Asimov said that “this is the beginning of the Information Age! By the 21st century, The Source will be as vital as electricity, the telephone, and running water.”

Actually, though, The Source wasn’t quite the first of its kind. Just eight days before, another new online service had made a quieter official debut. It was called MicroNET, and came from an established provider of corporate time-shared computing services called CompuServe. MicroNET got no splashy unveiling, no celebrity spokesman, just a typewritten announcement letter sent to members of selected computer users groups.

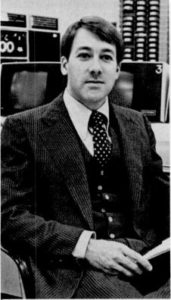

The contrast between the two roll-outs says much about the men behind them, who between them would come to shape much of the online world of the 1980s and beyond. They were almost exactly the same age as one another, but cut from very different cloths. Jeff Wilkins, the executive in charge of CompuServe, could be bold when he felt it was warranted, but his personality lent itself to a measured, incremental approach that made him a natural favorite with the conservative business establishment. “The changes that will come to microcomputing because of computer networks will be evolutionary in nature,” he said just after launching MicroNET. Even after Wilkins left CompuServe in 1985, it would continue to bear the stamp of his careful approach to doing business for many years.

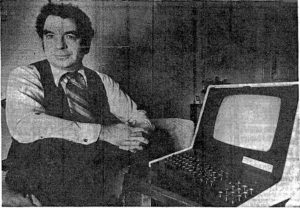

But William von Meister, the man behind The Source and its afore-described splashier unveiling, preferred revolutions to evolutions. He was high-strung, mercurial, careless, sometimes a little unhinged. Described as a “magnificent rogue” by one acquaintance, as a “pathological entrepreneur” by another, he made businesses faster than he made children — of whom, being devoted to excess in all its incarnations, he had eight. His businesses seldom lasted very long, and when they did survive did so without him at their helm, usually after he had been chased out of them in a cloud of acrimony and legal proceedings. A terrible businessman by most standards, he could nevertheless “raise money from the dead,” as one investor put it, thereby moving on to the next scheme while the previous was still going down in flames. Still, whatever else you could say about him, Bill von Meister had vision. Building the online societies of the future would require cockeyed dreamers like him just as much as it would sober tacticians like Jeff Wilkins.

Had an anonymous salesman who worked for Digital Equipment Corporation in 1968 been slightly less good at his job, CompuServe would most likely never have come to be.

The salesman in question had been assigned to a customer named John Goltz, fresh out of the University of Arizona and working now in Columbus, Ohio, for a startup. But lest the word “startup” convey a mistaken impression of young men with big dreams out to change the world, Silicon Valley-style, know that this particular startup lived within about the most unsexy industry imaginable: life insurance. No matter; from Goltz’s perspective anyway the work was interesting enough.

He found himself doing the work because Harry Gard, the founder of the freshly minted Golden United Life Insurance, wanted to modernize his hidebound industry, at least modestly, by putting insurance records online via a central computer which agents in branch offices could all access. He had first thought of giving the job to his son-in-law Jeff Wilkins, an industrious University of Arizona alumnus who had graduated with a degree in electrical engineering and now ran a successful burglar-alarm business of his own in Tucson. “The difference between electrical engineering and computing didn’t occur to him,” remembers Wilkins. “I told him that I didn’t know anything about computing, but I had a friend who did.” That friend was John Goltz, whose degree in computer science made him the more logical candidate in Wilkins’s eyes.

Once hired, Goltz contacted DEC to talk about buying a PDP-9, a sturdy and well-understood machine that should be perfectly adequate for his new company’s initial needs. But our aforementioned fast-talking salesman gave him the hard up-sell, telling him about the cutting-edge PDP-10 he could lease for only “a little more.” Like the poor rube who walks into his local Ford dealership to buy a Focus and drives out in a Mustang, Goltz, a hacker at heart, couldn’t resist the lure of DEC’s 36-bit hot rod. He repeated the salesman’s pitch almost verbatim to his boss, and Gard, not knowing a PDP-10 from a PDP-9 from a HAL 9000, said fine, go for it. Once his dream machine was delivered and installed in a former grocery store, Goltz duly started building the online database for which he’d been hired.

The notoriously insular life-insurance market was, however, a difficult nut to crack. Orders came in at a trickle, and Goltz’s $1 million PDP-10 sat idle most of the time. It was at this point, looking for a way both to make his computer earn its keep and to keep his employer afloat, that Goltz proposed that Golden United Life Insurance enter into the non-insurance business of selling time-shared computer cycles. Once again, Gard told him to go for it; any port in a storm and all that.

At the dawn of the 1970s, time-sharing was the hottest buzzword in the computer field. Over the course of the 1950s and 1960s, the biggest institutions in the United States — government bureaucracies, banks, automobile manufacturers and other heavy industries — had all gradually been computerized via hulking mainframes that, attended by bureaucratic priesthoods of their own and filling entire building floors, chewed through and spat out millions of records every day. But that left out the countless smaller organizations who could make good use of computers but had neither the funds to pay for a mainframe’s care and upkeep nor a need for more than a small fraction of its vast computing power. DEC, working closely with university computer-science departments like that of MIT, had been largely responsible for the solution to this dilemma. Time-sharing, enabled by a new generation of multi-user, multitasking operating systems like DEC’s TOPS-10 and an evolving telecommunications infrastructure that made it possible to link up with computers from remote locations via dumb terminals, allowed computer cycles and data storage to be treated as a commodity. A business or other organization, in other words, could literally share time on a remote computer system with others, paying for only the cycles and storage they actually used. (If you think that all this sounds suspiciously like the supposedly modern innovation of “cloud computing,” you’re exactly right. In technology as in life, a surprising number of things are cyclical, with only the vocabulary changing.)

John Goltz possessed a keen technical mind, but he had neither the aptitude nor the desire to run the business side of Golden United’s venture into time-sharing. So, Harry Gard turned once again to his son-in-law. “I liked what I was doing in Arizona,” Jeff Wilkins says. “I enjoyed having my own company, so I really didn’t want to come out.” Finally, Gard offered him $1.5 million in equity, enough of an eye-opener to get him to consider the opportunity more seriously. “I set down the ground rules,” he says. “I had to have complete control.” In January of 1970, with Gard having agreed to that stipulation, the 27-year-old Jeff Wilkins abandoned his burglar-alarm business in Tucson to come to Columbus and run a new Golden United subsidiary which was to be called Compu-Serv.

With time-sharing all the rage in computer circles, it was a tough market they were entering. Wilkins remembers cutting his first bill to a client for all of $150, thinking all the while that it was going to take a lot of bills just like it to pay for this $1 million computer. But Compu-Serv was blessed with a steady hand in Wilkins himself and a patient backer with reasonably deep pockets in his father-in-law. Wilkins hired most of his staff out of big companies like IBM and Xerox. They mirrored their young but very buttoned-down boss, going everywhere in white shirt and tie, lending an aura of conservative professionalism that belied the operation’s small size and made it attractive to the business establishment. In 1972, Compu-Serv turned the corner into a profitability that would last for many, many years to come.

In the beginning, they sold nothing more than raw computer access; the programs that ran on the computers all had to come from the clients themselves. As the business expanded, though, Compu-Serv began to offer off-the-shelf software as well to suit the various industries they found themselves serving. They began, naturally enough, with the “Life Insurance Data Information System,” a re-purposing of the application Goltz had already built for Golden United. Expanding the reach of their applications from there, they cultivated a reputation as a full-service business partner rather than a mere provider of a commodity. Most importantly of all, they invested heavily in their own telecommunications infrastructure, which existed in parallel with the nascent Internet and other early networks, using lines leased from AT&T and a system of routers — actually, DEC minicomputers running software of their own devising — for packet-switching. From their first handful of clients in and around Columbus, Compu-Serv thus spread their tendrils all over the country. They weren’t the cheapest game in town, but for the risk-averse businessperson looking for a full-service time-sharing provider with a fast and efficient network, they made for a very appealing package.

In 1975, Compu-Serv was spun off from the moribund Golden United Life Insurance, going public with a NASDAQ listing. Thus freed at last, the child quickly eclipsed the parent; the first stock split happened within a year. In 1977, Compu-Serv changed their name to CompuServe. By this point, they had more than two dozen offices spread through all the major metropolitan areas, and that one PDP-10 in a grocery store had turned into more than a dozen machines filling two data centers near Columbus. Their customer roll included more than 600 businesses. By now, even big business had long since come to see the economic advantages time-sharing offered in many scenarios. CompuServe’s customers included Fortune 100 giants like AMAX (the largest miner of aluminum, coal, and steel in the country), Goldman Sachs, and Owens Corning, along with government agencies like the Department of Transportation. “CompuServe is one of the best — if not the best — time-sharing companies in the country,” said AMAX’s director of research.

The process that would turn this corporate data processor of the 1970s into the most popular consumer online service of the 1980s was born out of much the same reasoning that had spawned it in the first place. Once again, it all came down to precious computer cycles that were sitting there unused. To keep their clients happy, CompuServe was forced to make sure they had enough computing capacity to meet peak-hour demand. This meant that the majority of the time said capacity was woefully underutilized; the demand for CompuServe’s computer cycles was an order of magnitude higher during weekday working hours than it was during nights, evenings, and weekends, when the offices of their corporate clients were deserted. This state of affairs had always rankled Jeff Wilkins, nothing if not a lover of efficiency. Yet it had always seemed an intractable problem; it wasn’t as if they could ask half their customers to start working a graveyard shift.

Come 1979, though, a new development was causing Wilkins to wonder if there might in fact be a use for at least some of those off-hour cycles. The age of personal computing was in the offing. Turnkey microcomputers were now available from Apple, Commodore, and Radio Shack. The last company alone was on track to sell more than 50,000 TRS-80s before the end of the year, and many more models from many more companies were on the way. The number of home-computer hobbyists was still minuscule by any conventional standard, but it could, it seemed to Wilkins, only grow. Might some of those hobbyists be willing and able to dial in and make use of CompuServe’s dearly bought PDP-10 systems while the business world slept? If so, who knew what it might turn into?

It wasn’t as if a little diversity would be a bad thing. While CompuServe was still doing very well on the strength of their fine reputation — they would bill their clients for $19 million in 1979 — the time-sharing market in general was showing signs of softening. The primary impetus behind it — the sheer expense of owning one’s own computing infrastructure — was slowly bleeding away as minicomputers like the DEC PDP-11, small enough to shove away in a closet somewhere rather than requiring a room or a floor of its own, became a more and more cost-effective solution. Rather than a $1 million proposition, as it had been ten years ago, a new DEC system could now be had for as little as $150,000. Meanwhile a new piece of software called VisiCalc — the first spreadsheet program ever, at least as the modern world understands that term — would soon show that even an early, primitive microcomputer could already replace a time-shared terminal hookup in a business’s accounting department. And once entrenched in that vital area, microcomputers could only continue to spread throughout the corporation.

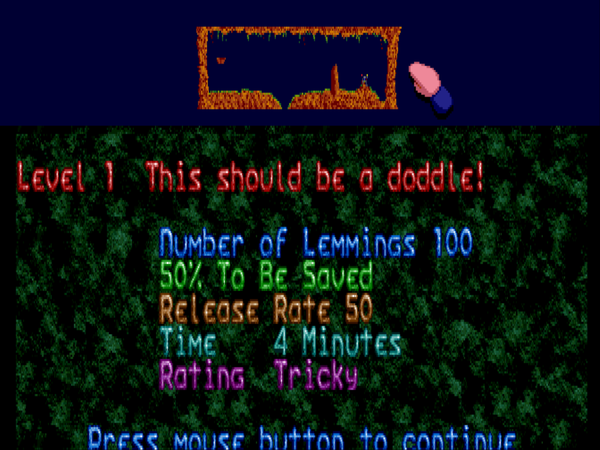

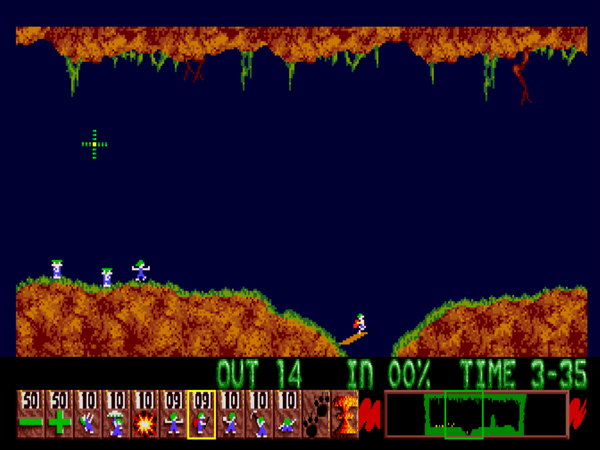

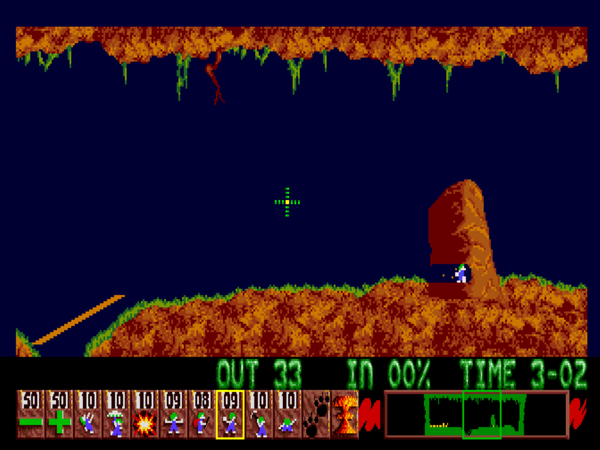

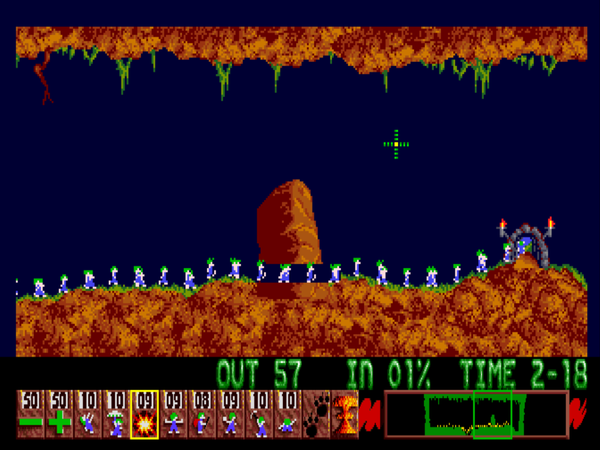

Still, the consumer market for online services, if it existed, wasn’t worth betting CompuServe’s existing business model on. Wilkins entered this new realm, as he did most things, with cautious probity. The new service would be called MicroNET so as to keep it from damaging the CompuServe brand in the eyes of their traditional customers, whether because it became a failure or just because of the foray into the untidy consumer market that it represented. And it would be “market-driven” rather than “competition-driven.” In Wilkins’s terminology, this meant that they would provide some basic time-sharing infrastructure — including email and a bulletin board for exchanging messages, a selection of languages for writing and running programs, and a suite of popular PDP-10 games like Adventure and Star Trek — but would otherwise adopt a wait-and-see attitude on adding customized consumer services, letting the market — i.e., all those hobbyists dialing in from home — do what they would with the system in the meantime.

Even with all these caveats, he had a hard time selling the idea to his board, who were perfectly happy with the current business model, thank you very much, and who had the contempt for the new microcomputers and the people who used them that was shared by many who had been raised on the big iron of DEC and IBM. They took to calling his idea “schlock time-sharing.”

Mustering all his powers of persuasion, Wilkins was able to overrule the naysayers sufficiently to launch a closed trial. On May 1, 1979, CompuServe quietly offered free logins to any members of the Midwest Affiliation of Computer Clubs, headquartered right there in Columbus, who asked for them. With modems still a rare and pricey commodity, it took time to get MicroNET off the ground; Wilkins remembers anxiously watching the connectivity lights inside the data center during the evenings, and seeing them remain almost entirely dimmed. But then, gradually, they started blinking.

After exactly two months, with several hundred active members having proved to Wilkins’s satisfaction that a potential market existed, he made MicroNET an official CompuServe service, open to all. To the dissatisfaction of his early adopters, that meant they had to start paying: a $30 signup charge, followed by $5 per hour for evening and weekend access, $12 per hour if they were foolish enough to log on during the day, when CompuServe’s corporate clients needed the machines. To the satisfaction of Wilkins, most of his early adopters grumbled but duly signed up, and they were followed by a slow but steady trickle of new arrivals. The service went entirely unadvertised, news of its existence spreading among computer hobbyists strictly by word of mouth. MicroNET was almost literally nothing in the context of CompuServe’s business as a whole — it would account for roughly 1 percent of their 1979 revenue, less than many of their larger individual corporate accounts — yet it marked the beginning of something big, something even Wilkins couldn’t possibly anticipate.

But MicroNET didn’t stand alone. Even as one online service was getting started in about the most low-key fashion imaginable, another was making a much more high-profile entrance. It was fortunate that Wilkins chose to see MicroNET as “market-driven” rather than “competition-driven.” Otherwise, he wouldn’t have been happy to see his thunder being stolen by The Source.

Like Jeff Wilkins, Bill von Meister was 36 years old. Unlike Wilkins, he already had on his resume a long string of entrepreneurial failures to go along with a couple of major successes. An unapologetic epicurean with a love for food, wine, cars, and women, he had been a child not just of privilege but of aristocracy, his father a godson of the last German kaiser, his mother an Austrian countess. His parents had immigrated to New York in the chaos that followed World War I, when Germany and Austria could be uncomfortable places for wealthy royalty, and there his father had made the transition from landed aristocrat to successful businessman with rather shocking ease. Among other ventures, he became a pivotal architect of the storied Zeppelin airship service between Germany and the United States — although the burning of the Hindenburg did rather put the kibosh on that part of his portfolio, as it did passenger-carrying airships in general.

The son inherited at least some of the father’s acumen. Leveraging his familial wealth alongside an unrivaled ability to talk people into giving him money — one friend called him the best he’d ever seen at “taking money from venture capitalists, burning it all up, and then getting more money from the same venture capitalists” — the younger von Meister pursued idea after idea, some visionary, some terrible. By 1977, he had hit pay dirt twice already in his career, once when he created what was eventually branded as Western Union’s “Mailgram” service for sending a form of electronic mail well before computer email existed, once when he created a corporate telephone service called Telemax. Unfortunately, the money he earned from these successes disappeared as quickly as it poured in, spent to finance his high lifestyle and his many other, failed entrepreneurial projects.

Late in 1977, he founded Digital Broadcasting Corporation in Fairfax County, Virginia, to implement a scheme for narrow-casting digital data using the FM radio band. “Typical uses,” ran the proposal, “would include price information for store managers in a retail chain, bad-check information to banks, and policy information to agents of an insurance company.” Von Meister needed financing to bring off this latest scheme, and he needed a factory to build the equipment that would be needed. Luckily, a man who believed he could facilitate both called him one day in the spring of 1978 after reading a description of his plans in Business Week.

Jack Taub had made his first fortune as the founder of Scott Publishing, known for their catalogs serving the stamp-collecting hobby. Now, he was so excited by von Meister’s scheme that he immediately bought into Digital Broadcasting Corporation to the tune of $500,000 of much-needed capital, good for a 42.5 percent stake. But every bit as important as Taub’s personal fortune were the connections he had within the federal government. By promising to build a factory in the economically disadvantaged inner city of Charlotte, North Carolina, he convinced the Commerce Department’s Economic Development Administration to guarantee 90 percent of a $6 million bank loan from North Carolina National Bank, under a program meant to channel financing into job-creating enterprises.

Unfortunately, the project soon ran into serious difficulties with another government agency: the Federal Communications Commission, who noted pointedly that the law which had set aside the FM radio band had stipulated it should be reserved for applications “of interest to the public.” Using it to send private data, many officials at the FCC believed, wasn’t quite what the law’s framers had had in mind. And while the FCC hemmed and hawed, von Meister was fomenting chaos within the telecommunications and broadcasting industries at large by claiming his new corporation’s name gave him exclusive rights to the term “digital broadcasting,” a modest buzzword of its own at the time. His legal threats left a bad taste in the mouth of many a potential partner, and the scheme withered away under the enormous logistical challenges getting such a service off the ground must entail. The factory which the Commerce Department had so naively thought they were financing never opened, but Digital Broadcasting kept what remained of the money they had received for the purpose.

They now planned to use the money for something else entirely. Von Meister and Taub had always seen business-to-business broadcasting as only the first stage of their company’s growth. In the longer term, they had envisioned a consumer service which would transmit and even receive information — news and weather reports, television listings, shopping offers, opinion polls, etc. — to and from terminals located in ordinary homes. When doing all this over the FM radio band began to look untenable, they had cast about for alternative approaches; they were, after all, still flush with a fair amount of cash. It didn’t take them long to take note of all those TRS-80s and other home computers that were making their way into the homes of early adopters. Both Taub and von Meister would later claim to have been the first to suggest a pivot from digital broadcasting to a microcomputer-oriented online information utility. In the beginning, they called it CompuCom.

The most obvious problem CompuCom faced — its most obvious disadvantage in comparison to CompuServe’s MicroNET — was the lack of a telecommunications network of its own. Once again, both Taub and von Meister would later claim to have been the first to see the solution. One or the other or both took note of another usage inequality directly related to the one that had spawned MicroNET. Just as the computers of time-sharing services like CompuServe sat largely idle during nights and weekends, traffic on the telecommunications lines corporate clients used to connect to them was also all but nonexistent more than half of the time. Digital Broadcasting came to GTE Telenet with an offer to lease this idle bandwidth at a rate of 75¢ per connection per hour, a dramatically reduced price from that of typical business customers. GTE, on the presumption that something was better than nothing, agreed. And while they were making the deal to use the telecommunications network, von Meister and Taub also made a deal with GTE Telenet to run the new service on the computers in the latter’s data centers, using all that excess computing power that lay idle along with the telecommunications bandwidth on nights and weekends. Because they needed to build no physical infrastructure, von Meister and Taub believed that CompuCom could afford to be relatively cheap during off-hours; the initial pricing plan stipulated just $2.75 per hour during evenings and weekends, with a $100 signup fee and a minimum monthly charge of $10.
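The two services' off-hours price lists can be compared with a little arithmetic. The following is only a rough sketch in Python, using the figures quoted in this article: MicroNET's $30 signup and $5 per hour, and CompuCom's $100 signup, $2.75 per hour, and $10 monthly minimum. It assumes, without confirmation from either company's actual billing rules, that the monthly minimum acts as a floor on hourly usage charges and that MicroNET had no such minimum. The names `MICRONET`, `COMPUCOM`, and `first_month_cost` are invented for illustration.

```python
# Off-hours pricing as quoted in the article. The "monthly_minimum" of 0 for
# MicroNET is an assumption: no minimum is mentioned for that service.
MICRONET = {"signup": 30.00, "off_peak_hourly": 5.00, "monthly_minimum": 0.00}
COMPUCOM = {"signup": 100.00, "off_peak_hourly": 2.75, "monthly_minimum": 10.00}


def monthly_usage_charge(plan: dict, hours: float) -> float:
    """Hourly charges for one month, floored at the plan's monthly minimum.

    Treating the minimum as a floor is an assumption about how it was billed.
    """
    return max(plan["monthly_minimum"], plan["off_peak_hourly"] * hours)


def first_month_cost(plan: dict, hours: float) -> float:
    """Signup fee plus the first month's usage charge."""
    return plan["signup"] + monthly_usage_charge(plan, hours)


if __name__ == "__main__":
    for hours in (1, 10, 40):
        m = first_month_cost(MICRONET, hours)
        c = first_month_cost(COMPUCOM, hours)
        print(f"{hours:>2} off-peak hours: MicroNET ${m:.2f}, CompuCom ${c:.2f}")
```

Under these assumptions, the higher signup fee makes CompuCom the more expensive choice for a light first month, while the lower hourly rate only pays off for subscribers who spend a good deal more than thirty hours online in that month.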

For all the similarities in their way of taking advantage of the time-sharing industry’s logistical quirks, not to mention their shared status as the pioneers of much of modern online life, there were important differences between the nascent MicroNET and CompuCom. From the first, von Meister envisioned his service not just as a provider of computer access but as a provider of content. The public-domain games that were the sum total of MicroNET’s initial content were only the beginning for him. Mirroring its creator, CompuCom was envisioned as a service for the well-heeled Playboy- and Sharper Image-reading technophile lounge lizard, with wine lists, horoscopes, entertainment guides for the major metropolitan areas, and an online shopping mall. In a landmark deal, von Meister convinced United Press International, one of the two providers of raw news wires to the nation’s journalistic infrastructure, to offer their feed through CompuCom as well — unfiltered, up-to-the-minute information of a sort that had never been available to the average consumer before. The New York Times provided a product-information database, Prentice Hall provided tax information, and Dow Jones provided a stock ticker. Von Meister contracted with the French manufacturer Alcatel for terminals custom-made just for logging onto CompuCom, perfect for those wanting to get in on the action who weren’t interested in becoming computer nerds in the process. For the same prospective customers, he insisted that the system, while necessarily all text given the state of the technology of the time, be navigable via multiple-choice menus rather than an arcane command line.

In the spring of 1979, just before the first trials began, CompuCom was renamed The Source; the former name sounded dangerously close to “CompuCon,” a disadvantage that was only exacerbated by the founder’s checkered business reputation. The service officially opened for business, eight days after MicroNET had done the same, with that July 9 press conference featuring Isaac Asimov and the considerable fanfare it generated. Indeed, the press notices were almost as ebullient as The Source’s own advertising, with the Wall Street Journal calling it “an overnight sensation among the cognoscenti of the computing world.” Graeme Keeping, a business executive who would later be in charge of the service but was at this time just another outsider looking in, had this to say about those earliest days:

> The announcement was made with the traditional style of the then-masters of The Source. A lot of fanfare, a lot of pizazz, a lot of sizzle. There was absolutely no substance whatsoever to the announcement. They had nothing to back it up with.
>
> Electronic publishing was in its infancy in those days. It was such a romantic dream that there never had to be a product in order to generate excitement. Nobody had to see anything real. People wanted it so badly, like a cure for cancer. We all want it, but is it really there? I equate it to Laetrile.

While that is perhaps a little unfair — there were, as we’ve just seen, several significant deals with content providers in place before July of 1979 — it was certainly true that the hype rather overwhelmed the comparatively paltry reality one found upon actually logging into The Source.

Nevertheless, any comparison of The Source and MicroNET at this stage would have to place the former well ahead in terms of ambition, vision, and public profile. That distinction becomes less surprising when we consider that what was a side experiment for Jeff Wilkins was the whole enchilada for von Meister and Taub. For the very same reason, any neutral observer forced to guess which of these two nascent services would rise to dominance would almost certainly have gone with The Source. Such a reckoning wouldn’t have accounted, however, for the vortex of chaos that was Bill von Meister.

It was the typical von Meister problem: he had built all this buzz by spending money he didn’t have — in fact, by spending so much money that to this day it’s hard to figure out where it could all possibly have gone. As of October of 1979, the company had $1000 left in the bank and $8 million in debt. Meanwhile The Source itself, despite all the buzz, had managed to attract at most a couple of thousand actual subscribers. It was, after all, still very early days for home computers in general, modems were an even more exotic species, and the Alcatel terminals had yet to arrive from France, being buried in some transatlantic bureaucratic muddle.

By his own later account, Jack Taub had had little awareness over the course of the last year or so of what von Meister was doing with the company’s money, being content to contribute ideas and strategic guidance and let his partner handle day-to-day operations. But that October he finally sat down to take a hard look at the books. He would later pronounce the experience of doing so “an assault on my system. Von Meister is a terrific entrepreneur, but he doesn’t know when to stop entrepreneuring. The company was in terrible shape. It was not going to survive. Money was being spent like water.” With what he considered to be a triage situation on his hands, Taub delivered an ultimatum to von Meister. He would pay him $3140 right now — 1¢ for each of his shares — and would promise to pay him another dollar per share in three years if The Source was still around then. In return, von Meister would walk away from the mess he had created, escaping any legal action that might otherwise become a consequence of his gross mismanagement. According to Taub’s account, von Meister agreed to these terms with uncharacteristic meekness, leaving his vision of The Source as just one more paving stone on his boulevard of broken entrepreneurial dreams, and leaving Taub to get down to the practical business of saving the company. “I think if I had waited another week,” the latter would later say, “it would have been too late.”

As it was, Digital Broadcasting teetered on the edge of bankruptcy for months, with Taub scrambling to secure new lines of credit to keep the existing creditors satisfied and, when all else failed, injecting more of his own money into the company. Through it all, he still had to deal with von Meister, who, as any student of his career to date could have predicted, soon had second thoughts about going away quietly — if, that is, he’d ever planned to do so in the first place. Taub learned that von Meister had taken much of Digital Broadcasting’s proprietary technology out the door with him, and was now shopping it around the telecommunications industry; that sparked a lawsuit on Taub’s part. Von Meister claimed his ejection had been illegal; that sparked another, going in the opposite direction. Apparently concluding that his promise not to sue von Meister for his mismanagement of the company was thus nullified, Taub counter-sued with exactly that charge. With a vengeful von Meister on his trail, he said that he couldn’t afford to “sleep with both eyes closed.”

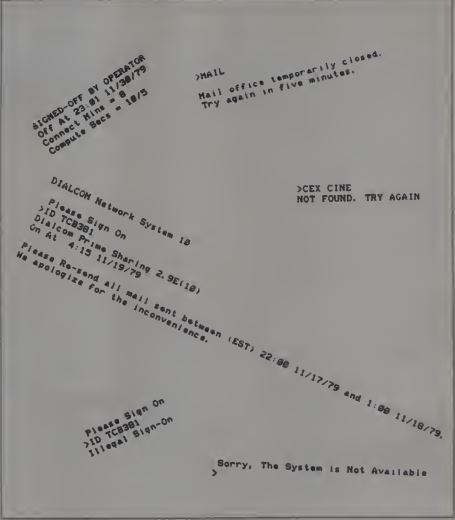

By March of 1980, The Source had managed to attract about 3000 subscribers, but the online citizens were growing restless. Many features weren’t quite as advertised. The heavily hyped nightlife guides, for instance, mostly existed only for the Washington Beltway, the home of The Source. The email system was down about half the time, and even when it was allegedly working it was anyone’s guess whether a message that was sent would actually be delivered. Failings like these could be attributed easily enough to the usual technical growing pains, but other complaints carried with them an implication of nefarious intent. The Source’s customers could read the business pages of the newspaper as well as anyone, and knew that Jack Taub was fighting for his company’s life on multiple fronts. In that situation, some customers reasoned, there would be a strong incentive to find ways to bill them just that little bit more. Thus there were dark accusations that the supposedly user-friendly menu system had been engineered to be as verbose and convoluted as possible in order to maximize the time users spent online just trying to get to where they wanted to go. On a 110- or 300-baud connection — for comparison purposes, consider that a good touch typist could approach the former rate — receiving all these textual menus could take considerable time, especially given the laggy response time of the system as a whole whenever more than a handful of people were logged on. And for some reason, a request to log off the system in an orderly way simply didn’t work most of the time, forcing users to break the connection themselves. After they did so, it would conveniently — conveniently for The Source’s accountants, that is — take the system five minutes or so to recognize their absence and stop charging them.
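To put those line speeds in perspective, here is a rough back-of-the-envelope sketch in Python. It rests on several assumptions that do not come from The Source itself: that a 110-baud link spends 11 bits on each character and a 300-baud link spends 10 (common asynchronous serial framings of the era), that a "word" is five characters (the usual typing-speed convention), and that a menu screen runs to 500 characters (a purely hypothetical figure).

```python
# Assumed bits on the wire per character, including start/stop bits.
# These framings were common at the time but are assumptions here.
BITS_PER_CHAR = {110: 11, 300: 10}

MENU_CHARS = 500  # hypothetical size of one textual menu screen


def chars_per_second(baud: int, bits_per_char: int) -> float:
    """Characters delivered per second at a given line speed."""
    return baud / bits_per_char


def words_per_minute(cps: float, chars_per_word: float = 5.0) -> float:
    """Convert characters per second to the typist's words-per-minute measure."""
    return cps * 60 / chars_per_word


def seconds_to_receive(n_chars: int, baud: int, bits_per_char: int) -> float:
    """Time to receive n_chars of text, ignoring any server-side lag."""
    return n_chars / chars_per_second(baud, bits_per_char)


if __name__ == "__main__":
    for baud, bits in BITS_PER_CHAR.items():
        cps = chars_per_second(baud, bits)
        wpm = words_per_minute(cps)
        secs = seconds_to_receive(MENU_CHARS, baud, bits)
        print(f"{baud} baud: {cps:.0f} chars/s, about {wpm:.0f} wpm, "
              f"{secs:.0f} s for a {MENU_CHARS}-character menu")
```

Under these assumptions, 110 baud works out to 10 characters per second, or roughly 120 words per minute, and a single 500-character menu takes 50 seconds to arrive before any system lag is added.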

The accusations of nefarious intent were, for what it’s worth, very unlikely to have had any basis in reality. Jack Taub was a hustler, but he wasn’t a con man. On the contrary, he was earnestly trying to save a company whose future he deeply believed in. His biggest problem was the government-secured loan, on which Digital Broadcasting Corporation had by now defaulted, forcing the Commerce Department to pay $3.2 million to the National Bank of North Carolina. The government bureaucrats, understandably displeased, were threatening to seize his company and dismantle it in the hope of getting at least some of that money back. Their motivation was only sharpened by the fact that the whole affair had leaked into the papers, with the Washington Post in particular treating it as a minor public scandal, an example of Your Tax Dollars at Waste.

Improvising like mad, Taub convinced the government to allow him to make a $300,000 down payment, and thereafter to repay the money he owed over a period of up to 22 years at an interest rate of just 2 percent. Beginning in 1982, the company, now trading as The Source Telecomputing Corporation rather than Digital Broadcasting Corporation, would have to repay either $50,000 or 10 percent of their net profit each year, whichever was greater; beginning in 1993, the former figure would rise to $100,000 if the loan still hadn’t been repaid. “The government got a good deal,” claimed Taub. “They get 100 cents on the dollar, and get their money back faster if I’m able to do something with the company.” While some might have begged to differ with his characterization of the arrangement as a “good deal,” it was, the government must have judged, the best it was likely to get under the circumstances. “The question is to work out some kind of reasonable solution where you recover something rather than nothing,” said one official familiar with the matter. “While it sounds like they’re giving it away, they already did that. They already made their mistake with the original loan.”
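The annual repayment rule described above reduces to a small piece of arithmetic, sketched here in Python. This is only an illustration of the "whichever was greater" clause as reported: the function name is hypothetical, and the sketch deliberately ignores the 2 percent interest, the $300,000 down payment, and the outstanding balance.

```python
def minimum_annual_payment(year: int, net_profit: float) -> float:
    """Payment owed for a given year under the reported terms.

    Assumption: only the "greater of a fixed floor or 10 percent of net
    profit" rule is modeled; interest and remaining balance are ignored.
    """
    if year < 1982:
        return 0.0  # scheduled repayments began in 1982
    # The fixed floor doubles from 1993 onward if the loan is still unpaid.
    floor = 100_000.0 if year >= 1993 else 50_000.0
    return max(floor, net_profit / 10)


if __name__ == "__main__":
    # A lean year: 10 percent of profit falls below the floor.
    print(minimum_annual_payment(1982, 200_000))
    # A strong year: 10 percent of profit exceeds the floor.
    print(minimum_annual_payment(1985, 2_000_000))
```

The floor matters most in bad years: with no profit at all, the company would still owe the fixed amount.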

With the deal with the Commerce Department in place, Taub turned to The Readers Digest Association, publisher of the most popular magazine in the world, who were eager to get in on the ground floor of what was being billed in some circles as the next big thing in media. In September of 1980, he convinced them to buy 51 percent of The Source for $3 million, thus securing desperately needed operating capital. But when, shortly thereafter, a judge ruled in favor of von Meister on the charge that he had been unlawfully forced out of the company, Taub was left scrambling once again. He was forced to go back to Readers Digest, convincing them this time to increase their stake to 80 percent, leaving only the remaining 20 percent in his own hands. And with that second capital injection to hand, he convinced von Meister to lay the court battle to rest with a settlement check for $1 million.

The Source had finally attained a measure of stability, and Jack Taub’s extended triage could thus come to an end at last. Along the way, however, he had maneuvered himself out of his controlling interest and, soon, out of a job. Majority ownership having its privileges, Readers Digest elected to replace him with one of their own: Graeme Keeping, the executive who had lobbied hardest to buy The Source in the first place. “Any publisher today, if he doesn’t get into electronic publishing,” Keeping was fond of saying, “is either going to be forced into it by economic circumstances or will have great difficulty staying in the paper-and-ink business.”

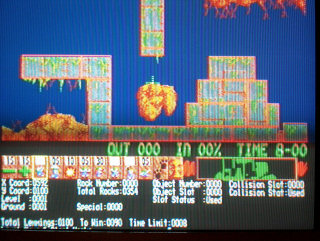

The Source’s Prime computer systems, a millstone around their neck for years (although the monkey does seem to be enjoying them).

The Source may have found a safe harbor with one of the moneyed giants of American media, but it would never regain its early mojo. Keeping proved to be less than the strategic mastermind he believed himself to be, with a habit of over-promising and under-delivering, and, worse, of making terrible choices based on his own overoptimistic projections. The worst example of this tendency came early in his tenure, in the spring of 1981, when he was promising the New York Times he would have 60,000 subscribers by 1982. Determined to make sure he had the computing capacity to meet the demand, he cancelled the contract to use GTE Telenet’s computing facilities, opening his own data center instead and filling it with his own machines. At a stroke, this destroyed a key part of the logistical economies which had done so much to spawn The Source (and, for that matter, CompuServe’s MicroNET) in the first place. The Source’s shiny new computers now sat idle during the day with no customers to service. Come 1982, The Source had only 20,000 subscribers, and all those expensive computers were barely ticking over even at peak usage. This move alone cost The Source millions. Meanwhile, the deal with Alcatel for custom-made terminals having fallen through during the chaos of Taub’s tenure, Keeping made a new one with Zenith to make “a semi-intelligent terminal with a hole in the back through which you can turn it into a computer.” That impractical flight of fancy also came to naught, but not before costing The Source more money. Such failures led to Keeping’s ouster in June of 1982; he was replaced by George Grune, another anodyne chief from the Readers Digest executive pool.

Soon after, Control Data Corporation, a maker of supercomputers, bought a 30 percent share of The Source for a reported $5 million. But even this latest injection of capital, technical expertise, and content (Control Data would eventually move much of their pioneering Plato educational network onto the service) changed little. The Source went through three more chief executives in the next two years. The user roll continued to grow, finally reaching 60,000 in September of 1984 (some two and a half years after Graeme Keeping’s prediction, for those keeping score), but the company perpetually lost money; it was perpetually about to turn the corner into mainstream acceptance and profitability, but never actually did. Thanks not least to Keeping’s data-center boondoggle, the rate for non-prime usage had risen to $7.75 per hour by 1984, making this onetime pioneer, which now felt more and more like an also-ran, a hard sell in terms of dollars and cents as well. Neither the leading name in the online-services industry nor the one with the deepest pockets (there were limits to Readers Digest’s largess), The Source struggled to attract third-party content. A disturbing number of those 60,000 subscribers rarely or never logged on, paying only the minimum monthly charge of $10. One analyst noted that well-heeled computer owners “apparently are willing to pay to have these electronic services available, even if they don’t use them regularly. From a business point of view, that’s a formula for survival, but not for success.”

The Source was fated to remain a survivor but never a real success for the rest of its existence. Back in Columbus, however, CompuServe’s consumer offering was on a very different trajectory. Begun in such a low-key way that Jeff Wilkins had refused even to describe it as being in competition with The Source, CompuServe’s erstwhile MicroNET — now re-branded as simply CompuServe, full stop — was going places of which its rival could only dream. Indeed, one might say it was going to the very places of which Bill von Meister had been dreaming in 1979.

(Sources: the books On the Way to the Web: The Secret History of the Internet and its Founders by Michael A. Banks and Stealing Time: Steve Case, Jerry Levin, and the Collapse of AOL Time Warner by Alec Klein; Creative Computing of March 1980; InfoWorld of April 14 1980, May 26 1980, January 11 1982, May 24 1982, and November 5 1984; Wall Street Journal of November 6 1979; Online Today of July 1989; 80 Microcomputing of November 1980; The Intelligent Machines Journal of March 14 1979 and June 25 1979; Washington Post of May 11 1937, July 10 1978, February 10 1980, and November 4 1980; Alexander Trevor’s brief technical history of CompuServe, which was first posted to Usenet in 1988; interviews with Jeff Wilkins from the Internet History Podcast and Conquering Columbus.)