IBM’s greatest triumph was inextricably linked with what by 1986 was turning into their biggest problem. Following its introduction five years before, the IBM PC had remade the face of corporate computing in its image, legitimizing personal computing in the eyes of the Fortune 500 and all those smaller companies who dreamed of someday joining their ranks. The ecosystem that surrounded the IBM PC and its successors was now worth countless billions, the greatest story of American business success of them all to play out during Ronald Reagan’s storied Morning in America.

The problem, at least as IBM and many of their worried stockholders perceived it, was that they now seemed on the verge of losing control of the very standard they had created. A combination of the decisions that had allowed the original IBM PC to become a standard in the first place — its simple, workmanlike design that utilized only off-the-shelf components; the scrupulously thorough documentation of said design; the decision to outsource the machine’s operating system to Microsoft, a third party all too willing to license the same operating system to other parties as well — had led to a thriving market in so-called “clone” machines whose combined revenues now far exceeded IBM’s personal-computer sales. IBM believed that the clonesters were lifting billions out of their pockets every year, even as they saw their own sales, which had broken record after record in the first few years following the IBM PC’s launch, beginning to show signs of stagnation.

Compaq of Houston, Texas, the most aggressive and innovative of the clonesters, had first begun to collect for themselves a reputation to rival IBM’s own with their very first product back in 1983, a portable — or, perhaps better said, “luggable” — all-in-one IBM-compatible. The Compaq Portable had forced IBM for the first time to play catch-up with a personal-computing rival, rushing to market a luggable of their own. To make matters worse, the IBM version of portable computing had proved far less practical than the Compaq, as many a reviewer wasn’t shy about pointing out.

Now, in 1986, Compaq threatened to wrangle away from IBM the mantle of technological leadership via a machine that represented a more fundamental advance than a new form factor. After hearing that IBM didn’t have any immediate plans to release a machine built around the Intel 80386, a new 32-bit processor that was sending waves of excitement rippling through the industry, Compaq decided to push ahead with a 386-based machine of their own — right now, this very year. The public launch of the Compaq Deskpro 386 on September 9, 1986 — almost exactly five years after the debut of the original IBM PC — was another watershed moment, the first time one of the clonesters had released a machine more powerful than anything in IBM’s stable. Compaq’s CEO Rod Canion, never a shrinking violet under any circumstances, outdid himself at the launch, declaring the Deskpro 386 “the third generation of the personal-computer revolution” after the Apple II and the IBM PC, thus implicitly placing his own Compaq on a par with those two storied companies.

The clone market was getting so big that there seemed a danger that the clones wouldn’t be dismissed under that selfsame moniker much longer. People in the business world were beginning to replace the phrase “IBM clone” with phrases like “the MS-DOS standard” or “the Intel standard,” giving no credit to the company that had really created that standard. As was well attested by their checkered history of antitrust investigations and allegations of unfair competitive practices, IBM had never been known as a bastion of corporate generosity. It may not be exaggerating the case to say that they felt themselves to have a moral right to the PC standard they’d created, a right that encompassed not just an acknowledgement that said standard was still the IBM standard but also the ability to continue to steer every aspect of the further development of that standard. And by all rights the right should also encompass — and this was the sticking point that really irked — their fair share of all those billions that all those other companies were making from IBM’s standard.

In addition to furnishing what they saw as ample evidence of a need for them to reassert control of their industry, this period found IBM at another, more purely technical crossroads. The imminent move from 16-bit to 32-bit computing represented by the new 80386 would have to bring with it some elaborations on IBM’s tried-and-true architecture — elaborations that would undoubtedly define the face of mainstream business computing into the 1990s. IBM saw in those elaborations a way to remedy the ongoing problem of the clonesters as well. Unknown to everyone outside the company, they were about to initiate the so-called “bus wars,” a premeditated strike aimed directly at what they saw as parasites like Compaq.

The bus in this context referred not to a mode of public transportation but rather to the system of expansion slots that allowed the innermost core of an IBM-compatible computer — little more than the processor and memory — to communicate with just about everything else that made up a full-fledged PC: floppy and hard disk drives, monitors, modems, printers, ad infinitum, from the most generalized components found in just about every office to the most specialized for the most esoteric of tasks. The original IBM PC, built around a hybrid 8-bit and 16-bit chip called the Intel 8088, had used an 8-bit bus, meaning the electronic “channel” it used to talk to all these myriad devices was just 8 bits wide. In 1984, IBM had released the PC/AT, built around the newer fully 16-bit Intel 80286, and in that machine had expanded the original bus to support 16-bit devices while remaining backward compatible with the older 8-bit standard. The result retroactively came to be known as the Industry Standard Architecture, or ISA.

Now, with the 32-bit 80386 a reality, it was time to think about revisiting the bus again, to make it support 32-bit communications. To fail to do so would be to cripple the 386, forcing it to act like a 16-bit chip every time it wanted to communicate with a peripheral; impressive as they were in many ways, the Compaq Deskpro 386 and other early 386 clones saw their performance limited by exactly this problem. Most people expected IBM to do for the 386 what they had previously done for the 286, delivering a new bus which would support 32-bit peripherals but remain compatible with older 16-bit and even 8-bit devices. Instead they delivered something they called the Micro Channel Architecture, or MCA, a complete break with the past which accepted none of the existing 16-bit or 8-bit cards.
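To put a rough number on that crippling effect, here is a minimal sketch in Python. The helper `bus_transfers` is hypothetical, and the model is deliberately idealized: it counts only how many trips across the bus a value needs, ignoring wait states, alignment, and everything else about real bus timing.

```python
def bus_transfers(data_bits: int, bus_bits: int) -> int:
    """Idealized number of bus transfers needed to move data_bits
    across a bus that carries bus_bits at a time (rounded up)."""
    return (data_bits + bus_bits - 1) // bus_bits


# How many transfers does one 32-bit value need on each generation of bus?
for name, width in [("8-bit PC bus", 8), ("16-bit AT bus", 16), ("32-bit bus", 32)]:
    print(f"{name}: {bus_transfers(32, width)} transfer(s) per 32-bit value")
```

Under these assumptions a 386 talking to a peripheral over the 16-bit bus needs two transfers for every 32-bit value, which is the sense in which it was forced to "act like a 16-bit chip."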

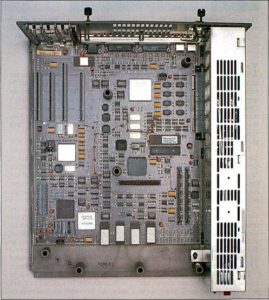

So much controversy over something barely noticeable. The four Micro Channel slots sit at the left rear of this PS/2 Model 50. Many of the components that would have been housed in expansion cards in earlier IBM systems, such as the video card and hard-drive controller, were moved onto the motherboard with the PS/2 line.

MCA debuted as a key component in a new line of personal computers in April of 1987, the most ambitious such IBM had ever or would ever introduce. The Personal System/2 lineup — better known as the PS/2 — was envisioned as exactly the next generation in personal computing that an ebullient Rod Canion had perhaps overenthusiastically declared the Compaq Deskpro 386 to represent barely six months before. IBM was determined to once again remake the computer industry in their image — and to get it right this time, avoiding the perceived mistakes that had led to the rise of the clonesters. The PS/2 lineup did encompass lower-end machines using the old 16-bit PC/AT bus, but the real point of the effort lay with the higher-end models, IBM’s first to use the 80386 and their first to use the new MCA bus architecture to take advantage of all of the 32 bits of throughput offered by that chip. IBM offered various technical justifications for the failure of MCA to support their older bus standards, but they always rang false. As the more astute industry observers quickly realized, MCA had more to do with business and marketing than it did with technology in the abstract.

IBM was attempting a delicate trick with MCA. They wanted to be able to continue to reap the enormous benefits of the business-computing standard they had birthed, with its huge constellation of compatible software that by now even more so than IBM’s reputation made an MS-DOS machine the only kind to be seriously considered by the vast majority of corporate purchasing departments. At the same time, though, they wanted to cut off the oxygen to the clonesters who were also benefiting so conspicuously from that same universal acceptance, and to reassert their role as the ultimate authorities on the direction business computing would take in the future. They believed they could accomplish all of that, in the long term at least, by threading the needle of compatibility — keeping the 386-based PS/2 lineup software-compatible with the older machines while deliberately breaking the hardware compatibility so relied on by the clonesters. In doing so, they would take the hardware to a place the clonesters couldn’t follow, thus securing for themselves all those billions the clonesters had heretofore been stealing out of their pockets.

Unlike the original IBM bus architecture, MCA was locked up inside an ironclad cage of patents, making it legally uncloneable unless one could somehow negotiate a license to do so through IBM. The patents even extended to add-on cards and other peripherals that might be compatible with MCA, meaning that absolutely anyone who wanted to make a hardware add-on for an MCA machine would have to negotiate a license and pay for the privilege. The result should be not only a lucrative new revenue stream but also complete control of business computing’s further evolution. Yes, the clonesters would be able to survive for a few more years making machines using the older 16-bit bus architecture. In the longer term, however, as personal computing inevitably transitioned into a realm of 32 bits, they would survive purely at IBM’s whim, their fate predicated on IBM’s willingness to grant them a patent license for MCA and their own willingness to pay dearly for it.

The clonesters rightly and immediately saw MCA as nothing less than an existential threat, and were thrown into a tizzy trying to figure out how to respond to it. It was the ever-quotable Rod Canion who came up with the best line of attack, drawing an analogy between MCA and the recent soft-drink marketing disaster of New Coke. (What with Pepsi alumnus John Sculley in charge over at Apple, computers and soft drinks seemed to be running oddly in parallel during this era.) Clever, pithy, and blessedly non-technical, Canion’s comparison spread like wildfire through the business press, regurgitated ad nauseam by journalists who often had little to no idea what this MCA thing that it referenced actually was. IBM never quite managed to formulate a response that didn’t sound nefariously evasive.

With the “New Coke” meme setting the tone, just about everything about the PS/2 line turned into an unexpected uphill struggle for IBM. While plenty of early reviewers dutifully toed the line, doubtless mindful that if no one ever got fired for buying IBM no one was likely to get fired for giving them a positive review either, a surprising number of the reviews were distinctly lukewarm. The complaints started and often ended with the prices. Even the low-end 16-bit PS/2 models started at a suggested list price of $2295 without monitor, while the high-end models topped out at almost $7000. Insider reports had it that IBM was enjoying profit margins of 40 percent or more, leading to rampant speculation on what the cost of entry into business-friendly personal computing might become if they really should manage to stamp out the clonesters.

The high-end models in particular struck many as a pointless waste of money given that IBM didn’t have an operating system ready to take advantage of their capabilities. The machines were all still saddled with MS-DOS, clunky and archaic and barely worthy of the name “operating system” even in the terms of 1987. In one of the more striking examples of hardware running away from software in computing history, the higher-end models shipped with 1 MB of memory, but couldn’t actually use more than 640 K of it thanks to MS-DOS’s built-in limitations. IBM promised a new, next-generation operating system called OS/2 to unlock the real potential of these next-generation machines. But OS/2, a project they had once again chosen to turn over to Microsoft, was still an unknown number of months away, with the so-called “Presentation Manager” that would add to it a Macintosh-style GUI due yet further months after that.[1] And, as a final little bit of buyer discouragement, IBM planned to charge the people who had already spent many thousands on their PS/2 hardware another $800 or so for the privilege of using the eventual OS/2 to take advantage of it.
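The 640 K ceiling mentioned above falls out of simple address arithmetic. The sketch below is my own gloss rather than anything from the sources: it assumes the conventional memory map of the original IBM PC, in which MS-DOS runs in the processor's 20-bit real mode and everything from address `0xA0000` upward is set aside for video memory and ROMs. The variable names are purely illustrative.

```python
KB = 1024

ADDRESS_BITS = 20          # address lines available in real mode
RESERVED_START = 0xA0000   # start of the area set aside for video memory and ROMs

address_space = 2 ** ADDRESS_BITS          # everything real mode can address
conventional = RESERVED_START              # what is left below the reserved area
reserved = address_space - RESERVED_START  # video memory, adapter ROMs, BIOS

print(f"addressable in real mode: {address_space // KB} K")
print(f"usable by DOS programs:   {conventional // KB} K")
print(f"reserved for hardware:    {reserved // KB} K")
```

The three figures come to 1024 K, 640 K, and 384 K respectively. The newer processors could address far more than this, but only in modes that MS-DOS did not use.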

The PS/2 launch prompted constant comparisons with the original IBM PC launch of five and a half years before, and constantly came up wanting. IBM’s publicity campaign was lavish — as it ought to have been, given those profit margins — but unfocused and uninspired. Its centerpiece was a series of commercials involving much of the cast from M*A*S*H, playing their old sitcom characters inexplicably transported from the Korean War to a modern office. With M*A*S*H still a beloved cultural touchstone only a few years removed from its record-shattering final episode, the spots had plenty of sheer star power, but lacked even a modicum of the charm or creativity that had characterized the award-winning “Charlie Chaplin” advertisements for the original IBM PC.

Likewise, it was hard not to compare the unexpected spirit of openness that had suffused the 1981 IBM PC with the domination and control IBM so plainly intended to assert with the 1987 PS/2 launch. Apple’s iconic old “Big Brother” Macintosh advertisement, a soaring triumph of rhetoric over substance back in its day, would have fit much better to the PS/2 line than it had to the state of business computing back in 1984. Many chose to blame the change in tone on the loss of Don Estridge, the leader of the small team that had built the original IBM PC. An unusually charismatic personality and independent thinker for the famously conservative and bureaucratic IBM — enough so that he had been courted by Steve Jobs to fill the CEO role John Sculley ended up taking at Apple — Estridge had been killed in a plane crash in 1985. His stewardship over IBM’s microcomputer division had been succeeded by that of William Lowe, a much more traditional rank-and-file, buttoned-down IBM man. Whether due to this reason or some other, the shift in tone and direction from 1981 to 1987 was striking.

In the months following the PS/2 line’s release, the media narrative drifted from one of uncertain excitement to reports of the new machines’ disappointing reception in many quarters. IBM sold around 200,000 MCA-equipped PS/2s in the first six months, mostly to the biggest of big business; United Airlines alone, for example, bought 40,000 of them as part of a complete revamping of their reservations system. But far too many even within the Fortune 500 proved stubbornly, unexpectedly resistant to IBM’s unsubtle prodding to jump onto the PS/2 train. Many chose to invest in the clonesters’ cheaper 80386 offerings instead; the 16-bit bus used by those machines, while far from ideal from a purely technical standpoint, did at least have the advantage of compatibility with existing peripherals. Seventeen months after MCA’s debut, 66 percent of all business computers being sold each month were still using the old bus architecture, versus just 20 percent that used MCA. (The remainder was largely accounted for by the Macintosh.) Survey after survey reported IBM to be losing market share rather than gaining it since the arrival of the PS/2. By this point OS/2 and its “Presentation Manager” GUI were finally available, but, hampered by that high price tag, the new operating system’s uptake had also been limited at best.

And then, just when it seemed the news couldn’t get much worse for IBM, much of the industry went into unthinkable open revolt against their ongoing hegemony. On September 13, 1988, a group of the clonesters, driven as usual by Compaq and with the tacit support of Intel and Microsoft, announced the creation of a new 32-bit bus standard, to be called the Extended Industry Standard Architecture, or EISA. Unlike MCA, EISA would be compatible with older 16-bit and 8-bit peripherals. And it would manage to be so without performing notably worse than MCA, thus giving the lie to IBM’s claims that their decision to abandon bus compatibility had been motivated by technical rather than business concerns. The press promptly dubbed the budding consortium, which included virtually every manufacturer of IBM-compatible computers not named IBM, the “Gang of Nine” after the allegedly traitorous Gang of Four of the Chinese Cultural Revolution. Machines using the new EISA bus entered production within a year.

This shot of an EISA card illustrates the unique two-layer connection devised by the Gang of Nine to extend the old ISA standard without requiring ridiculously long, unwieldy cards and sockets. The shorter pins correspond to the older 16-bit standard; the longer extend it to 32 bits.

In the end, EISA would prove of limited technical importance in the evolution of the Intel architecture. The new standard didn’t have much more luck than had MCA in establishing itself as the market’s default. Instead, by the time a 32-bit bus became a truly commonplace need among ordinary computer users, EISA and MCA alike were replaced by a still newer and better standard than either called the Peripheral Component Interconnect, or PCI. The bus wars of the late 1980s and very early 1990s can thus all too easily be seen as just another of the industry’s tempests in a teapot, an obscure squabble over technical esoterica of interest only to hardcore hackers.

Look a little harder at EISA, however, and we see a watershed moment in the history of the personal computer that dwarfs even the arrival of the Compaq Portable or the Deskpro 386. The Gang of Nine’s announcement brought with it a torrent of press coverage that for the first time openly questioned IBM’s continuing dominance of business-oriented computing. CNN’s Moneyline, the most-watched business report on cable television, dredged up Canion’s evergreen New Coke analogy yet again, going so far as to open its reports on the Gang of Nine’s announcement with a shot of soda bottles moving down a production line. IBM was “faced with overwhelming resistance to the flavor of ‘New Compute,’” declared the breathless report that followed; September 13, 1988, “was a day that left Big Blue looking black and blue.” An only slightly more sober Wall Street Journal article had it that the Gang of Nine “was joining forces in an audacious attempt to wrest away from IBM the power of setting the standard for how personal computers are designed, and they seem to have a chance of succeeding.” The article threw all its metaphors in a blender for the big conclusion: “For IBM, the Gang’s announcement yesterday is at best a dust storm of confusion, and, at worst, a dagger to the heart of its PC strategy.” When the Wall Street Journal threatens to turn against your big business, you know you have problems.

And, indeed, September 13, 1988, wound up representing everything the pundits and journalists said it might and more. Simply put, this was the instant that IBM finally and definitively lost control of the business-computing industry, the moment when the architecture they had created back in 1981 left the nest to go its own way. After this instant, no one would ever defer to IBM again. In January of 1989, Arlan Levitan, a columnist for the big consumer-computing magazine Compute! — like most such magazines, not particularly known for the boldness of its editorial stances — signaled the shifting conventional wisdom. His editors empowered him to launch a satirical broadside at IBM, the PS/2, MCA, and even all those who had bought into the hype, a group that very much included their own magazine.

During a Monday morning press breakfast hosted by IBM, over a thousand representatives of the computing press were shocked to hear newly hired Entry Systems Division president P.W. Herman declare that the firm’s PS/2 computer systems and its associated products were part of an elaborate psychological study undertaken at the behest of the National Institute of Mental Health. “I sure am glad the American people haven’t lost their sense of humor. It’s good to know that in these times everybody still appreciates a good joke.” According to Herman, the study was intended to quantify the limits of the operational parameters associated with Abraham Lincoln’s most famous aphorism. Said Herman, “I guess you really can’t fool all of the people all of the time. I’ll tell ya, though — the Micro Channel Architecture even had me going for a while.” All PS/2 owners will receive a letter signed by Herman and thanking them for their personal contribution toward furthering the present-day understanding of aberrant behavior. Corporate executives who committed their firms to IBM’s $800 OS/2 operating system will receive free remedial therapy in DOS reeducation centers. Those who took advantage of IBM’s trade-in policy, whereby users gave up their XTs or ATs for a PS/2, will receive their weight in PCjr computers. According to internal IBM sources, all costs associated with manufacturing and promoting PS/2s will cumulatively qualify as a tax-deductible research grant.

In terms of hardware if not software — Microsoft’s long, often damaging domination was just beginning in the latter realm — the industry was now a meritocracy, bound together only by a set of mutually if often only tacitly agreed-upon standards. That could only mean hard times for IBM, who were hardly used to competing on such a level playing field. In 1993, they posted a loss of a staggering $8 billion, the largest to that point in American business history, prompting a long, painful process of reinvention as a smaller, nimbler, dare I say it even humbler company. In 2004, in another watershed moment symbolic of many things, IBM stopped making PCs altogether, selling what was left of their personal-computer division to the Chinese computer manufacturer Lenovo in order to focus on consulting services.

The PS/2 story has rightfully gone down in business history as a classic tale of overweening arrogance that received its justified comeuppance. In attempting so aggressively to seize complete control of business computing — all of it — IBM pissed away the enviable dominance they already enjoyed. In attempting to build an empire that stood utterly alone and unchallenged, they burned the one they already had.

Yet there is another side to the PS/2 story that also deserves its due. Existing in those seemingly misbegotten machines alongside MCA and the cynicism it represented was a more positive, one might even say technically idealistic determination to advance the state of the art for this architecture that had long since become the mainstream face of computing, dwarfing in terms of the sheer money it generated any other platform.

And make no mistake: the world of the IBM compatibles was in sore need of advancement on multiple fronts. While machines like the Apple Macintosh and Commodore Amiga had opened whole new paradigms of computing — the former with its friendly GUI interface and crisp almost print-quality display, the latter with its multitasking operating system and implementation of the ideal of multimedia computing long before “multimedia” became a buzzword — the world of the clones had remained as bland as ever, a land of green or amber text-only displays, unpleasant beeps and squawks, and inscrutable command lines. For all the apparently proud users and sellers who took all this ugliness as a sign of serious businesslike intent, there were others who recognized that IBM and the clonesters had long since ceded the high ground of real, fundamental innovation in computing to rival platforms. Thankfully, some inside IBM were included in the latter group, and the results could be seen in the PS/2 machines.

Given how far the IBM-compatible world had fallen behind, it’s not surprising that many or most of the alleged innovations of the PS/2 were really a case of playing catch-up. For example, IBM finally produced their first-ever mouse for the line. They also switched over from the old, fragile 5.25-inch floppy-disk format to the newer, more robust and higher-capacity 3.5-inch format already being used by machines like the Macintosh and Amiga.

But undoubtedly the most welcome and significant of all the PS/2’s new technical developments were some desperately needed display improvements. The Video Graphics Array, or VGA, was included with the higher-end PS/2 models; lower-end models shipped with something called the Multi-Color Graphics Array (MCGA), with many but not quite all of the capabilities of VGA. After allowing their machines’ graphics capabilities to languish for years, IBM through VGA and to some extent MCGA finally brought them up to a level that compared very favorably with the Amiga. VGA and MCGA defined a palette of fully 262,144 colors, a huge leap over the 64 offered by the Enhanced Graphics Adapter (EGA), IBM’s previous best display option for their mainstream machines. The Amiga, by contrast, offered just 4096 colors, although its blitter and other custom hardware still gave it some notable advantages in the realm of fast animation.
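The three palette figures quoted above are all powers of two, and a short sketch shows where they come from. The bits-per-channel values here are my own gloss, not something stated in the sources: I am assuming 2 bits each of red, green, and blue for EGA, 4 for the Amiga, and 6 for VGA and MCGA. The helper `palette_size` is hypothetical.

```python
def palette_size(bits_per_channel: int) -> int:
    """Number of distinct colors when red, green, and blue
    each get bits_per_channel bits."""
    return 2 ** (3 * bits_per_channel)


for name, bits in [("EGA", 2), ("Amiga", 4), ("VGA/MCGA", 6)]:
    print(f"{name}: {palette_size(bits):,} colors")
```

Bear in mind that palette size says nothing about how many of those colors can appear on the screen at once, which is a separate and often more important constraint.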

All of these new developments marked IBM’s last great gifts to the standard they had birthed — gifts destined to long outlive the PS/2 line itself. The mouse connection IBM developed, for instance, remained a standard well beyond the millennium, with so-called “PS/2” connectors remaining common jargon, used by younger tech-heads and system builders who likely had only the vaguest idea from whence the usage derived. The VGA standard proved even longer-lived. It still survives today as the lowest-common-denominator baseline for computer displays, while ports matching the specification defined by IBM all those years ago remain on the back of every monitor and television set.

Ironically given IBM’s laser focus on using the PS/2 line to secure their dominance of business computing, its technical innovations ultimately proved most important in making the architecture viable as a proposition for the home, paving the way for the Microsoft-dominated second home-computer revolution of the 1990s. With good graphics falling into place at last thanks to VGA and the raw power of the 32-bit 80386, only two barriers remained to making PC-compatible machines realistic rivals to the likes of the Amiga as compelling home computers: decent sound to replace those atrocious beeps and squawks, and a decent price.

The first problem wouldn’t be a problem at all for very much longer. The first gaming-focused sound cards began to reach the market within a year of the PS/2 line’s debut, and by 1989 Creative Music Systems and Ad Lib both offered popular cards at street prices of $200 or less.

But the prices of home-oriented systems incorporating all of the PS/2 line’s innovations — MCA excepted — would, alas, take a little longer to fall. As late as July of 1989, when the VGA standard was already more than two years old, Computer Gaming World ran an article titled “Is VGA Worth It?” that seriously questioned whether it was indeed worth the still very considerable expense — VGA boards still cost $500 or more — to so equip a machine, especially given how few games supported VGA at that point. Nor did the 80386 find an immediate place in homes. As the 1980s turned into the 1990s, the newer chip was still a piece of pricey exotica in terms of the average consumer’s budget; the vast majority of the Intel-based PCs that were in consumers’ homes were still built around the 80286 or even the venerable old 8088.

Still, in the long run prices could only fall in such a hyper-competitive market. Given Commodore’s lackadaisical attitude toward improving the Amiga and Apple’s almost complete neglect of the consumer market in their eagerness to force the Macintosh into the offices of corporate America, the emerging standard of a 32-bit Intel-based PC with VGA graphics and a sound card came to the fore effectively unopposed. With the Internet having yet to emerge as home computing’s killer app to end all killer apps, it was games that drove this shift. In 1989, an Amiga was still the ultimate gaming computer. By 1991, it was an afterthought for American game publishers, the market being absolutely dominated by what was now starting to be called the “Wintel” standard. While game consoles and mobile devices have come and gone by the handful over the years since, in the realm of desktop- and laptop-based personal computing the heirs of the original IBM PC remain the overwhelming standard to this day. How ironic that this decades-long dominance was ensured by the PS/2, simultaneously the downfall of IBM and the savior of the inadvertently standard architecture IBM created.

(Sources: the books Big Blues: The Unmaking of IBM by Paul Carroll, Open: How Compaq Ended IBM’s PC Domination and Helped Invent Modern Computing by Rod Canion, and Hard Drive: Bill Gates and the Making of the Microsoft Empire by James Wallace and Jim Erickson; Byte of June 1987, July 1987, August 1987, and December 1987; Compute! of June 1988, January 1989, and March 1989; Computer Gaming World of July 1989 and September 1989; Wall Street Journal of September 14, 1988; the episodes of The Computer Chronicles titled “Intel 386 — The Fast Lane,” “IBM Personal System/2,” and “Bus Wars.”)

Footnotes

[1] The full story of OS/2 and the Presentation Manager and their relationship to Microsoft Windows and even Apple’s MacOS is a complex yet fascinating one, but also one best reserved for a future article where I can give it its proper due.

John

August 19, 2016 at 7:33 pm

I haven’t seen a TV with a DE-9 VGA connector on it like I remember VGA cards having.

Are you thinking SVGA with the DE-15 connector?

Jimmy Maher

August 20, 2016 at 9:33 am

The 15-pin connector debuted with MCGA and VGA, not SVGA. There’s a pretty good shot of the PS/2 video connector in the July 1987 Byte: https://archive.org/stream/byte-magazine-1987-07/1987_07_BYTE_12-08_LANs_IBM_PS2_Models_30_50_60_CAD_Software#page/n253/mode/2up.

Casey Muratori

August 19, 2016 at 10:53 pm

Minor point for those interested: it’s a little misleading to discuss the relative total color counts of Amiga vs. VGA or MCGA because that wasn’t the primary thing that determined how good the graphics looked or what games could be played on them. Yes, the Amiga could select from 4096 colors, but in practice each individual pixel could only select from 32 colors (or 64 with some restrictions), whereas VGA and MCGA displays could select from 256 colors per pixel, so that was the more relevant figure for colors… but this wasn’t the most important part either.

The more important part was that VGA displays had the benefit of optionally allowing pixels to be wholly determined by individual bytes in memory, in contrast to the Amiga’s “bit-planar” architecture where each bit of a pixel’s color index was stored in a separate plane. While bit-planar displays are more flexible in that they offer more display mode options that use less memory (e.g., you can have a 32-color display mode that actually only uses 5 bits per pixel, something obviously not possible with a byte-per-pixel layout), the layout made it all but impossible for CPUs at the time to actually render pixels efficiently.

This ended up being why Amigas, despite previously being much more powerful graphically due to built-in hardware for moving memory around, couldn’t deliver the kind of CPU-centric rendering that would come to characterize the PC’s 2.5D game revolution (Wolfenstein, Doom, etc.)

It was a very interesting transition, and a notable demonstration of how much the architectural decisions behind the displays mattered. Unfortunately, we’ll never know if Amiga would have countered in time with a similar architecture, since the plans of the original Amiga team to move the platform forward were scrapped by Commodore long before any of this happened :(

Cheers,

– Casey

Jimmy Maher

August 20, 2016 at 9:50 am

As I’m sure you’re well aware, any comparison of whether VGA or Amiga graphics were “better” quickly gets very complicated, with even the finest of points seemingly having a counterpoint. One might, for instance, be tempted to say that the IBM architecture could display still images in better quality (perfect for business graphics), while the Amiga had the edge in animation (perfect for gaming and video production), and leave it at that. But then the Amiga actually was much better at displaying still photographs and other scanned images. Its HAM mode, for all its restrictions, was so suited to this particular task as almost to seem custom-designed for it. In this particular application, being able to display (almost) any of 4096 colors was far more useful than being restricted to 256 from a palette of 262,144. In the end, as happens so often in technology, the question of which was better comes down to what you’re really trying to do.

For anyone interested in digging deeper into this, I do just that in my book about the Amiga, including the death blow that Wolfenstein 3D and Doom represented to the Amiga as a continuing viable rival to the Intel architecture as a game machine. In this article, though, I thought it better to stay out of those weeds — they being the virulent sort that can quickly eat an article whole — and just point out that VGA did at the very least put the IBM world and the Amiga world in the same conversation when it came to graphics quality.

One other wonderful thing about VGA that would make life vastly easier for countless artists and programmers over decades to come: it had *square* pixels. In other words, if you drew a box of 25 pixels by 25 pixels, you actually *got* a box, not a rectangle. Words can hardly express how wonderful this is to work with after living with an Amiga or another computer with funky oblong pixels.

Lotus

August 20, 2016 at 5:18 pm

I think the simplest way to look at it is:

– The most common resolution for games on the Amiga was 320×200 pixels with 32 colors. Due to the Amiga’s custom chips, the games had smooth scrolling, animated sprites, digital sound samples and pretty good MOD music.

– Once games on the PC used VGA and Soundblaster cards, they mostly used a resolution of 320×200 pixels with 256 colors, had digital sound samples and poor/mediocre/somewhat okay Adlib/MIDI music. The PC’s fast main CPU (386 & later 486) was far more capable of calculating 3D graphics (especially compared to the now old but most widespread Amiga model, the A500), so with PC games like Comanche, X-Wing, Ultima Underworld, Wolfenstein 3D and Doom, the Amiga was left far behind.

Whomever

August 21, 2016 at 1:48 am

Re music, let’s not forget the Roland MT-32, which a lot of games supported. Not super cheap but the sound lasted-Roland combo was epic. And lots of games supported it.

Whomever

August 21, 2016 at 1:49 am

Argh! Sound blaster, not lasted. Damn you autocorrect.

Pedro Timóteo

August 21, 2016 at 8:41 am

Actually, I’d say the most common resolution on the Amiga was, unfortunately, 320x200x16 colors, especially in UK-made games, since it was common to port Atari ST graphics (and often code) to the Amiga, to save on costs (much like how many Amstrad CPC games used Spectrum code and, often, ported Spectrum monochrome-ish graphics). American and German-made games were more likely to use 32 colors, as far as I remember.

Like others said, the advent of VGA / MCGA graphics, particularly the resolution of 320x200x256 colors, was when games started to look better on the PC than on any other system (and would do so for a while, with perhaps the SNES as its only competition). This was particularly noticeable in magazine screenshots (the PC ones started looking better than everything else), and also in the complaints of Amiga gamers: instead of “they’re selling us bad EGA PC ports that don’t take advantage of the Amiga’s superior graphics” (e.g. Sierra’s AGI and EGA SCI releases, Ultima up to 5, etc.), suddenly it was “these graphics look bad when dithered from 256 to 32 or 16 colors, the game has a bad frame rate, and switching between 15 disks is not fun.” :) (Sierra’s VGA games, Ultima 6, Wing Commander, and many more.)

It was also impressive how long the 320x200x256 mode lasted as the default / best mode for gaming. For instance, three generations of the Ultima series, all with different engines:

– Ultima 6 (1990)

– Ultima 7 (1992)

– Ultima 8 (1994)

Gnoman

August 21, 2016 at 8:21 pm

The truly odd thing about that is that XGA -which was required to support 640x480x65536 or 1024x768x256 – was introduced in 1990 (technically even sooner with the IBM 8514 add-in board for the PS/2 released in 1987 – the first plug-in GPU ever as far as I can tell) and folded into the Super VGA umbrella standard.

While it took time for this to create significant market penetration, it is honestly surprising that it had so little support even 4 years after introduction.

jmdesp

November 19, 2019 at 11:06 am

There’s an obvious answer for why 320x200x256 stood for so long, and it’s very linked to the 640 kb story !

The reason you could not use more than 640 kb is that memory above it was reserved for “other usages”, and one of those was a 64 kb “window” to address video memory.

But the largest 256-color resolution that fits into that is 320×200 ! For an amazingly long time, this resulted in a lot of problems doing anything in video memory that required more than 64 kb.
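The arithmetic behind that claim is easy to check. The sketch below assumes one byte per pixel (as in the 256-color modes) and a window of exactly 64 × 1024 bytes; the helper names are hypothetical and exist only for this illustration.

```python
WINDOW_BYTES = 64 * 1024  # size of the video memory window, in bytes

def framebuffer_bytes(width: int, height: int, bytes_per_pixel: int = 1) -> int:
    """Memory needed for one full screen at the given resolution."""
    return width * height * bytes_per_pixel

def fits_in_window(width: int, height: int) -> bool:
    """True if a whole 256-color screen can be addressed without bank switching."""
    return framebuffer_bytes(width, height) <= WINDOW_BYTES

for width, height in [(320, 200), (320, 240), (640, 480)]:
    size = framebuffer_bytes(width, height)
    verdict = "fits" if fits_in_window(width, height) else "does not fit"
    print(f"{width}x{height}x256: {size} bytes, {verdict} in {WINDOW_BYTES}")
```

At one byte per pixel, 320×200 needs 64,000 bytes and squeezes under the 65,536-byte limit, while 320×240 (76,800 bytes) and 640×480 (307,200 bytes) do not.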

You had to switch the 64 kb window to a different part of the video memory to address it, which worked in a completely different and barely documented way for every single video card ! Until the VESA standard came with an API to do it in a compatible way between cards.

But even that didn’t really solve the issue immediately.

Because even if you knew how to do it, there was additionally a more fundamental problem : calculation within 64 kb on a still largely 16-bit CPU was very easy and efficient, but as soon as you had more pixels than that it became a lot less efficient and quick. Programmers had learned at that time to make do with the limited 320×200 resolution, and compensate with highly sophisticated 3D graphics within that resolution. If you wanted to use a higher resolution, yes it was more pleasant on the eyes, but you suddenly had 4 times more pixels to handle, which quite overwhelmed the limited CPUs of the time, especially given all the calculations required to address the correct part of memory. As a result, you ended up hardly able to still have as sophisticated graphics as in 320×200 ! (or if you did, your rendering speed was at risk of suffering greatly).
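To make the extra addressing work concrete, here is a minimal sketch of where a pixel lands once the screen no longer fits in one window. It assumes one byte per pixel and banks of exactly 64 KB that tile video memory end to end; real cards differed in bank granularity and in how a bank was selected, so `locate_pixel` and `banks_needed` are hypothetical helpers rather than any card's or VESA's actual interface.

```python
BANK_BYTES = 64 * 1024  # assumed bank size, matching the 64 KB window

def locate_pixel(x: int, y: int, width: int) -> tuple:
    """Return (bank, offset) for a pixel in a byte-per-pixel mode.

    In 320x200 the bank is always 0, so a 16-bit offset is the whole story.
    In larger modes the program must also track which bank is mapped in.
    """
    linear = y * width + x
    return divmod(linear, BANK_BYTES)

def banks_needed(width: int, height: int) -> int:
    """Number of 64 KB banks a full screen spans (ceiling division)."""
    return -(-(width * height) // BANK_BYTES)

print(locate_pixel(319, 199, 320))  # last pixel of 320x200
print(locate_pixel(256, 102, 640))  # first pixel past the first bank in 640x480
print(banks_needed(640, 480))
```

In this model a 640×480 screen spans five banks, and the first bank boundary falls in the middle of scanline 102, so even a single horizontal line can straddle two banks and force a switch partway through.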

So 320×200 stayed as the best, most convenient option for a long time, until games were fully running in “protected” and truly 32-bit mode, and also very soon using video-card APIs for 3D display, not manipulating all the pixels themselves anymore.

berg

August 27, 2016 at 5:23 am

> One other wonderful thing about VGA that would make life vastly easier for countless artists and programmers over decades to come: it had *square* pixels. In other words, if you drew a box of 25 pixels by 25 pixels, you actually *got* a box, not a rectangle.

That’s true for the 640×480 mode, but it only supported 16 colors—fine for office software but not great for games or art. The only “standard” mode with more colors was 320×200, meaning non-square pixels. The underdocumented 320×240 “mode X” was somewhat popular, and had square pixels with 256 colors… in a weird planar memory layout that made programming difficult.

Jimmy Maher

August 27, 2016 at 7:41 am

Ah, you’re right of course. At least it would get there in time, when SVGA and 640X480 resolutions became typical in games!

Iggy Drougge

February 6, 2021 at 2:36 am

In PAL resolutions, which was where most Amiga games were developed, pixels were square. It was only in the 320×200 NTSC resolutions that pixels were oblong.

Scali

December 26, 2016 at 4:11 pm

Yes, you can argue that you can only pick from a palette of N colours, where N = 32 (or 16 or whatever) on Amiga, and N = 256 on PC.

However, you can change this palette as many times as you like during the screen.

On PC this wasn’t really practical, because the limited hardware and the fact that no two systems were exactly the same made timing such changes very difficult.

On Amiga however, you had the copper, where you could have the hardware change the palette at exact positions on the screen, as many times as you liked.

So quite a few Amiga games had far more than 256 colours on screen, while the VGA versions did not. A common example is Blues Brothers. Or Zool. They have a very colourful backdrop on Amiga, and just a plain single colour on PC.

Aside from that, many early VGA games were similar to the Atari/Amiga games mentioned: VGA just got the graphics ported from the Amiga version, so only 16 or 32 colours were used.

Edgar

August 20, 2016 at 1:22 am

I have 5.25 inch disks that still work, whereas all my 3.5 inchers are long dead…

Thanks for the article!

Alexander Freeman

August 20, 2016 at 5:29 am

I’d say even though IBM was indeed arrogant, it still had good justification to be pissed off at all the people making IBM clones. After all, even though what they were doing was technically legal, it was still IBM that had done most of the work, but it was they who were reaping most of the benefits. Making all the computer parts but one (the chip) open-architecture was remarkably short-sighted of IBM, though.

_RGTech

March 29, 2025 at 6:50 am

They just couldn’t have done it differently. Remember how the original PC 5150 was a skunkworks piece, done in 12 months?

Yes, in hindsight it’s clear that the copyrighted BIOS was not really a “copy protection” for the whole system, but it’s also clear that the open architecture and all the available third-party accessories were a necessary part of the success story. Don Estridge really knew what he was doing.

Shutting the cloners out of the business in the long term would have required _adding_ a new (faster, better, nearly plug&play) standard like MCA _and_ – at the same time – keeping the ISA bus. But removing all legacy add-in compatibility was like shooting yourself in the foot.

anonymous

August 20, 2016 at 6:53 am

You’ve got a “costed” where you should have “cost.”

Jimmy Maher

August 20, 2016 at 10:01 am

Thanks!

Christopher Benz

August 21, 2016 at 3:29 am

Another great read – thanks!

I’m finding this blog utterly compelling whether you are taking a macro perspective on the 80’s IT industries or doing a close reading of ‘Wishbringer’. Remarkably, the relationship between these seemingly diverse preoccupations seems natural in a way that I think is quite ahead of its time.

Looking forward to reading future instalments!

FZ

August 26, 2016 at 4:48 am

Wow, this sure brought back memories. Great job, as always.

One overlooked, crucial fact about Amiga vs. VGA graphics: More often than not the Amiga output ended up on a CRT TV with scanlines, which meant that an identical 320×200, 32 color image looked much better than the comparatively blockier VGA monitor version.

Jim

August 30, 2016 at 8:09 pm

Great memories – thank you!

One small point, the EISA card you have pictured is actually a VESA ISA card – used exclusively for video adaptors including the first accelerated 3D cards. The EISA adaptor was actually a very interesting bit of engineering that used a two level socket (the shallow part was pinned for ISA cards, and the deeper level (that ISA cards could not reach) had extra contacts. Example pictured:

http://philipstorr.id.au/pcbook/images/ex33e3.jpg

Jimmy Maher

August 31, 2016 at 10:20 am

Thanks so much! I’ve replaced my image with yours, and changed the description appropriately.

Nate Edel

October 24, 2024 at 3:01 am

VESA Local Bus (or VLB) is an interesting technology, because video cards, as the early 1990s settled in, were far and away the biggest thing most people wanted to be faster. VLB was a very much ISA-like, simple standard unlike EISA (or MCA.)

Other than video, I’m not sure what desktop users would have wanted at the time where 16-bit ISA wasn’t fast enough – individual IDE hard drives couldn’t saturate the bus back then, and just about anything else was slower still.

EISA was also a popular technology in early servers, which were the only places I ever saw it (although they did make VLB SCSI controllers, too.)

_RGTech

March 29, 2025 at 7:07 am

VLB was a nice piece of technology for a short period, very closely related to the 486 – it was connected directly to the CPU. This made it faster than EISA or MCA, even in their later revisions. And that was also a problem, because the specification only accounted for 33 MHz… faster 486 CPUs with a higher bus clock (it took a while for the invention of the clock-doubling DX2!) really drove VLB to its limits. Where a 33 MHz system could use 3 VLB cards, you had to stay with 1 card on a 50 MHz system (and even that wasn’t necessarily reliable).

Aside from the usual VLB video card, there were also VLB controller cards for IDE drives and ports. Those were the parts that really profited from the faster bus.

Then came the Pentium, and with it usually PCI, and the topic was moot*. (Until the ever faster video cards demanded their separate AGP.)

* okay, I have an EISA-PCI-board for a Pentium 60/66… for whatever reason, someone thought it was a good idea to build that strange combination.

Melfina the Blue

October 1, 2016 at 10:20 am

Whee, the PS/2! The first computer I fell in love with (along with OS/2). Sadly, ours was retired in favor of an Aptiva with a CD-Rom drive (either the CD-Rom for the PS/2 was ridiculously expensive even with an employee discount or IBM didn’t have one).

Also, you’ve finally given me an idea as to why IBM offered long-time employees such a good retirement buyout in ’93. Always wondered why my mother (who’d been there for 35 years and was in Consulting Relations which apparently meant helping customers figure out what they wanted to buy) got such a good package.

Love the blog, I find all the history of this sort of thing really fascinating, especially since we’re now getting to an era I have some memories of.

flowmotion

December 5, 2016 at 4:58 am

Great article about a critical episode in PC history which isn’t discussed very often.

One aspect not mentioned in this article was the USDOJ Anti-Trust Consent Decree IBM was under. It is often stated that IBM mistakenly made the original PC an open architecture due to time or market considerations. The truth is they really didn’t have a choice: they were under a government edict to provide fully documented interfaces to “plug-compatible” manufacturers at a “reasonable and non-discriminatory” fee. Government purchasing rules also required them to provide “second sources” for their equipment, which is essentially why AMD exists.

In 1986, IBM successfully argued to a judge that the decree be lifted, using Microsoft and the clone market as proof they were no longer a computing monopoly. How right they were. The first thing IBM did was introduce MicroChannel and OS/2, and the resulting stink eventually killed them both. However, IBM still exists largely because they still have a complete monopoly on the mainframe market.

> Compaq’s CEO Rod Canion, never a shrinking violet under any circumstances, outdid himself at the launch, declaring the Deskpro 386 “the third generation of the personal-computer revolution” after the Apple II and the IBM PC, thus implicitly placing his own Compaq on a par with those two storied companies.

Given that Linux was developed on a generic 386 AT clone, I have trouble disagreeing with this. The world’s datacenters currently run on commodity x86 and Linux.

While desktop PCs were bogged down with DOS and all that crap for years, Compaq essentially invented the enterprise x86 server market, promoted Windows NT and SCO and Netware and other 32-bit OSes, and was the x86 server market leader until HP (#3) bought DEC (#2) and Compaq (#1).

Meanwhile, IBM did everything they could to stall x86 servers, in order to protect their midrange equipment. They were the last major vendor to sell rackmount systems, even while “IBM shops” filled up with Compaq file servers and mail servers and the like. I’m certain if MCA had won, IBM would have simply banned large scale x86 systems and delayed the commodity server revolution by ten years or more. That means no Yahoo, no Hotmail, no Google, and so on.

Jimmy Maher

December 5, 2016 at 8:37 am

Thanks for this! That’s some context and background I hadn’t fully considered before. The myth that the IBM PC was a slapped-together product that “accidentally” became open-architecture is a pervasive one. I blame it primarily on the horrid Robert X. Cringely (what a perfect name!) and his Triumph of the Nerds book and television documentary.

flowmotion

December 6, 2016 at 7:40 am

Thanks. The patent licensing was certainly a huge part of it, but not all of it.

The RND decree was why the PC industry spent the 386 and 486 era mostly pushing extended IBM PC AT (286) technologies rather than new things. EISA. SVGA. etc. It was safe stuff that IBM couldn’t sue over.

But, after the leader had its head chopped-off, the PC market devolved into chaos until circa 1994 when Microsoft and Intel started to make WinTel into a modern platform with PCI, USB, ISA-PNP, APIC, etc. Even now, there still is 1981 stuff they haven’t totally replaced.

Historians like Cringely like to focus on the sexy big-named “failures” like Apple and Commodore. But before the IBM PC came out there was already a large established market of “Altair-clones” — Z80/S100 commodity “business computers”. And that included some (back-then) name-brand competitors like Texas Instruments and Zenith and the like. The hardware was mostly identical, but all the details differed and that killed interoperability. These personal computers mostly didn’t play games, so they aren’t relevant to your blog, but they were a huge market with hundreds of competitors.

IBM legally had to create a platform which would be commodified and “standardized”. But they actually were largely competing against an already commodified market of Z80 business machines, and not really against Apple and Atari and the rest. IBM had a strategy to up the game, and it was not a mistake that the pre-existing commodity market converged on “IBM PC” RND standards. IBM wanted to be A#1 in commodity personal computing, and that’s what they were. Point being it was no accident.

DZ-Jay

May 1, 2017 at 10:58 am

You’ve mentioned this in a few articles already and I disagree with your characterization of Cringely. Not that I have any love for the tabloid rambler, but having read his book and watched his documentary, I never got the impression from him that IBM just happened to “slap together” a product that “accidentally” became open-architecture.

It is true that his narrative focuses on the time-to-market as the big motivator, but his point is that IBM did a very un-IBM thing at the time: created a product from off-the-shelf components rather than from proprietary technology, which is their wont. Moreover, in Cringely’s telling, this was very much by design, with some very smart people assigned to the project — not “slapped together.”

You, on the other hand, seem to be taking two opposing views on the subject. On the one hand you claimed in your previous IBM article how very much IBM-like this move was, that “open architecture” was the way they always operated (and let’s not get into your confusion between “open architecture” as it was known in the mainframe industry, to represent a vendor-supplied, yet still proprietary, ecosystem; versus that used in software nowadays, to represent transparently published technology standards).

You even went out of your way to suggest that the project was run by some renegade group attempting to move IBM into a new direction of openness.

Then, on the other hand, here you are admitting to the notion that IBM “went back” to their old ways by attempting to take control of the industry via their proprietary standards.

As I see it, Cringely’s version rings much, much closer to what flowmotion said above, if a bit overzealous in the motivations: That IBM briefly took a non-IBM step in creating the Personal Computer, one which they eventually aimed to correct by tightening their grip of all standards and technologies; resulting in the abdication of the first and the failure of the second.

About the only thing Cringely missed to that point was that the motivation was largely a consent decree constraining their business practices, rather than purely time-to-market.

-dZ.

Harlan Gerdes

January 9, 2017 at 3:54 am

I love this blog, I learn so much about computing history that I never knew. And it’s all in a semi chronologically arranged format. Great work!!

Steve Pitts

January 7, 2023 at 4:43 pm

Is it just me or is “clonester” a horrid made up word? Surely somebody who clones things is a cloner?

_RGTech

March 29, 2025 at 7:57 am

One little bit…

The MCA was _not_ designed as “a complete break with the past which supported only 32-bit peripherals.” IBM also relied heavily on 80286 machines in the PS/2 line (the 80286 was the design target for OS/2!), so they did the worst thing possible: create a new, incompatible bus – but with different specs for 16 or 32 bit machines.

But I’ll admit that it was the first (and, for a while, only) bus to even allow 32 bit data transfer. So, if the word “only” vanishes or moves to another place, the sentence will be perfectly fine :)

I don’t envy the card manufacturers or the buyers, having to choose between best performance (32 bit MCA, only for the expensive 386 PS/2s) or a wider audience (16 bit MCA for 286 and 386 systems)

No wonder many skipped MCA altogether and kept on building (buying) ISA cards for the rest of the world.

And we shouldn’t forget that IBM also introduced the PS/2 Model 30, with a full 16-bit CPU (8086, as opposed to the 8088 from the PC and XT) and still only an 8-bit ISA-Bus. And despite the name, it would never be able to run OS/2. Confusion, anyone?