The first finished devices to ship with the 3Dfx Voodoo chipset inside them were not add-on boards for personal computers, but rather standup arcade machines. That venerable segment of the videogames industry was enjoying its last lease on life in the mid-1990s; this was the last era when the graphics of the arcade machines were sufficiently better than those which home computers and consoles could generate as to make it worth getting up off the couch, driving into town, and dropping a quarter or two into a slot to see them. The Voodoo chips now became part and parcel of that, ironically just before they would do much to destroy the arcade market by bringing equally high-quality 3D graphics into homes. For now, though, they wowed players of arcade games like San Francisco Rush: Extreme Racing, Wayne Gretzky’s 3D Hockey, and NFL Blitz.

Still, Gary Tarolli, Scott Sellers, and Ross Smith were most excited by the potential of the add-on-board market. All too well aware of how the chicken-or-the-egg deadlock between game makers and players had doomed their earlier efforts with Pellucid and Media Vision, they launched an all-out charm offensive among game developers long before they had any actual hardware to show them. Smith goes so far as to call “connecting with the developers early on and evangelizing them” the “single most important thing we ever did” — more important, that is to say, than designing the Voodoo chips themselves, impressive as they were. Throughout 1995, somebody from 3Dfx was guaranteed to be present wherever developers got together to talk among themselves. While these evangelizers had no hardware as yet, they did have software simulations running on SGI workstations — simulations which, they promised, duplicated exactly the capabilities the real chips would have when they started arriving in quantity from Taiwan.

Our core trio realized early on that their task must involve software as much as hardware in another, more enduring sense: they had to make it as easy as possible to support the Voodoo chipset. In my previous article, I mentioned how their old employer SGI had created an open standard for 3D-graphics programming, known as OpenGL. A team of programmers from 3Dfx now took this as the starting point of a slimmed-down, ultra-optimized MS-DOS library they called GLide; whereas OpenGL sported well over 300 individual function calls, GLide had fewer than 100. It was fast, it was lightweight, and it was easy to program. They had good reason to be proud of it. Its only drawback was that it worked solely with the Voodoo chips — which was not necessarily a drawback at all in the eyes of its creators, given that they hoped and planned to dominate a thriving future market for hardware-accelerated 3D graphics on personal computers.

Yet that domination was by no means assured, for they were far from the only ones developing consumer-oriented 3D chipsets. One other company in particular gave every indication of being on the inside track to widespread acceptance. That company was Rendition, another small, venture-capital-funded startup that was doing all of the same things 3Dfx was doing — only Rendition had gotten started even earlier. It had actually been Rendition who announced a 3D chipset first, and they had been evangelizing it ever since every bit as tirelessly as 3Dfx.

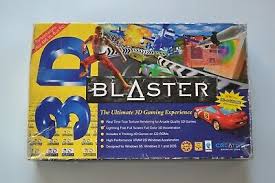

The Voodoo chipset was technologically baroque in comparison to Rendition’s chips, which went under the name of Vérité. This meant that Voodoo should easily outperform them — eventually, once all of the logistics of East Asian chip fabricating had been dealt with and deals had been signed with board makers. In June of 1996, when the first Vérité-powered boards shipped, the Voodoo chipset quite literally didn’t exist as far as consumers were concerned. Those first Vérité boards were made by none other than Creative Labs, the 800-pound gorilla of the home-computer add-on market, maker of the ubiquitous Sound Blaster sound cards and many a “multimedia upgrade kit.” Such a partner must be counted as yet another early coup for Rendition.

The Vérité cards were followed by a flood of others whose slickly aggressive names belied their somewhat workmanlike designs: 3Dlabs Permedia, S3 ViRGE, ATI 3D Rage, Matrox Mystique. And still Voodoo was nowhere.

What was everywhere was confusion; it was all but impossible for the poor, benighted gamer to make heads or tails of the situation. None of these chipsets were compatible with one another at the hardware level in the way that 2D graphics cards were; there were no hardware standards for 3D graphics akin to VGA, that last legacy of IBM’s era of dominance, much less the various SVGA standards defined by the Video Electronics Standards Association (VESA). Given that most action-oriented computer games still ran on MS-DOS, this was a serious problem.

For, being more of a collection of basic function calls than a proper operating system, MS-DOS was not known for its hardware agnosticism. Most of the folks making 3D chips did provide an MS-DOS software package for steering them, similar in concept to 3Dfx’s GLide, if seldom as optimized and elegant. But, just like GLide, such libraries worked only with the chipset for which they had been created. What was sorely needed was an intermediate layer of software to sit between games and the chipset-manufacturer-provided libraries, to automatically translate generic function calls into forms suitable for whatever particular chipset happened to exist on that particular computer. This alone could make it possible for one build of one game to run on multiple 3D chipsets. Yet such a level of hardware abstraction was far beyond the capabilities of bare-bones MS-DOS.

Absent a more reasonable solution, the only choice was to make separate versions of games for each of the various 3D chipsets. And so began the brief-lived, unlamented era of the 3D pack-in game. All of the 3D-hardware manufacturers courted the developers and publishers of popular software-rendered 3D games, dangling before them all sorts of enticements to create special versions that took advantage of their cards, more often than not to be included right in the box with them. Activision’s hugely successful giant-robot-fighting game MechWarrior 2 became the king of the pack-ins, with at least half a dozen different chipset-specific versions floating around, all paid for upfront by the board makers in cold, hard cash. (Whatever else can be said about him, Bobby Kotick has always been able to spot the seams in the gaming market where gold is waiting to be mined.)

It was an absurd, untenable situation; the game or games that came in the box were the only ones that the purchasers of some of the also-ran 3D contenders ever got a chance to play with their new toys. Gamers and chipset makers alike could only hope that, once Windows replaced MS-DOS as the gaming standard, their pain would go away.

In the meanwhile, the games studio that everyone with an interest in the 3D-acceleration sweepstakes was courting most of all was id Software — more specifically, id’s founder and tech guru, gaming’s anointed Master of 3D Algorithms, John Carmack. They all begged him for a version of Quake for their chipset.

And once again, it was Rendition that scored the early coup here. Carmack actually shared some of the Quake source code with them well before either the finished game or the finished Vérité chipset was available for purchase. Programmed by a pair of Rendition’s own staffers working with the advice and support of Carmack and Michael Abrash, the Vérité-rendered version of the game, commonly known as vQuake, came out very shortly after the software-rendered version. Carmack called it “the premier platform for Quake” — truly marketing copy to die for. Gamers too agreed that 3D acceleration made the original’s amazing graphics that much more amazing, while the makers of other 3D chipsets gnashed their teeth and seethed.

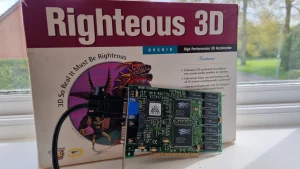

Among these, of course, was the tardy 3Dfx. The first Voodoo cards appeared late, seemingly hopelessly so: well into the fall of 1996. Nor did they have the prestige and distribution muscle of a partner like Creative Labs behind them: the first two Voodoo boards rather came from smaller firms by the names of Diamond and Orchid. They sold for $300, putting them well up at the pricey end of the market — and, unlike all of the competition’s cards, these required you to have another, 2D-graphics card in your computer as well. For all of these reasons, they seemed easy enough to dismiss as overpriced white elephants at first blush. But that impression lasted only until you got a look at them in action. The Voodoo cards came complete with a list of features that none of the competition could come close to matching in the aggregate: bilinear filtering, trilinear MIP-mapping, alpha blending, fog effects, accelerated light sources. If you don’t know what those terms mean, rest assured that they made games look better and play faster than anything else on the market. This was amply demonstrated by those first Voodoo boards’ pack-in title, an otherwise rather undistinguished, typical-of-its-time shooter called Hellbender. In its new incarnation, it suddenly looked stunning.

The Orchid Righteous 3D card, one of the first two to use the Voodoo chipset. (The only consumer category as fond of bro-dude phraseology like “extreme” and “righteous” as the makers of 3D cards was men’s razors.)

The battle lines were drawn between Rendition and 3Dfx. But sadly for the former, it quickly emerged that their chipset had one especially devastating weakness in comparison to its rival: its Z-buffering support left much to be desired. And what, you ask, is Z-buffering? Read on!

One of the non-obvious problems that 3D-graphics systems must solve is the need for objects in the foreground of a scene to realistically obscure those behind them. If, at the rendering stage, we were to simply draw the objects in whatever random order they came to us, we would wind up with a dog’s breakfast of overlapping shapes. We need to have a way of depth-sorting the objects if we want to end up with a coherent, correctly rendered scene.

The most straightforward way of depth-sorting is called the Painter’s Algorithm, because it duplicates the process a human artist usually goes through to paint a picture. Let’s say our artist wants to paint a still life of an apple sitting in front of a basket of other fruits. First she will paint the basket to her satisfaction, then paint the apple right over the top of it. Similarly, when we use a Painter’s Algorithm on the computer, we first sort the whole collection of objects into an ordered list that begins with those farthest from our virtual camera and ends with those closest to it. Only after this has been done do we set about the task of actually drawing them to the screen, in our sorted order from the farthest away to the closest. And so we end up with a correctly rendered image.

But, as so often happens in matters like this, the most logically straightforward way is far from the most efficient way of depth-sorting a 3D scene. When the number of objects involved is few, the Painter’s Algorithm works reasonably well. When the numbers get into the hundreds or thousands, however, it results in much wasted effort, as the computer ends up drawing objects that are completely obscured by other objects in front of them — i.e., objects that don’t really need to be drawn at all. Even more importantly, the process of sorting all of the objects by depth beforehand is painfully time-consuming, a speed bump that stops the rendering process dead until it is completed. Even in the 1990s, when their technology was in a laughably primitive stage compared to today, GPUs tended to emphasize parallel processing — i.e., staying constantly busy with multiple tasks at the same time. The necessity of sorting every object in a scene by depth before even getting properly started on rendering it rather threw all that out the window.
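For the technically curious, here is a toy sketch of the Painter’s Algorithm, assuming a much-simplified world in which every object is a flat shape with a single distance from the camera and a set of screen pixels it covers. The names `Shape`, `painters_algorithm`, and `rect` are hypothetical, invented for illustration; real renderers sort polygons rather than whole objects and have to cope with shapes that overlap in depth. The sketch makes both costs described above visible: the sort must finish before any drawing begins, and hidden pixels get drawn anyway.

```python
from dataclasses import dataclass


@dataclass
class Shape:
    """A hypothetical flat object: a name, ONE depth for the whole shape
    (a simplifying assumption), and the set of (x, y) pixels it covers."""
    name: str
    depth: float  # distance from the camera; larger means farther away
    pixels: set


def painters_algorithm(shapes, width, height):
    """Sort back to front, then draw every shape in full.
    Returns the finished frame and the number of pixel writes performed."""
    frame = [[None] * width for _ in range(height)]
    writes = 0
    # The up-front sort: nothing can be drawn until this has finished.
    for shape in sorted(shapes, key=lambda s: s.depth, reverse=True):
        for x, y in shape.pixels:
            frame[y][x] = shape.name  # nearer shapes simply paint over farther ones
            writes += 1
    return frame, writes


def rect(x0, y0, x1, y1):
    """All pixels in the half-open rectangle [x0, x1) x [y0, y1)."""
    return {(x, y) for x in range(x0, x1) for y in range(y0, y1)}


basket = Shape("basket", depth=10.0, pixels=rect(0, 0, 8, 8))
apple = Shape("apple", depth=5.0, pixels=rect(2, 2, 6, 6))
frame, writes = painters_algorithm([apple, basket], width=8, height=8)
print(frame[3][3], frame[0][0], writes)  # apple basket 80
```

The final image is correct, but 16 of the basket’s 64 pixel writes were thrown away when the apple was painted on top of them: exactly the wasted effort that grows so troublesome once a scene holds hundreds or thousands of objects.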

Enter the Z-buffer. Under this approach, every object is rendered right away as soon as it comes down the pipeline, used to build the appropriate part of the raster of colored pixels that, once completed, will be sent to the monitor screen as a single frame. But there comes an additional wrinkle in the form of the Z-buffer itself: a separate, parallel raster containing not the color of each pixel but its distance from the camera. Before the GPU adds an entry to the raster of pixel colors, it compares the distance of that pixel from the camera with the number in that location in the Z-buffer. If the current distance is less than the one already found there, it knows that the pixel in question should be overwritten in the main raster and that the Z-buffer raster should be updated with that pixel’s new distance from the camera. Ditto if the Z-buffer contains a null value, indicating no object has yet been drawn at that pixel. But if the current distance is larger than the (non-null) number already found there, the GPU simply moves on without doing anything more, confident in the knowledge that what it had wanted to draw should actually be hidden by what it has already drawn.
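The same toy world can be rendered with a Z-buffer, following the description above step by step. This is a minimal sketch under the same simplifying assumption (one depth per shape, stored here as a plain `(name, depth, pixels)` tuple), and `zbuffer_render` is a hypothetical name. It uses `None` as the “null” entry, as the text describes; real hardware typically clears the buffer to the farthest representable depth instead, which has the same effect.

```python
def zbuffer_render(shapes, width, height):
    """Draw shapes in whatever order they arrive, with no sorting.
    Each shape is a (name, depth, pixels) tuple. Returns the frame and
    the number of pixel writes performed."""
    color = [[None] * width for _ in range(height)]
    zbuf = [[None] * width for _ in range(height)]  # None: nothing drawn here yet
    writes = 0
    for name, depth, pixels in shapes:
        for x, y in pixels:
            stored = zbuf[y][x]
            # Draw only if nothing is here yet or the new pixel is nearer.
            if stored is None or depth < stored:
                color[y][x] = name
                zbuf[y][x] = depth
                writes += 1
            # Otherwise the new pixel is hidden, so skip it.
    return color, writes


def rect(x0, y0, x1, y1):
    """All pixels in the half-open rectangle [x0, x1) x [y0, y1)."""
    return {(x, y) for x in range(x0, x1) for y in range(y0, y1)}


basket = ("basket", 10.0, rect(0, 0, 8, 8))
apple = ("apple", 5.0, rect(2, 2, 6, 6))
front_first, w1 = zbuffer_render([apple, basket], 8, 8)
back_first, w2 = zbuffer_render([basket, apple], 8, 8)
print(front_first == back_first, w1, w2)  # True 64 80
```

The finished frame comes out identical whichever order the shapes arrive in, which is what lets the pipeline keep flowing without a sorting stage. The write count, however, depends on luck: when the far object happens to arrive first, its hidden pixels are still drawn and later overwritten.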

There are plenty of occasions when the same pixel is drawn over twice — or many times — before reaching the screen even under this scheme, but it is nevertheless still vastly more efficient than the Painter’s Algorithm, because it keeps objects flowing through the pipeline steadily, with no hiccups caused by lengthy sorting operations. Z-buffering support was reportedly a last-minute addition to the Vérité chipset, and it showed. Turning depth-sorting on for 100-percent realistic rendering on these chips cut their throughput almost in half; the Voodoo chipset, by contrast, just said “No worries!” and kept right on trucking. This was an advantage of titanic proportions. It eventually emerged that the programmers at Rendition had been able to get Quake running acceptably on the Vérité chips only by kludging together their own depth-sorting algorithms in software. With Voodoo, programmers wouldn’t have to waste time with stuff like that.

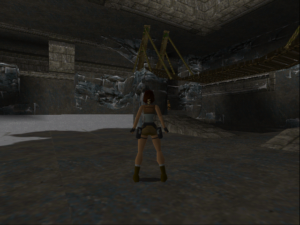

But surprisingly, the game that blew open the doors for the Voodoo chipset wasn’t Quake or anything else from id. It was rather a little something called Tomb Raider, from the British studio Core Design, a game which used a behind-the-back third-person perspective rather than the more typical first-person view — the better to appreciate its protagonist, the buxom and acrobatic female archaeologist Lara Croft. In addition to Lara’s considerable assets, Tomb Raider attracted gamers with its unprecedentedly huge and wide-open 3D environments. (It will be the subject of my next article, for those interested in reading more about its massive commercial profile and somewhat controversial legacy.)

In November of 1996, when Tomb Raider had been out for less than a month, Core put a Voodoo patch for it up on their website. Gamers were blown away. “It’s a totally new game!” gushed one on Usenet. “It was playable but a little jerky without the patch, but silky smooth to play and beautiful to look at with the patch.” “The level of detail you get with the Voodoo chip is amazing!” enthused another. Or how about this for a ringing testimonial?

I had been playing the regular Tomb Raider on my PC for about two weeks before I got the patch, with about ten people seeing the game, and not really saying anything regarding how amazing it was. When I got the accelerated patch, after about four days, every single person who has seen the game has been in awe watching the graphics and how smooth [and] lifelike the movement is. The feel is different, you can see things much more clearly, it’s just a more enjoyable game now.

Tomb Raider became the biggest hit of the 1996 holiday season, and tens if not hundreds of thousands of Voodoo-based 3D cards joined it under Christmas trees.

In January of 1997, id released GLQuake, a new version of that game that supported the Voodoo chipset. In telling contrast to the Vérité-powered vQuake, which had been coded by Rendition’s programmers, GLQuake had been taken on by John Carmack as a personal project. The proof was in the pudding; this Quake ran faster and looked better than either of the previous ones. Running on a machine with a 200 MHz Intel Pentium processor and a Voodoo card, GLQuake could manage 70 frames per second, compared to 41 frames for the software-rendered version, whilst appearing much more realistic and less pixelated.

One last stroke of luck put the finishing touch on 3Dfx’s destiny of world domination: the price of memory dropped precipitously, thanks to a number of new RAM-chip factories that came online all at once in East Asia. (The factories had been built largely to feed the memory demands of Windows 95, the straw that was stirring the drink of the entire computer industry.) The Voodoo chipset required 4 MB of memory to operate effectively — an appreciable quantity in those days, and a big reason why the cards that used it tended to cost almost twice as much as those based on the Vérité chips, despite lacking the added complications and expense of 2D support. But with the drop in memory prices, it suddenly became practical to sell a Voodoo card for under $200. Rendition could also lower their prices somewhat thanks to the memory windfall, of course, but at these lower price points the dollar difference wasn’t as damaging to 3Dfx. After all, the Voodoo cards were universally acknowledged to be the class of the industry. They were surely worth paying a little bit of a premium for. By the middle of 1997, the Voodoo chipset was everywhere, the Vérité one left dead at the side of the road. “If you want full support for a gamut of games, you need to get a 3Dfx card,” wrote Computer Gaming World.

These were heady times at 3Dfx, which had become almost overnight the most hallowed name in hardcore action gaming outside of id Software, all whilst making an order of magnitude more money than id, whose business model under John Carmack was hardly fine-tuned to maximize revenues. In a comment he left recently on this site, reader Captain Kal said that, when it comes to 3D gaming in the late 1990s, “one company springs to my mind without even thinking: 3Dfx. Yes, we also had 3D solutions from ATI, NVIDIA, or even S3, but Voodoo cards created the kind of dedication that I hadn’t seen since the Amiga days.” The comparison strikes me as thoroughly apropos.

3Dfx brought in a high-profile CEO named Greg Ballard, formerly of Warner Music and the videogame giant Capcom, to oversee a smashingly successful initial public offering in June of 1997. He and the three thirty-something founders were the oldest people at the company. “Most of the software engineers were [in their] early twenties, gamers through and through, loved games,” says Scott Sellers. “Would code during the day and play games at night. It was a culture of fun.” Their offices stood at the eighth hole of a golf course in Sunnyvale, California. “We’d sit out there and drink beer,” says Ross Smith. “And you’d have to dodge incoming golf balls a bit. But the culture was great.” Every time he came down for a visit, says their investing angel Gordon Campbell,

they’d show you something new, a new demo, a new mapping technique. There was always something. It was a very creative environment. The work hard and play hard thing, that to me kind of was Silicon Valley. You went out and socialized with your crew and had beer fests and did all that kind of stuff. And a friendly environment where everybody knew everybody and everybody was not in a hierarchy so much as part of the group or the team.

I think the thing that was added here was, it’s the gaming industry. And that was a whole new twist on it. I mean, if you go to the trade shows, you’d have guys that would show up at our booth with Dracula capes and pointed teeth. I mean, it was just crazy.

Gary Tarolli, Scott Sellers, and Greg Ballard do battle with a dangerous houseplant. The 1990s were wild and crazy times, kids…

While the folks at 3Dfx were working hard and playing hard, an enormously consequential advancement in the field of software was on the verge of transforming the computer-games industry. As I noted previously, in 1996 most hardcore action games were still being released for MS-DOS. In 1997, however, that changed in a big way. With the exception of only a few straggling Luddites, game developers switched over to Windows 95 en masse. Quake had been an MS-DOS game; Quake II, which would ship at the end of 1997, ran under Windows. The same held true for the original Tomb Raider and its 1997 sequel, as it did for countless others.

Gaming was made possible on Windows 95 by Microsoft’s DirectX libraries, which finally let programmers do everything in Windows that they had once done in MS-DOS, with only a slight speed penalty if any, all while giving them the welcome luxury of hardware independence. That is to say, all of the fiddly details of disparate video and sound cards and all the rest were abstracted away into Windows device drivers that communicated automatically with DirectX to do the needful. It was an enormous burden lifted off of developers’ shoulders. Ditto gamers, who no longer had to futz about for hours with cryptic “autoexec.bat” and “config.sys” files, searching out the exact combination of arcane incantations that would allow each game they bought to run optimally on their precise machine. One no longer needed to be a tech-head simply to install a game.

In its original release of September 1995, the full DirectX suite consisted of DirectDraw for 2D pixel graphics, DirectSound for sound and music, DirectInput for managing joysticks and other game-centric input devices, and DirectPlay for networked multiplayer gaming. It provided no support for doing 3D graphics. But never fear, Microsoft said: 3D support was coming. Already in February of 1995, they had purchased a British company called RenderMorphics, the creator of Reality Lab, a hardware-agnostic 3D library. As promised, Microsoft added Direct3D to the DirectX collection with the latter’s 2.0 release, in June of 1996.

But, as the noted computer scientist Andrew Tanenbaum once said, “the nice thing about standards is that you have so many to choose from.” For the next several years, Direct3D would compete with another library serving the same purpose: a complete, hardware-agnostic Windows port of SGI’s OpenGL, whose most prominent booster was no less a leading light than John Carmack. Direct3D would largely win out in the end among game developers despite Carmack’s endorsement of its rival, but we need not concern ourselves overmuch with the details of that tempest in a teacup here. Suffice it to say that even the most bitter partisans on one side of the divide or the other could usually agree that both Direct3D and OpenGL were vastly preferable to the bad old days of chipset-specific 3D games.

Unfortunately for them, 3Dfx, rather feeling their oats after all of their success, made in response to these developments the first of a series of bad decisions that would cause their time at the top of the 3D-graphics heap to be a relatively short one.

Like all of the others, the Voodoo chipset could be used under Windows with either Direct3D or OpenGL. But there were some features on the Voodoo chips that the current implementations of those libraries didn’t support. 3Dfx was worried, reasonably enough on the face of it, about a “least-common-denominator effect” which would cancel out the very real advantages of their 3D chipset and make one example of the breed more or less as good as any other. However, instead of working with the folks behind Direct3D and OpenGL to get support for the Voodoo chips’ special features into those libraries, they opted to release a Windows version of GLide, and to strongly encourage game developers to keep working with it instead of either of the more hardware-agnostic alternatives. “You don’t want to just have a title 80 percent as good as it could be because your competitors are all going to be at 100 percent,” they said pointedly. They went so far as to start speaking of Voodoo-equipped machines as a whole new platform unto themselves, separate from more plebeian personal computers.

It was the talk and actions of a company that had begun to take its own press releases a bit too much to heart. But for a time 3Dfx got away with it. Developers coded for GLide in addition to or instead of Direct3D or OpenGL, because you really could do a lot more with it and because the cachet of the “certified” 3Dfx logo that using GLide allowed them to put on their boxes really was huge.

In March of 1998, the first cards with a new 3Dfx chipset, known as Voodoo2, began to appear. Voodoo2 boasted twice the overall throughput of its predecessor, and could handle a screen resolution of 800 X 600 instead of just 640 X 480; you could even join two of the new cards together to get even better performance and higher resolutions. This latest chipset only seemed to cement 3Dfx’s position as the class of their field.

The bottom line reflected this. 3Dfx was, in the words of their new CEO Greg Ballard, “a rocket ship.” In 1995, they earned $4 million in revenue; in 1996, $44 million; in 1997, $210 million; and in 1998, their peak year, $450 million. And yet their laser focus on selling the Ferraris of 3D acceleration was blinding Ballard and his colleagues to the potential of 3D Toyotas, where the biggest money of all was waiting to be made.

Over the course of the second half of the 1990s, 3D GPUs went from being exotic pieces of kit known only to hardcore gamers to being just another piece of commodity hardware found in almost all computers. 3Dfx had nothing to do with this significant shift. Instead they all but ignored this so-called “OEM” (“Original Equipment Manufacturer”) side of the GPU equation: chipsets that weren’t the hottest or the sexiest on the market, but that were cheap and easy to solder right onto the motherboards of low-end and mid-range machines bearing such unsexy nameplates as Compaq and Packard Bell. Ironically, Gordon Campbell had made a fortune with Chips & Technologies selling just such commodity-grade 2D graphics chipsets. But 3Dfx was obstinately determined to fly above the OEM segment, offering “premium” products only. “It doesn’t matter if 20 million people have one of our competitors’ chips,” said Scott Sellers in 1997. “How many of those people are hardcore gamers? How many of those people are buying games?” “I can guarantee that 100 percent of 3Dfx owners are buying games,” chimed in a self-satisfied-sounding Gary Tarolli.

The obvious question to ask in response was why it should matter to 3Dfx how many games — or what types of games — the users of their chips were buying, as long as they were buying gadgets that contained their chips. While 3Dfx basked in their status as the hardcore gamer’s favorite, other companies were selling many more 3D chips, admittedly at much less of a profit on a chip-per-chip basis, at the OEM end of the market. Among these was a firm known as NVIDIA, which had been founded on the back of a napkin in a Denny’s diner in 1993. NVIDIA’s first attempt to compete head to head with 3Dfx at the high end was underwhelming at best: released well after the Voodoo2 chipset, the RIVA TNT ran so hot that it required a noisy onboard cooling fan, and yet still couldn’t match the Voodoo2’s performance. By that time, however, NVIDIA was already building a lucrative business out of cheaper, simpler chips on the OEM side, even as they were gaining the wisdom they would need to mount a more credible assault on the hardcore-gamer market. In late 1998, 3Dfx finally seemed to be waking up to the fact that they would need to reach beyond the hardcore to continue their rise, when they released a new chipset called Voodoo Banshee which wasn’t quite as powerful as the Voodoo2 chips but could do conventional 2D as well as 3D graphics, meaning its owners would not be forced to buy a second video card just in order to use their computers.

But sadly, they followed this step forward with an absolutely disastrous mistake. You’ll remember that prior to this point 3Dfx had sold their chips only to other companies, who then incorporated them into add-on boards of their own design, in the same way that Intel sold microprocessors to computer makers rather than directly to consumers (aside from the build-your-own-rig hobbyists, that is). This business model had made sense for 3Dfx when they were cash-strapped and hadn’t a hope of building retail-distribution channels equal to those of the established board makers. Now, though, they were flush with cash, and enjoyed far better name recognition than the companies that made the boards which used their chips; even the likes of Creative Labs, who had long since dropped Rendition and were now selling plenty of 3Dfx boards, couldn’t touch them in terms of prestige. Why not cut out all these middlemen by manufacturing their own boards using their own chips and selling them directly to consumers with only the 3Dfx name on the box? They decided to do exactly that with their third state-of-the-art 3D chipset, the predictably named Voodoo3, which was ready in the spring of 1999.

Those famous last words apply: “It seemed like a good idea at the time.” With the benefit of hindsight, we can see all too clearly what a terrible decision it actually was. The move into the board market became, says Scott Sellers, the “anchor” that would drag down the whole company in a rather breathtakingly short span of time: “We started competing with what used to be our own customers” — i.e., the makers of all those earlier Voodoo boards. Then, too, 3Dfx found that the logistics of selling a polished consumer product at retail, from manufacturing to distribution to advertising, were much more complex than they had reckoned with.

Still, they might — just might — have been able to figure it all out and make it work, if only the Voodoo3 chipset had been a bit better. As it was, it was an upgrade to be sure, but not quite as much of one as everyone had been expecting. In fact, some began to point out now that even the Voodoo2 chips hadn’t been that great a leap: they too were better than their predecessors, yes, but that was more down to ever-falling memory prices and ever-improving chip-fabrication technologies than any groundbreaking innovations in their fundamental designs. It seemed that 3Dfx had started to grow complacent some time ago.

NVIDIA saw their opening and made the most of it. They introduced a new line of their own, called the TNT2, which outdid its 3Dfx competitor in at least one key metric: it could do 24-bit color, giving it almost 17 million shades of onscreen nuance, compared to the 16-bit color of the Voodoo3, with just over 65,000. For the first time, 3Dfx’s chips were not the unqualified, undisputed technological leaders. To make matters worse, NVIDIA had been working closely with Microsoft in exactly the way that 3Dfx had never found it in their hearts to do, ensuring that every last feature of their chips was well-supported by the increasingly dominant Direct3D libraries.
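Those two figures fall straight out of the bit counts: 2 to the 24th power is 16,777,216 and 2 to the 16th is 65,536. The sketch below checks the arithmetic and shows what squeezing color into 16 bits costs. It assumes the common RGB565 layout (5 bits of red, 6 of green, 5 of blue) as an illustrative stand-in for a 16-bit frame buffer; the helper names are hypothetical and not taken from any 3Dfx or NVIDIA documentation.

```python
def color_count(bits_per_pixel):
    """Number of distinct values a pixel of this many bits can hold."""
    return 2 ** bits_per_pixel


def pack_rgb565(r, g, b):
    """Squeeze 8-bit-per-channel color into 16 bits (assumed RGB565 layout)
    by discarding the low-order bits of each channel."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def unpack_rgb565(value):
    """Expand a 16-bit RGB565 value back to 8 bits per channel, filling the
    missing low bits by repeating each channel's top bits."""
    r = (value >> 11) & 0x1F
    g = (value >> 5) & 0x3F
    b = value & 0x1F
    return (r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2)


print(color_count(24), color_count(16))  # 16777216 65536
# Two slightly different 24-bit shades collapse into one 16-bit value:
print(pack_rgb565(200, 100, 50) == pack_rgb565(203, 101, 52))  # True
```

Distinct shades merging like this is why smooth gradients such as skies and fog could show visible bands at 16 bits.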

And then, as the final nail in the coffin, there were all those third-party board makers 3Dfx had so rudely jilted when they decided to take over that side of the business themselves. These had nowhere left to go but into NVIDIA’s welcoming arms. And needless to say, these business partners spurned were highly motivated to make 3Dfx pay for their betrayal.

NVIDIA was on a roll now. They soon came out with yet another new chipset, the GeForce 256, which had a “Transform & Lighting” (T&L) engine built in, a major conceptual advance. And again, the new technology was accessible right from the start through Direct3D, thanks to NVIDIA’s tight relationship with Microsoft. Meanwhile the 3Dfx chips still needed GLide to perform at their best. With those chips’ sales now plummeting, more and more game developers decided the oddball library just wasn’t worth the trouble anymore. By the end of 1999, a 3Dfx death spiral that absolutely no one had seen coming at the start of the year was already well along. NVIDIA was rapidly sewing up both the high end and the low end, leaving 3Dfx with nothing.

In 2000, NVIDIA continued to go from strength to strength. Their biggest challenger at the hardcore-gamer level that year was not 3Dfx, but rather ATI, who arrived on the scene with a new architecture known as Radeon. 3Dfx attempted to right the ship with a two-pronged approach: a Voodoo4 chipset aimed at the long-neglected budget market, and a Voodoo5 aimed at the high end. Both had potential, but the company was badly strapped for cash by now, and couldn’t afford to give them the launch they deserved. In December of 2000, 3Dfx announced that they had agreed to sell out to NVIDIA, who thought they had spotted some bits and bobs in their more recent chips that they might be able to make use of. And that, as they say, was that.

3Dfx was a brief-burning comet by any standard, a company which did everything right up to the instant when someone somewhere flipped a switch and it suddenly started doing everything wrong instead. But whatever regrets Gary Tarolli, Scott Sellers, and Ross Smith may have about the way it all turned out, they can rest secure in the knowledge that they changed not just gaming but computing in general forever. Their vanquisher NVIDIA had revenues of almost $27 billion last year, on the strength of GPUs that are as far beyond the original Voodoo chips as an F-35 is beyond the Wright Brothers’ flier, and that stand at the forefront not just of 3D graphics but of a whole new trend toward “massively parallel” computing.

And yet even today, the 3Dfx name and logo can still send a little tingle of excitement running down the spines of gamers of a certain age, just as that of the Amiga can among some just slightly older. For a brief few years there, over the course of one of the most febrile, chaotic, and yet exciting periods in all of gaming history, having a Voodoo card in your computer meant that you had the best graphics money could buy. Most of us wouldn’t want to go back to the days of needing to constantly tinker with the innards of our computers, of dropping hundreds of dollars on the latest and the greatest and hoping that publishers would still be supporting it in six months, of poring over magazines trying to make sense of long lists of arcane bullet points that seemed like fragments of a particularly esoteric PhD thesis (largely because they originally were). No, we wouldn’t want to go back; those days were kind of ridiculous. But that doesn’t mean we can’t look back and smile at the extraordinary technological progression we were privileged to witness over such a disarmingly short period of time.


(Sources: the books Renegades of the Empire: How Three Software Warriors Started a Revolution Behind the Walls of Fortress Microsoft by Michael Drummond, Masters of DOOM: How Two Guys Created an Empire and Transformed Pop Culture by David Kushner, and Principles of Three-Dimensional Computer Animation by Michael O’Rourke. Computer Gaming World of November 1995, January 1996, July 1996, November 1996, December 1996, September 1997, October 1997, November 1997, and April 1998; Next Generation of October 1997 and January 1998; Atomic of June 2003; Game Developer of December 1996/January 1997 and February/March 1997. Online sources include “3Dfx and Voodoo Graphics — The Technologies Within” at The Overclocker, former 3Dfx CEO Greg Ballard’s lecture for Stanford’s Entrepreneurial Thought Leader series, the Computer History Museum’s “oral history” with the founders of 3Dfx, Fabien Sanglard’s reconstruction of the workings of the Vérité chipset and the Voodoo 1 chipset, “Famous Graphics Chips: 3Dfx’s Voodoo” by Dr. Jon Peddie at the IEEE Computer Society’s site, and “A Fallen Titan’s Final Glory” by Joel Hruska at the long-defunct Sudhian Media. Also, the Usenet discussions that followed the release of the 3Dfx patch for Tomb Raider and Nicol Bolas’s crazily detailed reply to the Stack Exchange question “Why Do Game Developers Prefer Windows?”.)

lee

May 19, 2023 at 5:10 pm

Missing a word – “…a 3dfx death spiral that absolutely no had seen coming …”

One quibble: I remember the Voodoo 2 being a _tremendous_ jump in performance over the V1, at least going by the gushing copy at VoodooExtreme.com and Operation 3dfx. Though perhaps it’s just those damned rose-tinted lenses again.

(At one point when the cards were on their way out, I acquired a second Voodoo 2 and got to experience the face-meltingness of the original SLI implementation, back when it still stood for “scanline interleave.” Though it was long enough after the V2’s debut that single-card solutions like the Geforce 256 had eclipsed it, I still remember the tremendous sense of satisfaction at slotting that second Voodoo2 in and hooking up the SLI cable. Good times, man. Good times.)

Sarah Walker

May 19, 2023 at 5:14 pm

V2 was particularly effective in games that supported multitexturing; it was sold in a configuration that included a second texture unit chip & memory, something that was possible on Voodoo 1 but was never implemented on the consumer boards. On games that supported this – mostly Quake 2 and Unreal in 1998 – it doubled performance _again_, giving you nearly 4x Voodoo 1 performance on a single Voodoo 2.

lee

May 19, 2023 at 5:23 pm

Thanks Sarah—good details, and that jives with what I remember. Cheers!!

Jimmy Maher

May 19, 2023 at 7:07 pm

Thanks! The Voodoo2 chipset was certainly an advance, but I think there was a fair amount of hyperbole going around about it. It was, as you say, a very heady time.

Sarah Walker

May 19, 2023 at 5:12 pm

> Throughout 1995, somebody from 3dx was guaranteed

3dfx

>The battle lines were drawn between Rendition and 3dfx. But sadly for the former, it quickly emerged that their chipset had one especially devastating weakness in comparison to its rival: it didn’t provide hardware support for Z-buffering

As best as I can tell (I started writing an emulator for the Verite a while ago, but never finished it), the Rendition 1000 chip _does_ have Z-buffering in hardware. It’s just that, in common with most of the other early 3D chips, it’s pretty sluggish. Z-buffering roughly doubles the required memory bandwidth for a given rendering performance point, and the 64-bit EDO memory buses on most of these cards weren’t up to the job.

3Dfx had two advantages here – texturing was moved to a second 64-bit bus, so that didn’t impact (much) on overall rendering performance, and Voodoo also used the fastest EDO memory available at the time; 35ns as opposed to the more common 60 or 70ns chips. Hence they were essentially able to outspend their way out of this common bottleneck.

Rendition failed due to their second generation chip being late to market – allegedly due to a design failure from their silicon partner that took months to track down. By the time it was released it was okay but unexceptional and didn’t sell well enough for their subsequent designs to hit production.

>3dfx had nothing to do with this significant shift. Instead they all but ignored this so-called “OEM” (“Original Equipment Manufacturer”) side of the GPU equation

They didn’t totally ignore this – Banshee was intended as an OEM product. It just got to production too late for the late 1998 OEM refresh and ended up pushed to retail instead. Voodoo 3 did get some OEM use, and I believe the “Velocity” brand was also intended for OEMs.

>a Voodoo4 chipset aimed at the long-neglected budget market, and a Voodoo5 aimed at the high end

These are the same chip, the VSA-100 – Voodoo 4 was a single chip configuration, Voodoo 5 was a dual chip configuration. A noticeable improvement, but they arrived 6 months too late to be truly relevant.

Maybe there should be some mention of Rampage, their long term next gen project that was first announced in 1997 but never hit the market (though it was probably about 6 months off when they closed)? It’s probably the main reason their post-V2 designs were so underwhelming.

I can ramble on about 3Dfx and the early 3D card designs and market for days :)

Lt. Nitpicker

May 19, 2023 at 6:15 pm

A quote from a Rendition engineer in a Fabien Sanglard article says Z-Buffering was done in software.

https://fabiensanglard.net/vquake/

Sarah Walker

May 19, 2023 at 6:20 pm

That doesn’t match what I discovered emulating the thing. I suspect he is misremembering.

Jimmy Maher

May 19, 2023 at 7:40 pm

It looks like this was indeed a rare mistake on the part of Fabien Sanglard. Based on an article at Vintage 3D (https://vintage3d.org/verite1.php), it seems that the Rendition chips did have support for Z-buffering, but it was something of a last-minute addition and wasn’t very efficient. Edits made. Thanks!

I do appreciate the added information, but I think I’m fairly comfortable eliding those other details in the interest of readability. I did place 3dfx’s haughtiness about the OEM market in the context of 1997 rather than 1998, and, since Voodoo4 and Voodoo5 were different combinations of chips, one can call them separate chipsets without being wrong, strictly speaking. ;)

Lt. Nitpicker

May 19, 2023 at 7:58 pm

Technically, the implementation of Z-Buffering on the V1000 is software, but it’s done with software running on the card as microcode (like most 3D operations on the card). When you say software, however, most people think “on the (host) CPU” instead of on the graphics card’s processor. I feel that going deep into that is not worth it IMO, because for your narrative it doesn’t matter why the Verite is slower than Voodoo, only that it is slower.

Sarah Walker

May 19, 2023 at 8:22 pm

I’ve just had a quick scan over the V1000 microcode for both the generic Speedy3D API and the custom Quake port. Quake doesn’t touch the pixel engine’s Z buffer related registers other than to turn them off, matching Stefan Podell’s testimony that vQuake doesn’t use any hardware Z buffering. Speedy3D on the other hand does have code to write to the Z buffer registers, suggesting that using hardware Z buffering is supported (if slow) and therefore that it is in fact supported by hardware.

Agree that none of this really changes the narrative!

Jeff Thomas

June 1, 2023 at 6:30 pm

If I remember correctly Quake was written with a software Z buffer by Carmack. If the Vérité buffer used slow memory and an 8 bit bus it’s entirely possible that Carmack’s buffer was faster.

Sarah Walker

May 20, 2023 at 8:18 am

Fair enough on the 1997 OEM front, though one could consider the ill thought out Voodoo Rush disaster as at least partly aimed at that sector.

The other factor which had a knock on effect on 3dfx in 1997 and beyond was the aborted deal with Sega for what became the Dreamcast. 3dfx did benefit monetarily from an out of court settlement, but it would be a major distraction for them which most likely did impact on future product development.

Andy Bailey

May 22, 2023 at 1:44 pm

The Dreamcast deal is possibly the only significant note that’s missing from the article.

Whilst that wouldn’t have necessarily changed their fortunes in the end, there’s a certain amount of kudos and free marketing that can be gained by having your brand on a box (or even on a console itself).

I’d wager that someone seeing some of the great graphics of something like the Gamecube, then noticing the ATI sticker on the console, would bear the brand in mind when they came to buy a new video card for their PC.

Lisa H.

May 19, 2023 at 6:57 pm

And yet even today, the 3dfx name and logo can still send a little prickle of excitement running down the spines of gamers of a certain age, just as that of the Amiga can among some just slightly older.

I’m in this Amiga age group, but I know what you mean about Voodoo/3dfx. The first Voodoo card we got was Golly That’s Sure Pretty Sexy at the time.

such unsexy marquees as Compaq and Packard Bell.

Should this be “marques” with one E?

Jimmy Maher

May 19, 2023 at 7:44 pm

Yes, but I don’t think it was the right word anyway on second thought. ;) Thanks!

Lt. Nitpicker

May 19, 2023 at 8:54 pm

>released at the same time as the Voodoo2 chipset, the RIVA 128ZX filled two slots in a computer, had a fan that sounded like a lawnmower, and still couldn’t match the Voodoo2’s performance.

I think you mixed up the RIVA 128ZX with the much later Geforce FX 5800, which had a noisy and at the time unusual dual slot cooler and couldn’t outperform its competitor, the Radeon 9700. The RIVA 128ZX was basically a normal OEM refresh of their already fairly successful RIVA 128 and had no notable issues over its predecessor. I’d cut the mention or mention the RIVA TNT instead, which shipped many months (Wikipedia says June 1998, but nVIDIA’s press releases and most media sources say September) after the Voodoo 2, ran hot enough that many cards using it included a fan, (which was unusual for a consumer graphics card at the time) and even then, it was slightly slower than a single Voodoo 2.

Salvation122

May 22, 2023 at 1:32 pm

I’m probably one of very few people that would say this but I have nearly as much affection for the Radeon 9700 as anything 3dfx put out. That thing was an absolute monster of a card.

Dan V.

May 23, 2023 at 12:05 pm

I think more would agree with you than you realize. The Radeon 9700 put the Radeon brand on the map for a lot of people.

Erik

May 19, 2023 at 10:02 pm

vQuake just uses the same span-sorting that the software renderer of quake uses, and draws the output of that stage. It does exactly what software quake does, z-buffer writes for world geometry, and z-buffer writes+compares for objects rendered on top of the world geometry.

I wouldn’t exactly call it a kludge, but it is a workaround for the poor z-compare performance of the V1000.

Alex

May 20, 2023 at 5:46 am

The last sentence is so spot on! I remember every single name in this article as well as the not-impressed-reviews for the late 3dfx-cards, at least I think I do. What a mess this all was. The shadows of those times can even reach over today, when certain games from that period refuse to run properly on modern chipsets without some fiddling around. No, I certainly don't wanna live through that time again as a customer, but from a technical point of view, it was magical, for the lack of better words.

John

May 20, 2023 at 11:31 am

Somehow I have spent decades believing that the “gl” in glQuake was a reference to OpenGL. In my defense, I spent most of the Voodoo era without either a 3D accelerator card or a PC. I’m not even sure where or why I heard about glQuake.

In my head, the introduction of the 3D accelerator is the beginning of The Bad Times for PC gaming. The games themselves are fine for the most part. Rather, it’s the necessity and the expense of buying a discrete GPU that I resent. I would happily play older games capable of running on integrated graphics, but games don’t always render properly on integrated graphics. I’ve seen more than one game with incorrectly translucent or even invisible textures when run on a GPU-less machine.

I do not claim that my resentment is entirely rational. It has always been expensive to play the latest games. It just seems strange and counter-intuitive to me that I can build or buy a thoroughly functional computer for what amounts to a pittance but that the computer cannot play contemporary games unless I also buy an expansion card that costs double or triple what the rest of the computer did.

Sarah Walker

May 20, 2023 at 1:26 pm

The “gl” in glQuake _is_ a reference to OpenGL. glQuake ran on 3dfx hardware via an OpenGL minidriver, basically a wrapper around Glide.

John

May 20, 2023 at 10:19 pm

Ah. When Jimmy said “In January of 1997, id released GLQuake, a new version of that game that used the Voodoo chipset,” I got the impression that the “gl” was a reference to Glide rather than OpenGL. In retrospect, it makes sense that Carmack would favor proper OpenGL.

Supernaut

May 20, 2023 at 12:00 pm

Another great article! I spotted one typo: “It’s only drawback”

Jimmy Maher

May 20, 2023 at 3:23 pm

Thanks!

Richard

May 20, 2023 at 12:34 pm

Very enjoyable. Some typos:

No, we wouldn’t to go back -> we wouldn’t want to

and sound cards and the all the rest -> and all the rest

was the verge of transforming the computer-games industry -> was on the verge

Quake ran faster and look better -> looked better

more typical-first person -> more typical first-person

number of objects involved are few -> is few

when it comes to 3D gaming in the late 1990s -> when it came to

Jimmy Maher

May 20, 2023 at 3:26 pm

Thanks!

Leo Vellés

May 20, 2023 at 2:17 pm

“That is to say, all of the fiddly details of disparate video and sound cards and the all the rest were abstracted…”.

A double “the” there Jimmy

William Hern

May 20, 2023 at 6:34 pm

Another fascinating and informative article Jimmy.

However I must mildly chide you for writing the following:

‘… as a wag once said, “the nice thing about standards is that there are so many of them to choose from.” ‘

The “wag” you quote is noted computer scientist Andrew Tanenbaum and there is much more to him than just his sense of humour! Professor Tanenbaum has written many books, most of them regarded as seminal texts in Computer Science. He also wrote the MINIX operating system – that and his book “Operating Systems: Design and Implementation” were two of the key inspirations for Linus Torvalds to create the Linux kernel.

The quote you used comes from his “Computer Networks” book. The full quotation reads: “The nice thing about standards is that you have so many to choose from; furthermore, if you do not like any of them, you can just wait for next year’s model.”

Jimmy Maher

May 20, 2023 at 7:22 pm

I didn’t know whom that old chestnut originated with. Thanks!

Kim S.

May 21, 2023 at 4:40 am

The Voodoo series had the speed and the 3D features, but they did not have good image quality. Upon “upgrading” my Matrox Mystique with a Voodoo 2 in 1998 I was shocked by the image downgrade.

The external, analog pass-through solution made colors noticeably duller and introduced artifacts, even on 2D images like the Windows desktop.

In early 3D games, much of the charm was in the clearly visible pixels with their strong, distinct colors. By comparison, the washed-out, blurry, filtered mess the Voodoo generated looked awful, in my opinion.

I returned the Voodoo “downgrade” and looked elsewhere.

The Matrox G200, also in 1998, with its 32-bit vibrant colors, was a significant upgrade in 3D image quality. It became my 3D jaw-dropping moment, unlike the Voodoo.

For the record, I used a Sony Trinitron 19-inch CRT monitor. I guess on some “lesser” screens the image quality differences on these cards might not have been as visible.

Fform

May 22, 2023 at 3:45 am

The voodoo2 had a max resolution of 800×600, so on 19” it would definitely look terrible.

My first real 3d card was a TNT2, on a 19” Trinitron with a max resolution of 1600×1200 in 1999. It was amazing.

_RGTech

December 13, 2025 at 7:56 am

2D image quality was also the main reason why I sold my Voodoo 1: after acquiring a 15″ LCD in 1999, the pixel sync was a mess. Either the analog passthrough or other interferences reduced the image quality just like you used a very cheap cable, and there was not much I could do (changing slots in an already full tower was not easy, and the cable was short).

I replaced it with a passive-cooled TNT2, which was really capable for the newer games and eliminated the passthrough issues… it just never captured the old GLide-magic of The Need For Speed 2 SE any more. (I should’ve kept the Voodoo just for those few games! Or, if nothing else, for today’s resale value…!)

It was not a resolution topic, mind you; back then you were still totally fine with low-res VGA/SVGA games – the blurring didn’t matter much, even on the LCD where it had to be upscaled somehow, as long as there was smooth action! Titles like Civ II on the other hand were great on flicker-free, pixel-perfect 1024*768.

Gnoman

May 21, 2023 at 1:06 pm

The perspective in this article is interesting to me, because economic circumstances made me miss this little sub-area entirely – my computer experience of this era was primarily with a succession of machines built from parts handed down from acquaintances or literally found in the garbage, and an expensive 3D card was so far beyond my reach that it might not have existed.

This means that my very strong feelings for 3Dfx are entirely negative. In the late stages of the era, I was constantly annoyed that the game I was playing (in software mode) often looked nothing like the pictures on the back of the box (which were using 3Dfx), which only got worse as Glide was increasingly deprecated and the 3D cards I then had remained largely incompatible because neither DirectX or even OpenGL fully interfaced properly.

IIRC, Glide also has a lot to do with why so many excellent titles remain locked to Windows 9X unless somebody like GOG manages to get the rights, or people like Sarah Walker are willing to put the effort into providing emulation solutions.

Alex

May 22, 2023 at 5:08 am

Well, I don't know if this is something you are looking for or already aware of, but the little tool “DgVoodoo2” can sometimes be helpful when it comes to incompatibilities:

http://dege.freeweb.hu/dgVoodoo2/

Speaking of GOG, I'm wondering time and time again how they are managing to upgrade the compatibility of (certain) older games to new hardware and new versions of Windows. I really appreciate their technical competence.

Sion Fiction

January 2, 2025 at 1:09 am

I think Devs like DOSBox & NightDive might have a little something to do with it. ;)

Tim Kaiser

May 25, 2023 at 3:24 pm

“would need mount a more credible assault” -> “would need to mount a more credible assault”

Also, I got waves of nostalgia when you mentioned autoexec.bat and config.sys. I remember having to fiddle with those all the time to get games to run on my old 386 computer.

Jimmy Maher

May 25, 2023 at 3:56 pm

Thanks!

Olof

May 25, 2023 at 6:57 pm

Great article. I often say that the two biggest leaps in computers during my lifetime were the CD-ROM and the 3Dfx cards. The former immediately gave you 450x more storage than the 3.5″ discs they replaced. The latter made games (Quake is the one I specifically remember) run at 4x the resolution, with 256x the colors, transparent water and dynamic light sources, and all of it running twice as fast as before. The difference was absolutely unbelievable at the time.

Of course both the CD and the 3Dfx cards are primitive compared to today’s technology, but the progress has been much more incremental. 2x improvements rather than these 100x improvements. Perhaps the only thing I can think of that comes close to this would be the iPhone.

Chris D

May 28, 2023 at 4:34 pm

Great article! I found a typo in this sentence:

“Z-buffering support was reportedly a last-minute edition to the Vérité chipset, and it showed.”

Jimmy Maher

May 28, 2023 at 8:51 pm

Thanks!

Tim Kaiser

May 28, 2023 at 6:07 pm

FYI: I get an error when trying to submit a comment on this week’s Analog Antiquarian article. Maybe that’s why there are no comments yet?

Anyway, I found two typos on that article:

of the of the two renowned writers’ output today. -> of the two renowned writers’ output today.

called for Fifth Crusade to another novel target -> called for a Fifth Crusade to another novel target

Cheers!

Jimmy Maher

May 28, 2023 at 8:50 pm

Thanks, corrected. Do you recall what kind of error message you got?

Joshua Barrett

June 3, 2023 at 12:21 am

Two minor technical corrections:

> In January of 1997, id released GLQuake, a new version of that game that used the Voodoo chipset.

GLQuake ran on Voodoo, but wasn’t designed specifically for 3DFX cards. As other commenters have noted, the GL in GLQuake was in reference to OpenGL: after vquake, Carmack had resolved not to do any single-card ports, and strongly pushed OpenGL as the standard for hardware-independent 3D graphics over Direct3D (largely because early versions of Direct3D were not very pleasant to work with). Voodoo support was enabled with the OpenGL minidriver, which implemented just enough of OpenGL on top of GLide to make Quake run.

> Direct3D would finally win out despite Carmack’s endorsement of its rival…

This is true, but somewhat misleading, in that it implies OpenGL stopped being a going concern. Direct3D was and remains popular with developers, arguably more popular than OpenGL, but OpenGL remains relevant: on Mac and Linux systems, it was the only way to do 3D graphics for years, with its variants OpenGL ES and WebGL being the way that 3D graphics are done on mobile and the web respectively. The advent of Vulkan and WebGPU (as well as Apple’s proprietary Metal API) have changed this, but OpenGL is still definitely something people use. id themselves have never used Direct3D on PCs.

Jimmy Maher

June 3, 2023 at 4:06 pm

I’m reluctant to include too many messy details of these things in the interests of readability, but I made some modest edits. Thanks!

Alexey Romanov

October 8, 2023 at 8:11 pm

The linked SE answer says “So Microsoft purchased a bit of middleware and fashioned it into Direct3D Version 3”, not 2. Or was Direct3D 3 a part of DirectX 2.0?

Jimmy Maher

October 10, 2023 at 11:03 am

Just a mistake on Bolas’s part, I think. Direct3D was definitely first included in DirectX 2.

andym00

April 12, 2024 at 6:02 pm

As someone who lived through this, who retargetted a software only project into one that supported 3DFX, you write it so so well! I could see these last 3 parts being an amazing film. Your style of writing is so beautiful, suspenseful and always delivers. What amazing times we lived through back then..

RandomGamer

August 21, 2024 at 5:13 am

The way I remember the story of 3dfx downfall is that GLide was a better approach in more ways than one, but 3dfx missed an opportunity to forge it into an industry standard by opening it up to third party manufacturers. Second problem was that 3dfx bet bigly on anti-aliasing while the gaming industry wanted advanced shaders first.

Also, the story of the rise of 3dfx is incomplete without mentioning Unreal as the first real competition to id Software's domination.