Microsoft entered the last year of the 1980s looking toward a new decade that seemed equally rife with opportunity and danger. On the one hand, profits were up, and Bill Gates and any number of his colleagues could retire as very rich men indeed even if it all ended tomorrow — not that that outcome looked likely. The company was coming to be seen as the standard setter of the personal-computer industry, more important even than an IBM that had been gravely weakened by the PS/2 debacle and the underwhelming reception of OS/2. Microsoft Windows, now once again viewed by Gates as the keystone of his company’s future after the disappointment that had been OS/2, stood to benefit greatly from Microsoft’s new clout. Windows 2 had gained some real traction, and the upcoming Windows 3 was being talked about with mounting expectation by an MS-DOS marketplace that finally seemed to be technologically and psychologically ready for a GUI environment.

The more worrisome aspects of the future, on the other hand, all swirled around the other two most important companies in American business computing. Through most of the decade now about to pass away, Microsoft had managed to maintain cordial if not always warm relationships with both IBM and Apple — until, that is, the latter declared war by filing a copyright-infringement lawsuit against Windows in 1988. The stakes of that lawsuit were far greater than any mere monetary settlement; they were rather the very right of Windows to continue to exist. It wasn’t at all clear what Microsoft could or would do next if they lost the case and with it Windows. Meanwhile their relationship with IBM was becoming almost equally strained. Disagreements about the technical design of OS/2, along with disputes over the best way to market it, had caused Microsoft to assume the posture of little more than subcontractors working on IBM’s operating system of the future at the same time that they pushed hard on their own Windows. OS/2 and Windows, those two grand bids for the future of mainstream business computing, seemingly had to come into conflict with one another at some point. What would happen then? IBM’s reputation had unquestionably been tarnished by recent events, but at the end of the day they were still IBM, the legendary Big Blue, the most important and influential company in the history of computing to date. Was Microsoft ready to take on both Apple and IBM as full-fledged enemies?

So, the people working on Windows 3 had plenty of potential distractions to contend with as they tried to devise a GUI environment good enough to leap into the mainstream. “Just buckle down and make it as good as possible,” said Bill Gates, “and let our lawyers and business strategists deal with the distractions.” By all accounts, the Windows people managed to do just that; there’s little sign that all of the external chaos had much effect on their work.

That said, when they did raise their heads from their keyboards, they could take note of encouraging signs that Microsoft might be able to navigate through their troubles with Apple and IBM. As I described in my previous article, on March 18, 1989, Judge William Schwarzer ruled that the 1985 agreement between the two companies applied only to those aspects of Windows 2 — and by inference of an eventual Windows 3 — which had also been a part of Windows 1. Thus the 1985 agreement wouldn’t be Microsoft’s ticket to a quick victory; it appeared that they would rather have to invalidate the very premise of “visual copyright” as applied by Apple in this case in order to win. On July 21, however, Microsoft got some more positive news when Judge Schwarzer made his ruling on exactly which features of Windows 2 weren’t covered by the old agreement. He threw out no less than 250 of Apple’s 260 instances of claimed infringement, vastly simplifying the case — and vastly reducing the amount of damages which Apple could plausibly ask for. The case remained a potential existential threat to Windows, but disposing of what Microsoft’s lawyers trumpeted was the “vast bulk” of it at one stroke did give some reason to take heart. Now, what remained of the case seemed destined to grind away quietly in the background for a long, long time to come — a Sword of Damocles perhaps, but one which Bill Gates at any rate was determined not to let affect the rest of his company’s strategy. If he could make Windows a hit — a fundamental piece of the world’s computing infrastructure — while the case was still grinding on, it would be very difficult indeed for any judge to order the nuclear remedy of banning Microsoft from continuing to sell it.

Microsoft’s strategy with regard to IBM was developing along a similarly classic Gatesian line. Inveterate bets-hedger that he was, Gates wasn’t willing to cut ties completely with IBM, just in case OS/2 and possibly even PS/2 turned around and some of Big Blue’s old clout returned. Instead he was careful to maintain at least a semblance of good relations, standing ready to jump off the Windows bandwagon and back onto OS/2, if it should prove necessary. He was helped immensely in this by the unlamented departure from IBM of Bill Lowe, architect of the disastrous PS/2 strategy, an executive with whom Gates by this point was barely on speaking terms. Replacing Lowe as head of IBM’s PC division was one Jim Cannavino, a much more tech-savvy executive who trusted Gates not in the slightest but got along with him much better one-on-one, and was willing to continue to work with him for the time being.

At the Fall 1989 Comdex, the two companies made a big show of coming together — the latest in the series of distancings and rapprochements that had always marked their relationship. They trotted out a new messaging strategy that cast Windows as the partnership’s “low-end” GUI and OS/2’s Presentation Manager as the high-end GUI of the future, suitable at present only for machines with an 80386 processor and at least 4 MB of memory. (The former specification was ironic in light of all the bickering IBM and Microsoft had done in earlier years on the issue of supporting the 80286 in OS/2.) The press release stated that “Windows is not intended to be used as a server, nor will future releases contain advanced OS/2 features [some of which were only planned for future OS/2 releases at this point] such as distributed processing, the 32-bit flat memory model, threads, or long filenames.” The pair even went so far as to recommend that developers working on really big, ambitious applications for the longer-term future focus their efforts on OS/2. (“No advice,” InfoWorld magazine would wryly note eighteen months later, “could have been worse.”)

But Microsoft’s embrace of the plan seemed tentative at best even in the moment. It certainly didn’t help IBM’s comfort level when Steve Ballmer in an unguarded moment blurted out that “face it: in the future, everyone’s gonna run Windows.” Likewise, Bill Gates showed little personal enthusiasm for this idea of Windows as the cut-price, temporary alternative to OS/2 and the Presentation Manager. As usual, he was just trying to keep everyone placated while he worked out for himself what the future held. And as time went on, he seemed to find more and more to like about the idea of a Windows-centric future. Several months after the Comdex show, he got slightly drunk at a big industry dinner, and confessed to rather more than he might have intended. “Six months after Windows 3 ships,” he said, “it will have a greater market share than Presentation Manager will ever have — OS/2 applications won’t have a chance.” He further admitted to deliberately dragging his feet on updates to OS/2 in order to ensure that Windows 3.0 got all the attention in 1990.

He needn’t have worried too much on that front: press coverage of the next Windows was reaching a fever pitch, and evincing little of the skepticism that had accompanied Windows 1 and 2. Throughout 1989, rumors and even the occasional technical document leaked out of Microsoft — and not, one senses, by accident. Carefully timed grist for the rumor mill though it may have been, the news was certainly intriguing on its own merits. The press wrote that Tandy Trower, the manager who had done the oft-thankless job of bringing Windows 1 and 2 to fruition, had transferred off the team, but the team itself was growing like never before, and now being personally supervised once again by the ever-flexible Steve Ballmer, who had left Microsoft’s OS/2 camp and rejoined the Windows zealots. Ballmer had hired visual designer Susan Kare, known throughout the industry as the author of MacOS’s clean and crisp look, to apply some of the same magic to Windows.

But for those who understood Windows’s longstanding technical limitations, another piece of news was the most intriguing and exciting of all. Even before the end of 1989, Microsoft started talking openly about their plans to accomplish two things which had heretofore been considered mutually exclusive: to continue running Windows on top of hoary old MS-DOS, and yet to shatter the 640 K barrier once and for all.

It had all begun back in June of 1988, when Microsoft programmer David Weise, one of the former Dynamical Systems Research people who had proved such a boon to Windows, bumped into an old friend named Murray Sargent, a physics professor at the University of Arizona who happened to do occasional contract programming for Microsoft on the side. At the moment, he told Weise, he was working on adding new memory-management functionality to Microsoft’s CodeView debugger, using an emerging piece of software technology known as a “DOS extender,” which had been pioneered over the last couple of years by an innovative company in the system-software space called Quarterdeck Office Systems.

As I’ve had occasion to describe in multiple articles by now, the most crippling single disadvantage of MS-DOS had always been its inability to directly access more than 640 K of memory, due to its origins on the Intel 8088 microprocessor, which had a sharply limited address space. Intel’s newer 80286 and 80386 processors could run MS-DOS only in their 8088-compatible “real” mode, where they too were limited to 640 K, rather than being able to use their “protected” mode to address up to 16 MB (in the case of the 80286) or 4 GB (in the case of the 80386). Because they ran on top of MS-DOS, most versions of Windows as well had been forced to run in real mode — the sole exception was Windows/386, which made extensive use of the 80386’s virtual mode to ease some but not all of the constant headache that was memory management in the world of MS-DOS. Indeed, when he asked himself what were the three biggest aggravations which working with Windows entailed, Weise had no doubt about the answer: “memory, memory, and memory.” But now, he thought that Sargent might just have found a solution through his tinkering with a DOS extender.
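The memory ceilings in the paragraph above fall out of simple arithmetic on each chip’s number of physical address lines: 20 on the 8088, 24 on the 80286, and 32 on the 80386. The short Python sketch below is an illustration added here, not anything from Microsoft or Intel. It computes those figures and shows where 640 K comes from: on the original IBM PC, the top 384 K of the 8088’s 1 MB address space was set aside for video memory and ROMs, leaving the rest for MS-DOS and its programs.

```python
# Bytes addressable with a given number of physical address lines.
ADDRESS_LINES = {
    "8088": 20,   # 2**20 bytes = 1 MB
    "80286": 24,  # 2**24 bytes = 16 MB
    "80386": 32,  # 2**32 bytes = 4 GB
}

KB = 1024
MB = 1024 * KB
GB = 1024 * MB


def address_space_bytes(cpu: str) -> int:
    """Return how many distinct byte addresses the CPU's address bus can form."""
    return 2 ** ADDRESS_LINES[cpu]


# The IBM PC memory map reserved the top 384 K of the 8088's 1 MB for
# video memory and ROMs, which left the familiar 640 K for MS-DOS programs.
RESERVED_UPPER = 384 * KB
CONVENTIONAL = address_space_bytes("8088") - RESERVED_UPPER

if __name__ == "__main__":
    for cpu in ADDRESS_LINES:
        print(cpu, address_space_bytes(cpu) // MB, "MB")
    print("conventional memory:", CONVENTIONAL // KB, "K")
```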

It turned out that the very primitiveness of MS-DOS could be something of a saving grace. Its functions mostly dealt only with the basics of file management. Almost all of the other functions that we think of as rightfully belonging to an operating system were handled either by an extended operating environment like Windows, or not handled at all — i.e., left to the programmer to deal with by banging directly on the hardware. Quarterdeck Office Systems had been the first to realize that it should be possible to run the computer most of the time in protected mode, if only some way could be found to down-shift into real mode when there was a need for MS-DOS, as when a file on disk needed to be read from or written to. This, then, was what a DOS extender facilitated. Its code was stashed into an unused corner of memory and hooked into the function calls that were used for communicating with MS-DOS. That done, the processor could be switched into protected mode for running whatever software you liked with unfettered access to memory beyond 640 K. When said software tried to talk to MS-DOS after that, the DOS extender trapped that function call and performed some trickery: it copied any data that MS-DOS might need to access in order to carry out the task into the memory space below 640 K, switched the CPU into real mode, and then reissued the function call to let MS-DOS act on that data. Once MS-DOS had done its work, the DOS extender switched the CPU back into protected mode, copied any necessary data back to where the protected-mode software expected it to be, and returned control to it.
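The round trip described above (trap the call, copy data down, drop into real mode, let MS-DOS work, climb back into protected mode, copy results back up) can be sketched as a toy simulation. Everything here is hypothetical and heavily simplified: `Machine`, `DosExtender`, `dos_read`, `dos_write`, and the `bounce_addr` transfer buffer are names invented for illustration, and a real extender hooked the low-level interrupt that programs used to call MS-DOS rather than exposing Python methods.

```python
from dataclasses import dataclass, field

KB = 1024
CONVENTIONAL_LIMIT = 640 * KB  # MS-DOS can only see addresses below this


@dataclass
class Machine:
    """Toy PC: a CPU mode, memory as address -> buffer, and a disk."""
    mode: str = "protected"
    memory: dict = field(default_factory=dict)  # address -> bytes
    disk: dict = field(default_factory=dict)    # filename -> bytes
    log: list = field(default_factory=list)

    def switch_to(self, mode: str) -> None:
        self.log.append(f"switch to {mode} mode")
        self.mode = mode


def dos_write(m: Machine, name: str, addr: int) -> None:
    """Stand-in for MS-DOS writing a buffer to disk (real mode, low memory only)."""
    assert m.mode == "real" and addr < CONVENTIONAL_LIMIT
    m.disk[name] = m.memory[addr]
    m.log.append(f"DOS write {name}")


def dos_read(m: Machine, name: str, addr: int) -> None:
    """Stand-in for MS-DOS reading a file into a low-memory buffer."""
    assert m.mode == "real" and addr < CONVENTIONAL_LIMIT
    m.memory[addr] = m.disk[name]
    m.log.append(f"DOS read {name}")


class DosExtender:
    """Hypothetical extender that intercepts DOS calls made in protected mode."""

    def __init__(self, m: Machine, bounce_addr: int = 0x9000):
        self.m = m
        self.bounce = bounce_addr  # hypothetical transfer buffer below 640 K

    def _call_dos(self, dos_function, name: str) -> None:
        self.m.switch_to("real")         # drop down so MS-DOS can run
        dos_function(self.m, name, self.bounce)
        self.m.switch_to("protected")    # climb back up for the application

    def write_file(self, name: str, high_addr: int) -> None:
        # Copy the caller's data down where MS-DOS can see it, then reissue the call.
        self.m.memory[self.bounce] = self.m.memory[high_addr]
        self._call_dos(dos_write, name)

    def read_file(self, name: str, high_addr: int) -> None:
        # Let MS-DOS fill the low buffer, then copy the result back up.
        self._call_dos(dos_read, name)
        self.m.memory[high_addr] = self.m.memory[self.bounce]


if __name__ == "__main__":
    pc = Machine()
    ext = DosExtender(pc)
    pc.memory[0x200000] = b"letter contents"  # a buffer 2 MB up
    ext.write_file("LETTER.TXT", 0x200000)
    ext.read_file("LETTER.TXT", 0x300000)
    print(pc.memory[0x300000])
    print(pc.log)
```

From the application’s side, `write_file` and `read_file` simply take a high-memory address; the mode switches and copying stay hidden inside the extender, which is the transparency the next paragraph describes.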

One could argue that a DOS extender was just as much a hack as any of the other workarounds for the 640 K barrier; it certainly wasn’t as efficient as a more straightforward contiguous memory model, like that enjoyed by OS/2, would have been. It was particularly inefficient on the 80286, which, unlike the 80386, could get from protected mode back into real mode only by way of a costly processor reset. But even so, it was clearly a better hack than any of the ones that had been devised to date. It finally let Intel’s more advanced processors run, most of the time anyway, as their designers had intended them to run. And from the programmer’s perspective it was, with only occasional exceptions, transparent; you just asked for the memory you needed and went about your business from there, and let the DOS extender worry about all the details going on behind the scenes. The technology was still in an imperfect state that summer of 1988, but if it could be perfected it would be a dream come true for programmers, the next best thing to a world completely free of MS-DOS and its limitations. And it might just be a dream come true for Windows as well, thought David Weise.

Quarterdeck may have pioneered the idea of the DOS extender, but their implementation was lacking in the view of Weise and his sometime colleague Murray Sargent. With Sargent’s help in the early stages, Weise implemented his own DOS extender and then his own protected-mode version of Windows which used it over three feverish months of nights and weekends. “We’re not gonna ask anybody, and then if we’re done and they shoot it down, they shoot it down,” he remembers thinking.

There are all these little gotchas throughout it, but basically you just work through the gotchas one at a time. You just close your eyes, and you just charge ahead. You don’t think of the problems, or you’re not gonna do it. It’s fun. Piece by piece, it’s coming. Okay, here come the keyboard drivers, here come the display drivers, here comes GDI — oh, look, here’s USER!

By the fall of 1988, Weise had his secret project far enough along to present to Bill Gates, Steve Ballmer, and the rest of the Windows team. In addition to plenty of still-unresolved technical issues, the question of whether a protected-mode Windows would step too much on the toes of OS/2, an operating system whose allure over MS-DOS was partially that it could run in protected mode all the time, haunted the discussion. But Gates, exasperated beyond endurance by IBM, wasn’t much inclined to defer to them anymore. Never a boss known for back-patting, he told Weise simply, “Okay, let’s do it.”

Microsoft would eventually release their approach to the DOS extender as an open protocol called the “DOS Protected Mode Interface,” or DPMI. It would change the way MS-DOS-based computers were programmed forever, not only inside Windows but outside of it as well. The revolutionary non-Windows game Doom, for example, would have been impossible without the standalone DOS extender DOS/4GW, which implemented the DPMI specification and was hugely popular among game programmers in particular for years. So, DPMI became by far the most important single innovation of Windows 3.0. Ironically given that it debuted as part of an operating environment designed to hide the ongoing existence of MS-DOS from the user, it single-handedly made MS-DOS a going concern right through the decade of the 1990s, giving the Quick and Dirty Operating System That Refused to Die a lifespan absolutely no one would ever have dreamed for it back in 1981.

But the magic of DPMI wouldn’t initially apply to all Windows systems. Windows 3.0 could still run, theoretically at least, on even a lowly 8088-based PC compatible from the early 1980s — a computer whose processor didn’t have a protected mode to be switched into. For all that he had begged and cajoled IBM to make OS/2 an 80386-exclusive operating system, Bill Gates wasn’t willing to abandon less powerful machines for Microsoft’s latest operating environment. In addition to fueling conspiracy theories that Gates had engineered OS/2 to fail from the beginning, this data point did fit the short-lived official line that OS/2 was for high-end machines and Windows for low-end ones. Yet the real reasons behind it were more subtle. Partly because of a global chip shortage that made all sorts of computers more expensive in the late 1980s and briefly threatened to derail the inexorable march of Moore’s Law, users hadn’t flocked to the 80386-based machines quite as quickly as Microsoft had anticipated when the OS/2 debate was raging back in 1986. The fattest part of the market’s bell curve circa 1989 was still the 80286 generation of computers, with a smattering of pace-setting 80386s and laggardly 8088s on either side of them. Microsoft thus ironically judged the 80386 to be exactly the bridge too far in 1989 that IBM had claimed it to be in 1986. Even before Windows 3.0 came out, the chip shortage was easing and Moore’s Law was getting back on track; Intel started producing their fourth-generation microprocessor, the 80486, in the last weeks of 1989.[1] For the time being, though, Windows was expected to support the full range of MS-DOS-based computers, reaching all the way back to the beginning.

[1] The 80486 was far more efficient than its predecessor, boasting roughly twice the throughput when clocked at the same speed. But, unlike the 80286 and 80386, it didn’t sport any new operating modes or fundamentally new capabilities, and thus didn’t demand any special consideration from software like Windows/386 and Windows 3.0 that was already utilizing the 80386 to its full potential.

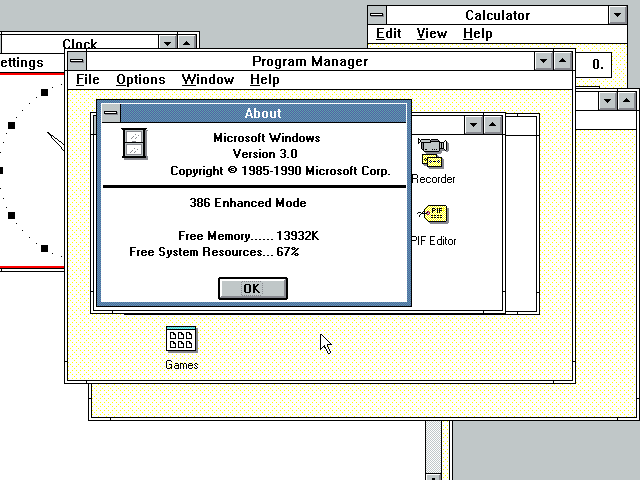

And yet, as we’ve seen, DPMI was just too brilliant an innovation to give up in the name of maintaining compatibility with antiquated 8088-based machines. MS-DOS had for years been forcing owners of higher-end hardware to use their machines in a neutered fashion, and Microsoft wasn’t willing to continue that dubious tradition in the dawning era of Windows. So, they decided to ship three different versions of Windows in every box. When started on an 8088-class machine, or on any machine without memory beyond 640 K, Windows ran in “real mode.” When started on an 80286 with more than 640 K of memory, or on an 80386 with more than 640 K but less than 2 MB of memory, it ran in “standard mode.” And when started on an 80386 with at least 2 MB of memory, it ran in its ultimate incarnation: “386 enhanced mode.”
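The three-way choice just described amounts to a small decision procedure. The Python function below is a simplified sketch built only from the thresholds stated in the paragraph above; it is not Microsoft’s actual detection code, and the function name `windows3_mode` and its string labels are invented for illustration.

```python
KB = 1024
MB = 1024 * KB

EIGHTY_EIGHT_CLASS = {"8088", "8086"}  # processors with no protected mode


def windows3_mode(cpu: str, memory_bytes: int) -> str:
    """Pick a Windows 3.0 operating mode from CPU type and installed memory (simplified)."""
    # No protected mode, or nothing above 640 K to use: fall back to real mode.
    if cpu in EIGHTY_EIGHT_CLASS or memory_bytes <= 640 * KB:
        return "real"
    # An 80286 with memory above 640 K gets DPMI-style protected mode.
    if cpu == "80286":
        return "standard"
    # An 80386 needs at least 2 MB before the full 386 enhanced mode kicks in.
    if cpu == "80386":
        return "386 enhanced" if memory_bytes >= 2 * MB else "standard"
    raise ValueError(f"unknown CPU: {cpu}")
```

For example, `windows3_mode("80386", 1 * MB)` yields `"standard"`, while the same chip with 2 MB yields `"386 enhanced"`.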

In both of the latter modes, Windows 3.0 could offer what had long been the Holy Grail for any MS-DOS-hosted GUI environment: an application could simply request as much memory as it needed, without having to worry about what physical addresses that memory included or whether it added up to more than 640 K.[2] No earlier GUI environment, from Microsoft or anyone else, had met this standard.

[2] This wasn’t quite the “32-bit flat memory model” which Microsoft had explicitly promised Windows would never include in the joint statement with IBM. That referred to an addressing mode unique to the 80386 and its successors, which allowed them to access up to 4 GB of memory in a very flexible way. Having been written to support the 80286, Windows 3.0, even in 386 enhanced mode, was still limited to 16 MB of memory, and had to use a somewhat more cumbersome form of addressing known as a segmented memory model. Still, it was close enough that it arguably went against the spirit of the statement, something that wouldn’t be lost on IBM.
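To make the contrast with a flat memory model a little more concrete, here is a hedged Python sketch of the two styles of segmented addressing involved. In the 8088’s real mode, a 16-bit segment value is shifted left four bits and added to a 16-bit offset. In 80286-style protected mode, a selector picks a descriptor holding a 24-bit base address and a limit of at most 64 K. The `Descriptor` class and the single `table` list are simplifications invented here: real hardware keeps separate global and local descriptor tables (chosen by bit 2 of the selector), checks privilege levels, and raises a processor fault rather than a Python `IndexError`.

```python
from dataclasses import dataclass
from typing import List


def real_mode_address(segment: int, offset: int) -> int:
    """8088 real mode: 16-bit segment shifted left four bits, plus a 16-bit offset."""
    return (segment << 4) + offset


@dataclass
class Descriptor:
    base: int   # start of the segment; 24 bits wide on the 80286
    limit: int  # highest legal offset; never more than 0xFFFF (64 K) on the 80286


def protected_mode_address(table: List[Descriptor], selector: int, offset: int) -> int:
    """80286-style protected mode: the selector chooses a descriptor, the offset is checked and added."""
    index = selector >> 3  # bits 0-2 hold privilege and table-select flags, ignored here
    desc = table[index]
    if offset > desc.limit:
        # The real CPU raises a general-protection fault at this point.
        raise IndexError("offset beyond segment limit")
    return desc.base + offset
```

Because no single segment can exceed 64 K in this scheme, a program wanting a larger block of data has to spread it over several selectors and juggle them, which is the bookkeeping a 32-bit flat model does away with.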

In 386 enhanced mode, Windows 3.0 also incorporated elements of the earlier Windows/386 for running vanilla MS-DOS applications. Such applications ran in the 80386’s virtual mode; thus Windows 3.0 used all three operating modes of the 80386 in tandem, maximizing the potential of a chip whose specifications owed a lot to Microsoft’s own suggestions. When running on an 8088 or 80286, Windows still served as little more than a task launcher for MS-DOS applications, but on an 80386 with enough memory they multitasked as seamlessly as native Windows applications — or perhaps more so: vanilla MS-DOS applications running inside their virtual machines actually multitasked preemptively, while normal Windows applications only multitasked cooperatively. So, on an 80386 in particular, Windows 3.0 had a lot going for it even for someone who couldn’t care less about Susan Kare’s slick new icons. It was much, much more than just a pretty face.[3]

[3] Memory management on MS-DOS-based versions of Windows is an extremely complicated subject, one which alone has filled thick technical manuals. This article has presented by no means a complete picture, only the most cursory of overviews intended to convey the importance of Windows 3.0’s central innovation of DPMI. In addition to that innovation, though, Windows 3.0 and its successors employed plenty of other tricks, many of them making yet more clever use of the 80386’s virtual mode, Intel’s gift that kept on giving. For truly dedicated historians of a technical bent, I recommend a book such as Unauthorized Windows 95 by Andrew Schulman (which does cover memory management under earlier versions of Windows as well), Windows Internals by Matt Pietrek, and/or DOS and Windows Protected Mode by Al Williams.
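The difference between cooperative and preemptive multitasking mentioned above can be shown with a toy round-robin scheduler. In this sketch, which is an illustration invented here and not a model of how Windows 3.0’s virtual-machine manager actually scheduled anything, each task is a string in which `w` is one unit of work and `y` is a point where the task voluntarily gives up the processor. The cooperative scheduler switches tasks only at those `y` markers; the preemptive one ignores them and cuts each task off after a fixed `quantum` of work, as a timer interrupt would.

```python
from collections import deque
from typing import Dict, List


def cooperative_schedule(tasks: Dict[str, str]) -> List[str]:
    """Round-robin where a task gives up the CPU only at its own 'y' markers."""
    queue = deque((name, list(script)) for name, script in tasks.items())
    timeline: List[str] = []
    while queue:
        name, script = queue.popleft()
        while script:
            step = script.pop(0)
            if step == "y":
                break              # voluntary yield: the next task gets a turn
            timeline.append(name)  # one unit of work done by this task
        if script:
            queue.append((name, script))
    return timeline


def preemptive_schedule(tasks: Dict[str, str], quantum: int = 1) -> List[str]:
    """Round-robin where a simulated timer ends each turn after `quantum` work units."""
    if quantum < 1:
        raise ValueError("quantum must be at least 1")
    # Only the amount of work matters here; voluntary 'y' markers are ignored.
    queue = deque((name, script.count("w")) for name, script in tasks.items())
    timeline: List[str] = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(quantum, remaining)
        timeline.extend([name] * ran)
        if remaining > ran:
            queue.append((name, remaining - ran))
    return timeline
```

With `tasks = {"A": "wwww", "B": "wywy"}`, the cooperative version lets task A, which never yields, hog the processor for its whole run before B gets a turn, while the preemptive version interleaves the two regardless of how politely each is written.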

Which isn’t to say that the improved aesthetics weren’t hugely significant in their own right. While the full technical import of Windows 3.0’s new underpinnings would take some time to sink in, it was immediately obvious that it was slicker and far more usable than what had come before. Macintosh zealots would continue to scoff, at times with good reason, at the clunkier aspects of the environment, but it unquestionably came far closer than anything yet to that vision which Bill Gates had expressed in an unguarded moment back in 1984 — the vision of “the Mac on Intel hardware.”

A Quick Tour of Windows 3.0

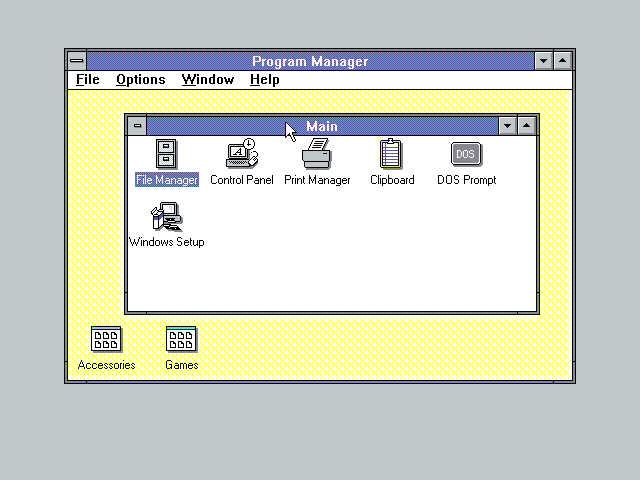

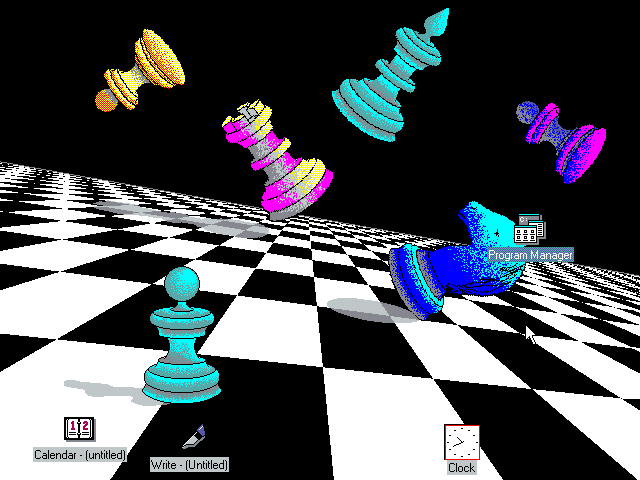

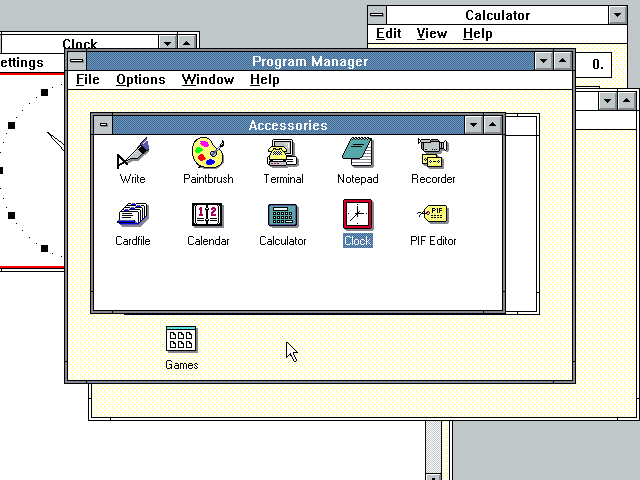

Windows 3.0 really is a dramatic leap compared to what came before. The text-based “MS-DOS Executive” — just the name sounds clunky, doesn’t it? — has been replaced by the “Program Manager.” Applications are now installed, and are represented as icons; we’re no longer forced to scroll through long lists of filenames just to start our word processor. Indeed, the whole environment is much more attractive in general, having finally received some attention from real visual designers like Susan Kare of Macintosh fame.

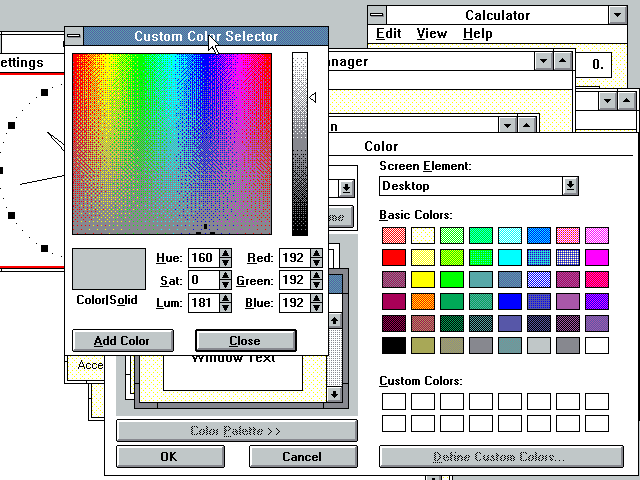

One area that’s gotten a lot of attention from the standpoint of both usability and aesthetics is the Control Panel. Much of this part of Windows 3.0 is lifted directly from the OS/2 Presentation Manager — with just enough differences introduced to frustrate.

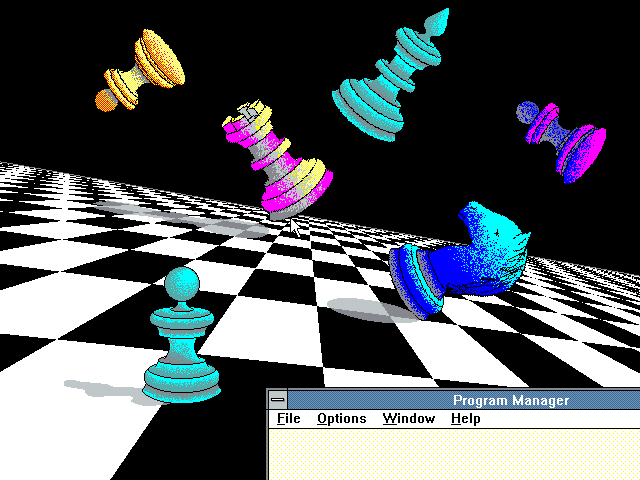

In one of the countless new customization and personalization options, we can now use images as our desktop background.

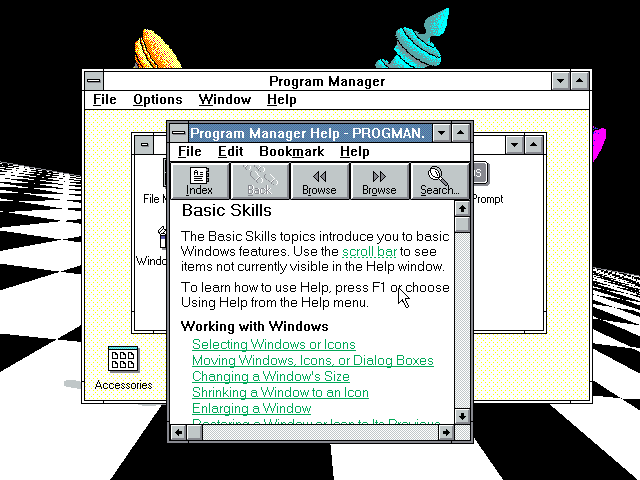

The help system is extensive and comprehensive. Years before a web browser became a standard Windows component, Windows Help was a full-fledged hypertext reader, a maze of twisty little links complete with embedded images and sounds.

The icons on the desktop still represent only running applications that have been minimized. We would have to wait until Windows 95 for the desktop-as-general-purpose-workspace concept to reach fruition.

For all the aesthetic improvements, the most important leap made by Windows 3.0 is its shattering of the 640 K barrier. When run on an 80286 or 80386, it uses Microsoft’s new DPMI technology to run in those processors’ protected mode, leaving the user and (for the most part) the programmer with just one heap of memory to think about; no more “conventional” and “extended” and “expanded” memory to scratch your head over. It’s difficult to exaggerate what a miracle this felt like after all the years of struggle. Finally, the amount of memory you had in your machine was the amount of memory you had to run Windows and its applications — end of story.

In contrast to all of the improvements in the operating environment itself, the set of standard applets that shipped with Windows 3.0 is almost unchanged since the days of Windows 1.

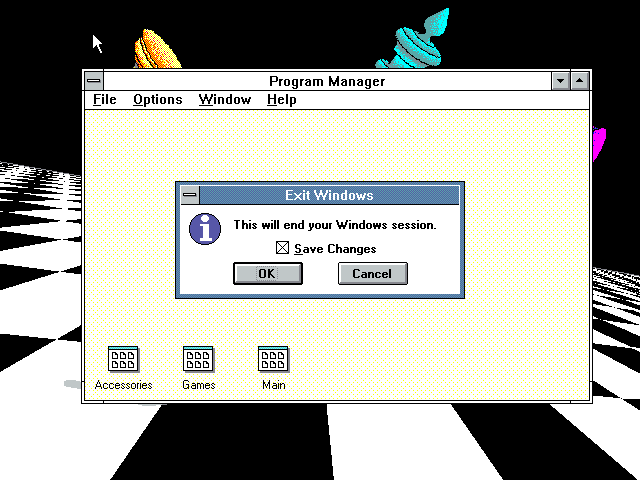

The Program Manager, like the MS-DOS Executive before it, in a sense is Windows; we close it to exit the operating environment itself and return to the MS-DOS prompt.

A consensus emerged well ahead of Windows 3.0’s release that this was the GUI which corporate America could finally embrace — that the GUI’s time had come, and that this GUI was the one destined to become the standard. One overheated pundit declared that “this is probably the most anticipated product in the history of the world.” Microsoft did everything possible to stoke those fires of anticipation. Rather than aligning the launch with a Comdex show, they opted to put on a glitzy, Apple-style stand-alone media event to mark the beginning of the Windows 3.0 era. In fact, one might even say that they rather outdid the famously showy Apple.

The big rollout took place on May 22, 1990, at New York’s Center City at Columbus Circle. A hundred third-party publishers showed up with Windows 3.0 applications, along with fifty hardware makers who were planning to ship it pre-installed on every machine they sold. Closed-circuit television feeds beamed the proceedings to big-screen theaters in half a dozen other cities in the United States, along with London, Paris, Madrid, Singapore, Stockholm, Milan, and Mexico City. Everywhere standing-room-only crowds clustered, made up of those privileged influence-wielders who could score a ticket to what Bill Gates himself described as “the most extravagant, extensive, and elaborate software introduction ever,” to the tune of a $3 million price tag. Microsoft had tried to go splashy from time to time before, but never had they indulged in anything like this. It was, Gates’s mother reckoned, the “happiest day of Bill’s life” to date.

The industry press was carried away on Microsoft’s river of hype, developing on the company’s behalf a messiah complex every bit as worthy of Apple as the big unveiling had been. “If you think technology has changed the world in the last few years, hold on to your seats,” wrote one pundit. Gates made the rounds of talk shows like Good Morning America, as Microsoft spent another $10 million on an initial advertising campaign and carpet-bombed the industry with 400,000 demonstration copies of Windows 3.0, sent to anyone who was or might conceivably become a technology taste-maker.

The combination of wall-to-wall hype and a truly compelling product was a winning one; this time, Microsoft wouldn’t have to fudge their Windows sales numbers. When they announced that they had sold 1 million boxed copies of Windows 3.0 in the first four months, each for $80, no one doubted them. “There is nothing that even compares or comes close to the success of this product,” said industry analyst Tim Bajarin. He went on to note in a more ominous vein that “Microsoft is on a path to continue dominating everything in desktop computing when it comes to software. No one can touch or even slow them down.”

Windows 3.0 inevitably won “Best Business Program” for 1990 from the Software Publishers Association, an organization that ran on the hype generated by its members. More persuasive were the endorsements from other sources. For example, after years of skepticism toward previous versions of Windows, the hardcore tech-heads at Byte magazine were effusive in their praise of this latest one, titling their first review thereof simply “Three’s the One.” “On both technical and strategic grounds,” they wrote, “Windows 3.0 succeeds brilliantly. After years of twists and turns, Microsoft has finally nailed this product. Try it. You’ll like it.” PC Computing put an even more grandiose spin on things, straining toward a scriptural note (on the Second Day, Microsoft created the MS-DOS GUI, and it was Good):

When the annals of the PC are written, May 22, 1990, will mark the first day of the second era of IBM-compatible PCs. On that day, Microsoft released Windows 3.0. And on that day, the IBM-compatible PC, a machine hobbled by an outmoded, character-based operating system and 1970s-style programs, was transformed into a computer that could soar in a decade of multitasking graphical operating environments and powerful new applications. Windows 3.0 gets right what its predecessors — Visi On, GEM, earlier versions of Windows, and OS/2 Presentation Manager — got wrong. It delivers adequate performance, it accommodates existing DOS applications, and it makes you believe that it belongs on a PC.

Windows 3.0 sold and sold and sold, like no piece of software had ever sold before, transforming in a matter of months the picture that sprang to most people’s minds when they thought of personal computing from a green screen with a blinking command prompt to a mouse pointer, icons, and windows — thus accomplishing the mainstream computing revolution that Apple had never been able to manage, despite the revolutionary rhetoric of their old “1984” advertisement. Windows became so ubiquitous so quickly that the difficult questions that had swirled around Microsoft prior to its launch — the question of Apple’s legal case and the question of Microsoft’s ongoing relationship with IBM and OS/2 — faded into the background noise, just as Bill Gates had hoped they would.

Sure, Apple zealots and others could continue to scoff, could note that Windows crashed all too easily, that too many things were still implemented clunkily in comparison to MacOS, that the inefficiencies that came with building on such a narrow foundation as MS-DOS meant that it craved far better hardware than it ought to in order to run decently. None of it mattered. All that mattered was that Windows 3.0 was a usable, good-enough GUI that ran on cheap commodity hardware, was free of the worst drawbacks that came with MS-DOS, and had plenty of software available for it — enough native software, in fact, to make its compatibility with vanilla MS-DOS software, once considered so vital for any GUI hoping to make a go of it, almost moot. The bet Bill Gates had first put down on something called the Interface Manager before the IBM PC even officially existed, which he had doubled down on again and again only to come up dry every time, had finally paid off on a scale even he hadn’t ever imagined. Microsoft would sell 2.75 million copies of Windows 3.0 by the end of 1990 — and then the surge really began. Sales hit 15 million copies by the end of 1991. And yet if anything such numbers underestimate its ubiquity at the end of its first eighteen months on the market. Thanks to widespread piracy which Microsoft did virtually nothing to prevent, estimates were that at least two copies of Windows had been installed for every one boxed copy that had been purchased. Windows was the new standard for mainstream personal computing in the United States and, increasingly, all over the world.

At the Comdex show in November of 1990, Bill Gates stepped onstage to announce that Windows 3.0 had already gotten so big that no general-purpose trade show could contain it. Instead Microsoft would inaugurate the Windows World Exposition Conference the following May. Then, after that and the other big announcements were all done, he lapsed into a bit of uncharacteristic (albeit carefully scripted) reminiscing. He remembered coming onstage at the Fall Comdex of seven years before to present the nascent first version of Windows, infamously promising that it would be available by April of 1984. Everyone at that show had talked about how relentlessly Microsoft laid on the Windows hype, how they had never seen anything quite like it. Yet, looking back, it all seemed so unbearably quaint now. Gates had spent all of an hour preparing his big speech to announce Windows 1.0, strolled onto a bare stage carrying his own slide projector, and had his father change the slides for him while he talked. Today, the presentation he had just completed consisted of four big screens, each featuring people with whom he had “talked” in a carefully choreographed one-man show — all in keeping with the buzzword du jour of 1990, “multimedia.”

The times, they were indeed a-changing. An industry, a man, a piece of software, and, most of all, a company had grown up. Gates left no doubt that it was only the beginning, that he intended for Microsoft to reign supreme over the glorious digital future.

All these new technologies await us. Unless they are implemented in standard ways on standard platforms, any technical benefits will be wasted by the further splintering of the information base. Microsoft’s role is to move the current generation of PC software users, which is quickly approaching 60 million, to an exciting new era of improved desktop applications and truly portable PCs in a way that keeps users’ current applications, and their huge investment in them, intact. Microsoft is in a unique position to unify all those efforts.

Once upon a time, words like these could have been used only by IBM. But now Microsoft’s software, not IBM’s hardware, was to define the new “standard platform” — the new safe choice in personal computing. The PC clone was dead. Long live the Wintel standard.

(Sources: the books The Making of Microsoft: How Bill Gates and His Team Created the World’s Most Successful Software Company by Daniel Ichbiah and Susan L. Knepper, Hard Drive: Bill Gates and the Making of the Microsoft Empire by James Wallace and Jim Erickson, Gates: How Microsoft’s Mogul Reinvented an Industry and Made Himself the Richest Man in America by Stephen Manes and Paul Andrews, Computer Wars: The Fall of IBM and the Future of Global Technology by Charles H. Ferguson and Charles R. Morris, and Apple Confidential 2.0: The Definitive History of the World’s Most Colorful Company by Owen W. Linzmayer; New York Times of March 18 1989 and July 22 1989; PC Magazine of February 12 1991; Byte of June 1990 and January 1992; InfoWorld of May 20 1991; Computer Gaming World of June 1991. Finally, I owe a lot to Nathan Lineback for the histories, insights, comparisons, and images found at his wonderful online “GUI Gallery.”)

Footnotes

| ↑1 | The 80486 was far more efficient than its predecessor, boasting roughly twice the throughput when clocked at the same speed. But, unlike the 80286 and 80386, it didn’t sport any new operating modes or fundamentally new capabilities, and thus didn’t demand any special consideration from software like Windows/386 and Windows 3.0 that was already utilizing the 80386 to its full potential. |
|---|---|
| ↑2 | This wasn’t quite the “32-bit flat memory model” which Microsoft had explicitly promised Windows would never include in the joint statement with IBM. That referred to an addressing mode unique to the 80386 and its successors, which allowed them to access up to 4 GB of memory in a very flexible way. Having been written to support the 80286, Windows 3.0, even in 386 enhanced mode, was still limited to 16 MB of memory, and had to use a somewhat more cumbersome form of addressing known as a segmented memory model. Still, it was close enough that it arguably went against the spirit of the statement, something that wouldn’t be lost on IBM. |
| ↑3 | Memory management on MS-DOS-based versions of Windows is an extremely complicated subject, one which alone has filled thick technical manuals. This article has presented by no means a complete picture, only the most cursory of overviews intended to convey the importance of Windows 3.0’s central innovation of DPMI. In addition to that innovation, though, Windows 3.0 and its successors employed plenty of other tricks, many of them making yet more clever use of the 80386’s virtual mode, Intel’s gift that kept on giving. For truly dedicated historians of a technical bent, I recommend a book such as Unauthorized Windows 95 by Andrew Schulman (which does cover memory management under earlier versions of Windows as well), Windows Internals by Matt Pietrek, and/or DOS and Windows Protected Mode by Al Williams. |

Alex Smith

August 3, 2018 at 4:36 pm

wasn’t much inclined to differ to them anymore

defer

Jimmy Maher

August 3, 2018 at 5:43 pm

Thanks!

Ben L.

August 3, 2018 at 5:03 pm

Once again a brilliant article. Well done and keep up the good work!

Sniffnoy

August 3, 2018 at 5:34 pm

So did this apply even to DOS programs that had been written to use high memory via one hack or another?

Jimmy Maher

August 3, 2018 at 5:51 pm

No, a program had to be compiled with the appropriate libraries and coded from the start to use a DOS extender. The Watcom C compiler included everything you needed right in the box. For this reason, it became very popular among game developers, albeit late in the MS-DOS gaming era. id famously pioneered its use in Doom.

Sniffnoy

August 3, 2018 at 10:45 pm

Oh, huh. So existing DOS applications didn’t get to go past 640K, but they did at least run with their own address spaces? I see. And I guess Tomber answered the obvious followup question, that Windows did know how to handle it if those existing programs tried to use XMS or EMS. Which gets me wondering if there were other custom hacks it didn’t know how to handle… given Microsoft’s famous dedication to backwards-compatibility at the time, I imagine they would have covered everything, but…

Jimmy Maher

August 4, 2018 at 7:35 am

MS-DOS applications in 386 enhanced mode ran inside what amounted to a virtual 8088-based IBM PC with a 1 MB address space (640 K of which was available as easily accessible memory), thanks to the 80386’s virtual mode. If they tried to use extended or expanded memory, Windows had them covered by emulating the way those technologies worked under MS-DOS. I’m sure there were edge cases — there always are — but on the whole the system worked quite well. It was, needless to say, a non-trivial feat of software engineering.
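For readers wondering where the 640 K figure comes from: an 8088 program forms a 20-bit address from a 16-bit segment and a 16-bit offset, and conventional memory stops at segment A000h, where the video memory begins. The following is a minimal Python sketch of that arithmetic, modelling the original 8088's wraparound at 1 MB; the function and constant names are hypothetical, chosen purely for illustration.

```python
def real_mode_address(segment: int, offset: int) -> int:
    """Return the 20-bit address for segment:offset, as an 8088 would form it."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must be 16-bit values")
    # Segment is shifted left 4 bits (multiplied by 16) and added to the offset.
    # The 8088 had only 20 address lines, so the result wraps around at 1 MB.
    return ((segment << 4) + offset) & 0xFFFFF


# Conventional memory ends where video memory begins, at segment A000h.
CONVENTIONAL_LIMIT = real_mode_address(0xA000, 0x0000)

if __name__ == "__main__":
    print(hex(real_mode_address(0x1234, 0x0010)))  # 0x12350
    print(CONVENTIONAL_LIMIT // 1024, "K")          # 640 K
    print(hex(real_mode_address(0xFFFF, 0x0010)))  # 0x0 (wraps on an 8088)
```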

Sniffnoy

August 3, 2018 at 10:46 pm

(Odd, my reply here seems to have been caught by the spam filter?)

Jimmy Maher

August 4, 2018 at 7:37 am

You used a different email address in your previous comment. A first comment from any given email address is always flagged for moderation.

Sniffnoy

August 4, 2018 at 2:45 pm

I see, thanks!

Tomber

August 3, 2018 at 7:51 pm

Just in case you were asking a different question from the one Jimmy answered, Windows 3 (at least in 386 enhanced mode) could deliver emulated extended (XMS) or expanded (EMS) memory to a regular real-mode DOS app that knew how to use XMS or EMS.

Rowan Lipkovits

August 3, 2018 at 5:38 pm

“wasn’t much inclined to differ to them anymore.”

Defer?

tedder

August 3, 2018 at 6:41 pm

Some things I’d love to hear more about:

1: chip shortage- on all chips, on CPUs, ‘just’ on memory?

2: what “industry dinner” was Gates drunk at?

3: cooperative multitasking in Windows. How did it work, how did it work poorly, etc.

Jimmy Maher

August 4, 2018 at 7:21 am

1. Memory chips were the most obviously affected, but it seems that the problems did spread to other kinds of chips as well. In the book Gates, Microsoft insiders indicate that they do believe it to have had a significant effect on uptake of the 80386, by keeping those machines more expensive than they otherwise would have been. It may also have caused Intel to delay the rollout of the 80486.

2. This was after the Second Annual Computer Bowl, a clone of the College Bowl trivia competition. It took place in Boston in May of 1990, just days before the big Windows 3.0 rollout, and pitted prominent East Coast industry figures against their counterparts in the West. Hyper-competitive as always, Gates apparently studied hard for the event — he’s reported to have boarded the airplane with “an impressive stack of books” — and wound up being named Most Valuable Player after the West squeaked out a victory, 300 to 290. So, he was feeling pretty good about himself at the shindig afterward…

3. The multitasking system in Windows, as on the Macintosh, expected applications to be good citizens, to voluntarily relinquish control to the operating system periodically. They should do this in the obvious case where they were simply waiting for input, but also, and less obviously, should periodically pause and do so when involved in any sort of big, time-consuming calculation. Some applications were better at adhering to these guidelines than others. And of course an application that got stuck in an infinite loop could bring down the whole house of cards. The end result was that Windows as a whole only ran as well as the most badly written application that was currently running.
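The cooperative scheme described above can be modelled in a few lines. This is a toy sketch, assuming Python generators as a stand-in for Windows applications: each `yield` plays the part of an application handing control back to the system, and `run_cooperative`, `polite_task`, and `hog_task` are hypothetical names, not anything from the Windows API.

```python
from collections import deque
from typing import Generator, Iterable, List

Task = Generator[None, None, None]


def polite_task(name: str, steps: int, log: List[str]) -> Task:
    """A well-behaved task: does one unit of work, then yields."""
    for i in range(steps):
        log.append(f"{name}{i}")
        yield  # voluntarily hand control back to the scheduler


def hog_task(name: str, steps: int, log: List[str]) -> Task:
    """A badly behaved task: does all of its work before ever yielding."""
    for i in range(steps):
        log.append(f"{name}{i}")
    yield


def run_cooperative(tasks: Iterable[Task]) -> None:
    """Round-robin scheduler that can only switch when a task yields."""
    ready = deque(tasks)
    while ready:
        task = ready.popleft()
        try:
            next(task)          # runs until the task chooses to yield
            ready.append(task)  # still alive: back of the queue
        except StopIteration:
            pass                # task finished; drop it


if __name__ == "__main__":
    log: List[str] = []
    run_cooperative([polite_task("a", 3, log), polite_task("b", 3, log)])
    print(log)  # ['a0', 'b0', 'a1', 'b1', 'a2', 'b2']

    log = []
    run_cooperative([hog_task("h", 3, log), polite_task("b", 3, log)])
    print(log)  # ['h0', 'h1', 'h2', 'b0', 'b1', 'b2']
```

The second run shows the problem in miniature: the scheduler has no way to interrupt `hog_task`, so every other task waits until it chooses to yield. A task that looped forever without yielding would hang the whole loop, much as a stuck application could hang Windows.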

MS-DOS programs multitasked preemptively in 386 enhanced mode because they used the 80386’s virtual mode, which did a lot of the necessary work for the operating system. This sounds a little weird, but you can think of 386 enhanced mode as Windows and all of its native applications running as one preemptively-multitasked task, and each MS-DOS application running as another task. Effectively the only way to multitask MS-DOS software was preemptively because MS-DOS lacked the concept of a program going to sleep and telling the operating system, “Okay, wake me up when the user does something.” Instead software had to busy-wait: cycle through a loop again and again reading the keyboard buffer and the mouse, to see if anything had changed.
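For contrast, here is an equally simplified sketch of preemptive time slicing, in which the scheduler rather than the program decides when to switch. It simulates only the scheduling policy, not how the 80386 and Windows actually implemented it: each yielded value stands for one unit of work after which a timer interrupt could arrive, and `busy_wait_task`, `run_preemptive`, and `quantum` are hypothetical names.

```python
from collections import deque
from typing import Iterator, List


def busy_wait_task(name: str, polls: int) -> Iterator[str]:
    """Models an MS-DOS program spinning in a polling loop.

    Each yielded string stands for one unit of work that the scheduler may
    interrupt after; the program itself never asks to be suspended.
    """
    for i in range(polls):
        yield f"{name}:poll{i}"


def run_preemptive(tasks: List[Iterator[str]], quantum: int) -> List[str]:
    """Round-robin scheduler that switches tasks after `quantum` units of work."""
    ready = deque(tasks)
    trace: List[str] = []
    while ready:
        task = ready.popleft()
        finished = False
        for _ in range(quantum):  # stands in for the timer interrupt
            try:
                trace.append(next(task))
            except StopIteration:
                finished = True
                break
        if not finished:
            ready.append(task)  # preempted: back of the queue
    return trace


if __name__ == "__main__":
    print(run_preemptive([busy_wait_task("a", 4), busy_wait_task("b", 4)], quantum=2))
```

Even though neither task ever volunteers to stop, the two interleave in slices of two units each, which is why busy-waiting MS-DOS programs could still share the machine.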

Brandon

August 6, 2018 at 4:24 am

3. So then I have to wonder, if they had preemptive-multitasking implemented, why not use it on Windows applications as well? Did cooperative have less computational overhead in some way?

Jimmy Maher

August 6, 2018 at 5:17 am

Preemptive multitasking of MS-DOS tasks relied heavily on the 80386’s virtual mode. That wasn’t practical for native Windows applications, one of whose primary advantages was to be able to break out of the 8088’s (and 80386 virtual mode’s) 1 MB address space, thanks to the magic of DPMI.

Aula

August 7, 2018 at 2:43 pm

That can’t be right; after all, Linux running on an 80386 is perfectly capable of pre-emptive multitasking of protected mode applications without any memory restrictions. My guess is that Microsoft simply didn’t want the multitasking code to diverge too much between the different Windows modes.

Tomber

August 6, 2018 at 9:24 pm

It is an interesting question; I think it reduces down to the original design of Windows. It was designed to run in very tight memory, it was designed for real mode, it was designed with a shared un-policed address space, and it wasn’t designed to support concurrency or to be re-entrant. That ties you down to a single thread of execution. The exceptions prove this out: NT could run each Win16 app in a separate ‘session’ but the apps then lost visibility to each other, and you paid a price in memory for each one you started.

The Win32 API corrected those problems.

Patrick Lam

August 27, 2018 at 4:28 pm

To expand on Tomber’s point: the Windows API is event-driven, so Windows programs sit around and ask whether there is an event ready for them (e.g. “the user clicked on your window!”). Under such a system, cooperative multitasking is sort of defensible: you just declare that it shouldn’t take longer than X to handle an event. And you put all of the tasks in the same address space. (Early MacOS was like this too.) If you put different Windows programs in different address spaces, then sharing resources needs more virtualization. DOS programs aren’t in on the system event queue so of course you have to preemptively multitask them and you were up for virtualizing anyway.
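A real Win16 program was built around a loop calling `GetMessage` and `DispatchMessage`. The sketch below is a loose Python analogy, not that API: `message_loop`, `QUIT`, and the string messages are hypothetical, and a standard-library `queue.Queue` stands in for the system event queue. The key point is that `inbox.get()` sleeps until something arrives, where an MS-DOS program would have spun in a polling loop.

```python
import queue
from typing import Callable, Dict, List

QUIT = "quit"  # hypothetical sentinel, loosely analogous to WM_QUIT


def message_loop(inbox: "queue.Queue[str]", handlers: Dict[str, Callable[[], None]]) -> None:
    """Block on the queue, dispatching each message to its handler until QUIT."""
    while True:
        msg = inbox.get()  # sleeps until a message arrives; no polling
        if msg == QUIT:
            break
        handler = handlers.get(msg)
        if handler is not None:
            handler()  # expected to return quickly


if __name__ == "__main__":
    seen: List[str] = []
    inbox: "queue.Queue[str]" = queue.Queue()
    for m in ("click", "resize", "paint", QUIT):
        inbox.put(m)
    message_loop(inbox, {"click": lambda: seen.append("click"),
                         "paint": lambda: seen.append("paint")})
    print(seen)  # ['click', 'paint']
```

Messages without a handler, like `"resize"` here, are simply ignored. Because each handler is expected to return quickly, this is the property that makes cooperative multitasking "sort of defensible" in the sense described above.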

Tomber

August 3, 2018 at 7:46 pm

For those who remember, seeing that screen with the Free System Resources percentage will bring back the memories of the next infamous memory barrier in Windows world. What were system resources, and when did they stop being a problem?

Well, they weren’t a problem in NT. I think they became a reduced problem in Windows 95 or with Win32 apps in general. One thing they were: memory selectors. The HANDLE type in Win16 programming was usually just the memory selector to access a chunk of memory that had been allocated to a program. (Think segments). There could only be so many of these, and so it was possible to exhaust all of them before you ran out of memory. (Like running out of checks, but still having cash in the bank.) If apps tended to make many small allocations, these ‘resources’ would run down and Windows itself could crash.

And this problem did affect 386 enhanced mode, because 16-bit compatibility required a HANDLE/selector to be used for every 64 kilobytes allocated, at minimum. At least at the end, I think there were a maximum of 8192, enough for 512 MB, which I think was the max memory supported on Windows 9x. There were 8192 because selectors were 16-bit values, but only every 8th was a valid selector; possibly they used the other 3 bits for flags.

Perhaps someone else can fill in here or correct?
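Tomber's checks-versus-cash analogy can be made concrete with a toy allocator. This is a deliberately simplified model, assuming that every allocation consumes exactly one selector regardless of its size; real Windows also let programs suballocate small blocks from a local heap inside an existing segment. `ToyAllocator`, `SelectorExhausted`, and `count_allocations` are hypothetical names, and the 8192 limit is taken from the comment above rather than from documentation.

```python
class SelectorExhausted(Exception):
    """Raised when no selectors remain, even though memory does."""


class ToyAllocator:
    """Hypothetical allocator: one selector per allocation, whatever its size."""

    def __init__(self, total_bytes: int, max_selectors: int) -> None:
        self.free_bytes = total_bytes
        self.free_selectors = max_selectors

    def alloc(self, size: int) -> None:
        if self.free_selectors == 0:
            raise SelectorExhausted(f"no selectors left, {self.free_bytes} bytes still free")
        if size > self.free_bytes:
            raise MemoryError("out of memory")
        self.free_selectors -= 1  # every block gets its own selector
        self.free_bytes -= size


def count_allocations(allocator: ToyAllocator, size: int) -> int:
    """Allocate `size`-byte blocks until the allocator refuses; return how many succeeded."""
    count = 0
    try:
        while True:
            allocator.alloc(size)
            count += 1
    except (SelectorExhausted, MemoryError):
        return count


if __name__ == "__main__":
    pool = ToyAllocator(total_bytes=16 * 1024 * 1024, max_selectors=8192)
    print(count_allocations(pool, 100), pool.free_bytes)  # 8192 15958016
    print(8192 * 64 * 1024 // (1024 * 1024), "MB")        # 512 MB
```

With 16 MB of memory and 8192 selectors, 100-byte allocations run out of selectors after 8192 calls, with well over 15 MB still free. With 64 K blocks the same limit looks generous instead: 8192 of them cover exactly 512 MB, the figure mentioned above.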

Patrick Lam

August 27, 2018 at 4:38 pm

2 bits for privilege level, 1 bit for local vs global: https://stackoverflow.com/questions/9113310/segment-selector-in-ia-32

So that leaves 2^13 = 8192 possible selectors in each of the local and global tables.
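The linked answer describes the layout: a 16-bit selector holds a descriptor index in its top 13 bits, a table indicator (global or local) in bit 2, and the requested privilege level in the bottom 2 bits. Since 16 minus 3 leaves 13 bits, each table can hold 2^13 = 8192 entries, and consecutive selectors within one table differ by 8, which is why only every eighth value looked valid. A small Python sketch of the decoding, with `decode_selector` as a hypothetical helper name:

```python
from typing import Tuple


def decode_selector(selector: int) -> Tuple[int, str, int]:
    """Split a 16-bit x86 protected-mode selector into (index, table, RPL)."""
    if not 0 <= selector <= 0xFFFF:
        raise ValueError("selector must be a 16-bit value")
    index = selector >> 3                          # bits 15..3: 13-bit descriptor index
    table = "LDT" if selector & 0b100 else "GDT"   # bit 2: table indicator
    rpl = selector & 0b11                          # bits 1..0: requested privilege level
    return index, table, rpl


MAX_ENTRIES_PER_TABLE = (0xFFFF >> 3) + 1  # 13-bit index, so 8192 entries

if __name__ == "__main__":
    print(decode_selector(0x000F))  # (1, 'LDT', 3)
    print(MAX_ENTRIES_PER_TABLE)    # 8192
```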

Lisa H.

August 4, 2018 at 5:00 am

I wonder who made that chess background.

Tomber

August 4, 2018 at 6:10 pm

Since this might be the ideal point in the series, I also wanted to mention floppy disk formatting, which was a popular example of how Windows 3.x/9x was still built on top of MS-DOS.

When you format a floppy disk on those systems, the entire GUI becomes laggy. Even the mouse cursor would jump around the screen. The popular comparison was OS/2 2.0, where this did not happen. Later on, Windows NT 3.1 also could format a floppy without stalling.

The reason for the stalling is that formatting a floppy was done, for compatibility reasons, by calling a BIOS routine. Because of potential timing sensitivities, Windows had to restore timers and interrupts to their usual MS-DOS quantities, even as Windows continued to run. OS/2 and NT made no pretense to being completely compatible with the 16-bit DOS/BIOS device layer, so they did not need to do this and can format a disk without disruption. Raymond Chen discussed this here, with all the details: https://blogs.msdn.microsoft.com/oldnewthing/20090102-00/?p=19623/

Lt. Nitpicker

August 4, 2018 at 6:39 pm

I think it’s worth briefly mentioning that Microsoft temporarily put out an aggressive upgrade offer to owners of any (current) version of Windows, including the runtime versions that came with programs like Excel, as well as making demos of Windows and Microsoft’s own applications available. This is mentioned in an article from neozeed.

Derek Fawcus

August 4, 2018 at 8:10 pm

Matt Pietrek describes the free system resources in his books.

For 3.1 (and presumably earlier), it is the lowest value of several other percentages; those being the amount of free space in the USER DGROUP heap, the USER menu heap, the USER string heap, and the GDI DGROUP heap. Of those the heap with the smallest percentage free became the free system resources.

This was improved in Windows 95; over and above that, they kludged the reported numbers by biasing them based upon the resources free once Explorer and other programs had started.
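The Windows 3.1 calculation described above boils down to taking a minimum. Here is a minimal sketch, assuming each heap is described by its free and total bytes; the heap names follow the comment, but the figures are invented for illustration, and rounding down is a guess at how Windows rounded.

```python
from typing import Dict, Tuple


def free_system_resources(heaps: Dict[str, Tuple[int, int]]) -> int:
    """Return the smallest free percentage across the given heaps.

    `heaps` maps a heap name to (free_bytes, size_bytes). Percentages are
    rounded down here; the exact rounding Windows used is an assumption.
    """
    return min(free * 100 // size for free, size in heaps.values())


# Hypothetical figures for illustration only (each heap assumed to be 64 K).
EXAMPLE = {
    "USER DGROUP": (40_000, 65_536),
    "USER menu": (60_000, 65_536),
    "USER string": (50_000, 65_536),
    "GDI DGROUP": (20_000, 65_536),
}

if __name__ == "__main__":
    print(free_system_resources(EXAMPLE))  # 30: the GDI heap is the bottleneck
```

Because only the fullest heap counts, a program that leaked GDI objects could drive the reported figure toward zero even while the USER heaps sat mostly empty.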

As to the old “MSDOS Executive”, an updated version was provided with windows 3.0, just look for msdos.exe

Nate

August 4, 2018 at 9:18 pm

“Gates wasn’t willing to cut bait completely with IBM”

I found this colloquial expression confusing. I’m familiar with the older meaning of “fish or cut bait”, which was a call to make a decision now.

I see the Wikipedia description has a section on the newer meaning, “act now or stand down”:

https://en.wikipedia.org/wiki/Fish_or_cut_bait#The_changing_meaning_of_%22cut_bait%22

However, I find “cut bait” as a standalone phrase a bit jarring, even if you intend the latter meaning of “stand down”.

Did you mean “cut ties” instead?

Jimmy Maher

August 5, 2018 at 7:46 am

“Cut bait” when used as a standalone expression means to “abandon an activity or enterprise”: https://idioms.thefreedictionary.com/cut+bait. However, the diction used in the article was perhaps slightly mangled, and I don’t see a great way of making it better. Went with the clearer, if more boring, “cut ties” instead. Thanks!

Martin

August 5, 2018 at 12:31 am

How can you do a walkthrough of Windows 3.0 without mentioning Solitaire or Minesweeper?

Jimmy Maher

August 5, 2018 at 7:46 am

I’m planning an article that will deal only with the games included with Windows. It’s for that reason that I’ve rather been avoiding the subject in these ones.

Joe

August 5, 2018 at 1:09 am

One of the ironies of Windows, as we approach this era of digital history, is how much less accessible it seems to us now than before. As a guy that spends his evenings playing and writing about old video games, the transition to Windows in the early 90s fills me with dread. Because of the relatively simple APIs and system architecture, every DOS game and application ever made (as well as those for Apple II, Commodore, etc.) is easily emulatable.

Windows will change all that. I don’t have any good way to play original Windows 3.x or 95 software. Options like WINE exist, but are buggy. I have a Windows 98 VM that I have run some simple games in, but that’s quite a chore to get working (and updates to my emulator may not support it). The next period of digital history will be harder to study until and unless new tools are built that make it as easy to emulate early generations of Windows as it is to emulate early DOS systems.

Gnoman

August 5, 2018 at 2:19 am

Those tools exist today. There is an emulator by the name of PCem that emulates period hardware quite well. Configured properly, and with a beefy enough host computer (always a problem with emulation), you can set it up as anything up to a late Pentium I MMX processor with an early 3D card.

Jan

August 9, 2018 at 8:06 am

Seconding PCem here.

Fantastic software (if you have the host machine to support it), and probably the best at emulating actual original hardware, which is something that neither DOSBox nor virtual machine applications do.

Running a Windows system in Dosbox is kind of hacky by comparison, because Dosbox is not an actual DOS environment. It works, but it creaks.

In DOS terms, PCem is also great for giving you for example the actual 8088 IBM PC experience. Or running an actual 386 system. More of a curiosity than actually practical – if running games is your primary goal, for the love of god stick with DOSBox unless you fancy hours of freeing that last kb of memory again – but still. It’s the real deal and I am glad it exists.

Jimmy Maher

August 5, 2018 at 7:57 am

I find that VirtualBox works surprisingly well for me. Remarkably, Windows 3.x is even officially supported, indicating that perhaps someone at Oracle is sympathetic to the needs of digital historians. There’s a young man on YouTube who’s made a very good tutorial on getting a complete Windows 3.11 for Workgroups installation set up: https://www.youtube.com/watch?v=4n61RDzSk2E. I followed his instructions, and now have a virtual machine with 1024×768 graphics, sound, CD-ROM support, and even Internet access. I haven’t done much with it yet beyond Windows itself. The only problem I can anticipate might be speed; VirtualBox doesn’t give you any way to throttle the virtual machine. On the other hand, the event-oriented Windows programming approach may make that a non-issue.

All that said, we’re still firmly in the MS-DOS era for the most part when it comes to gaming. It will be another five years or so of historical time before non-casual game developers begin to abandon MS-DOS en masse in favor of Windows 95 and DirectX. So, DOSBox will still rule the day for a long time to come.

Martin

August 5, 2018 at 1:25 pm

I created a DOS Box environment and installed a copy of Windows 3.1 in it. I did the same for Windows 95. OK you need copies of each out there but, hmmm, they are out there. The only thing I am looking for is a PDF printer program for Windows 95, if anyone knows one.

Stefan Ring

August 6, 2018 at 2:58 pm

RedMon – http://pages.cs.wisc.edu/~ghost/redmon/redmon17.htm

Gnoman

August 5, 2018 at 9:25 pm

Speed is a problem with getting an accurate experience, and there’s also another problem. Well into the Windows 95 era, there were still cases of “you must program your software to work with this bit of hardware, because a generic driver won’t cut it”. This was a particular problem for early 3D cards, and I’m certain I remember running into a few other cases that I can’t remember right now.

That’s why I recommend PCem. Because it emulates the underlying hardware, getting those specific bits of hardware is increasingly easy.

Jason Lefkowitz

August 6, 2018 at 8:34 pm

I feel like the big hurdle here is that, while DOSBox includes an entire DOS-compatible environment out of the box, running Windows 3.x on VirtualBox (legally, anyway) requires separately tracking down both a copy of the Windows software and a valid license to use it. Microsoft hasn’t made the software freely available for download as far as I know, and they won’t sell you a license even if you manage to track down the software from (ahem) some other source. So whereas all you need to do to run DOS software is download DOSBox, for Windows you need to answer all these separate questions before you can even get started.

Alex Freeman

August 6, 2018 at 8:02 pm

I still have trouble understanding why Windows 3.0 was so much more popular than Windows 2.0 when the latter was apparently much more stable. My family got Windows 3.1 in 1994, and I still remember to this day what a buggy mess it was. I also have trouble understanding why it was less stable than its predecessor.

Gnoman

August 6, 2018 at 8:24 pm

Windows 3.0 was really unstable because it could run in three different modes (depending on your processor/memory configuration). This was a major kludge of a design, and resulted in a lot of half-baked ideas.

It was really popular because it could run in three different modes (depending on your processor/memory configuration). This was a big selling point because you could use it on a huge variety of systems, which greatly simplified learning and encouraged people with older hardware to take the plunge, since they’d be able to upgrade seamlessly.

Apart from that, 3.0 really was better than 2.x in every way except stability. It was a straight upgrade.

3.1 fixed the majority of the stability problems with Windows itself – most 3.1x crashes were the result of bad drivers or DOS programs not playing nice.

Alex Freeman

August 7, 2018 at 6:10 pm

So did Microsoft ever fix those bad drivers? I remember Gates’s copy of Windows 95 crashing when he was giving a presentation.

Gnoman

August 8, 2018 at 4:54 am

That wasn’t something Microsoft had much control over. The problem was from third-party manufacturers, who were often really sloppy with their code.

Eventually, Windows did fix the driver problem by requiring them to be authorized by Microsoft before Windows would allow the driver to install. This is why so many devices lost compatibility with later versions of Windows, as the manufacturers didn’t care enough (or didn’t exist enough) to go through the signing process.

Alex Freeman

August 8, 2018 at 5:15 am

Oh, that explains it! I still remember Windows having stability problems until Windows XP, though.

Tomber

August 13, 2018 at 3:47 pm

Stability increased rapidly around the Windows 2000/XP (so, 2000-2003) timeframe because of the automated crash reporting via Internet. The reports were sorted, and those which rose to the ‘top’ were referred to engineers, who then had hundreds of crash dumps to look at. If code was signed, MS could even refer it to the third party so that they could figure out what was wrong with their code.

The instability of Dos and Win3.x/9x was always centered around the integration problem: random hardware, random third party software, thrown into one box. When it crashed, the messenger was Windows, and so it took a lot of the blame. What changed was the Internet, NT, Microsoft tightening 3rd party standards, and developer education. Even on NT/XP, there was a final flurry of blue screen crashes when hyper-threading deployed with the Pentium 4, because of the number of hidden driver bugs that didn’t show up until there was actually more than one processor.

JudgeDeadd

August 31, 2018 at 1:52 pm

@Tomber: Huh!! I can’t be the only one who always ignored Windows’s automated crash reporting, assuming that it was just false reassurance that only the most naive computer newbie would put faith in. It’s uplifting to hear that Microsoft employees actually did use these convoluted reports to improve the system.

Jason Stevens

August 7, 2018 at 4:36 am

Shame it’s just an image, but the help system introduced in 3.0 sparked the coming multimedia wave, allowing for a web 1.0-style offline experience of hyperlinked documents with embedded graphics, audio (wave and MIDI), and later on video.

The multimedia version of 3.00 really gave the future direction of Windows at that point of not just a GUI API, but soon centralised APIs for all kinds of things that traditionally were up to the programmer. And it was so much easier to get people to install/update drivers for Windows and experience the boost on all their applications than doing this over and over for each.

Besides adopting the OS/2 1.2 look and feel from 1989, Windows 3.0 also brought us the separation of program launching from file management, with the introduction of the Program Manager along with the massive improvements to the File Manager. Not to mention a working Print Manager as well!

As mentioned, under the hood it was nothing short of amazing. But that leaves a weird void in this DPMI utopia.

When it came to 32-bit programs, Microsoft C 5.1 for Xenix and the yet-to-be-released OS/2 2.0 (and the under-development OS/2 NT) were capable of generating 32-bit code. However, when it came to creating extended DOS applications, Microsoft C was nowhere to be found. This opened the door to Zortech, Watcom and others, but oddly Microsoft sat this round out. The most Windows 3.0-centric extender came from Watcom in the form of win386, a Windows extender that operates in pretty much the same way as a DOS extender, but for Windows! This let you write large applications in full 32 bits long before the arrival of Windows NT 3.1 and the later game changer, Win32s.

On the 16-bit front, many 286 DOS extenders relied on the OS/2 protected-mode interfaces to create DPMI EXEs, just as the barely mentioned “bind.exe” would allow certain well-defined OS/2 apps to be bound with the “Family API”, allowing them to run in both MS-DOS real mode and OS/2 protected mode. The extenders took it one step further and emulated many of the OS/2 APIs, allowing the app to run in protected mode.

Windows 3.0 also brought us Windows hosted compilers including QuickC for Windows, and the “COBOL of the 90’s”, Visual Basic.

Jimmy Maher

August 7, 2018 at 9:10 am

That’s a great point about the help system. I hadn’t ever really thought about it in that way before. Added a bit to the article. Thanks!

Tomber

August 7, 2018 at 6:27 pm

The Help system was important. I recall a program HC.EXE (Help Compiler) that turned properly marked RTF files into HLP files.

Windows 3 did lead to a blooming of development tools, as everybody tried to figure out how to move their established methods to the GUI of Windows. The Windows C API was a pretty big mountain to climb. Paul Allen’s Asymetrix Toolbook was a hypercard-like system. Whitewater Group’s Actor was a smalltalk-like system. Borland’s Turbo Pascal for Windows brought in their own object-oriented (OOP!) wrapper of the Windows API to try to reduce the learning curve. I think it ended up being used as a windowed-console tool, mostly. As games attempted to move, performance problems in GDI caused Microsoft to produce WinG, a forerunner of DirectX.

DZ-Jay

August 9, 2018 at 9:57 am

My, how times have changed. This overly generous excitement over DOS and Windows technology and praise for their engineering qualifications is quite strange to me. They may have been “non-trivial feats of engineering” in their own way, as Jimmy put it, but let us not forget that they were hacks to work around specific limitations and bad design of the old MS-DOS heritage. So, it was incidental complexity added to address mostly self-inflicted issues.

They were implemented as part of a business strategy that valued maintaining backwards compatibility and market breadth at the expense of stability, usability, and future-proofing. (Some may claim that they included a measure of future-proofing, but it was only insofar as what could guarantee lock-in and market penetration, and it was always tainted by the antiquated technology it tried to keep alive.)

For all the clever DPMI and protected mode hacks, let us not forget that DOS and Windows were a mess to actual users — they were arcane, difficult, buggy, unstable, ugly and intimidating. It crashed all the time, which impacted severely on productivity.

Every hack that perpetuated an old and outmoded interface or supported a past design decision of dubious quality only served to delay the implementation of newer and better technologies, sometimes at the expense of new market participants, and had a real impact on the march of progress of the computing industry.

It is why it took 3 versions of Windows to get to an actual workable one; why it took almost 10 years for Windows to get usability and user experience on par with the Macintosh; and why it took another several years for it to reach a proper level of stability in which people actually enjoyed, more than tolerated, using it.

dZ.

Leo Vellés

April 19, 2020 at 10:15 pm

“As I’ve had occasion to describe in multiple articles by now, the most crippling single disadvantage of MS-DOS had always been the its inability to directly access more than 640 K…”

I think either “the” or “its” after “always been” must be removed.

By the way, this series of Windows articles are great

Jimmy Maher

April 20, 2020 at 1:06 pm

Thanks!