Microsoft Windows 3.0’s conquest of the personal-computer marketplace was bad news for a huge swath of the industry. On the software side, companies like Lotus and WordPerfect, only recently so influential that it was difficult to imagine a world that didn’t include them, would never regain the clout they had enjoyed during the 1980s, and would gradually fade away entirely. On the hardware side, it was true that plenty of makers of commodity PC clones were happier to work with a Microsoft who believed a rising tide lifted all their boats than against an IBM that was continually trying to put them out of business. But what of Big Blue themselves, still the biggest hardware maker of all, who were accustomed to dictating the direction of the industry rather than being dictated to by any mere maker of software? And what, for that matter, of Apple? Both Apple and IBM found themselves in the unaccustomed position of being the outsiders in this new Windows era of computing. Each must come to terms with Microsoft’s newfound but overwhelming power, even as each remained determined not to give up the heritage of innovation that had gotten them this far.

Having chosen to declare war on Microsoft in 1988, Apple seemed to have a very difficult road indeed in front of them — and that was before Xerox unexpectedly reentered the picture. On December 14, 1989, the latter shocked everyone by filing a $150 million lawsuit of their own, accusing Apple of ripping off the user interface employed by the Xerox Star office system before Microsoft allegedly ripped the same thing off from Apple.

The many within the computer industry who had viewed the implications of Apple’s recent actions with such concern couldn’t help but see this latest development as the perfect comeuppance for their overweening position on “look and feel” and visual copyright. These people now piled on with glee. “Apple can’t have it both ways,” said John Shoch, a former Xerox PARC researcher, to the New York Times. “They can’t complain that Microsoft [Windows has] the look and feel of the Macintosh without acknowledging the Mac has the look and feel of the Star.” In his 1987 autobiography, John Sculley himself had written the awkward words that “the Mac, like the Lisa before it, was largely a conduit for technology” developed by Xerox. How exactly was it acceptable for Apple to become a conduit for Xerox’s technology but unacceptable for Microsoft to become a conduit for Apple’s? “Apple is running around persecuting Microsoft over things they borrowed from Xerox,” said one prominent Silicon Valley attorney. The Xerox lawsuit raised uncomfortable questions of the sort which Apple would have preferred not to deal with: questions about the nature of software as an evolutionary process — ideas building upon ideas — and what would happen to that process if everyone started suing everyone else every time somebody built a better mousetrap.

Still, before we join the contemporary commentators in their jubilation at seeing Apple hoisted with their own petard, we should consider the substance of this latest case in more detail. Doing so requires that we take a closer look at what Xerox had actually created back in the day, and take particularly careful note of which of those creations was named in their lawsuit.

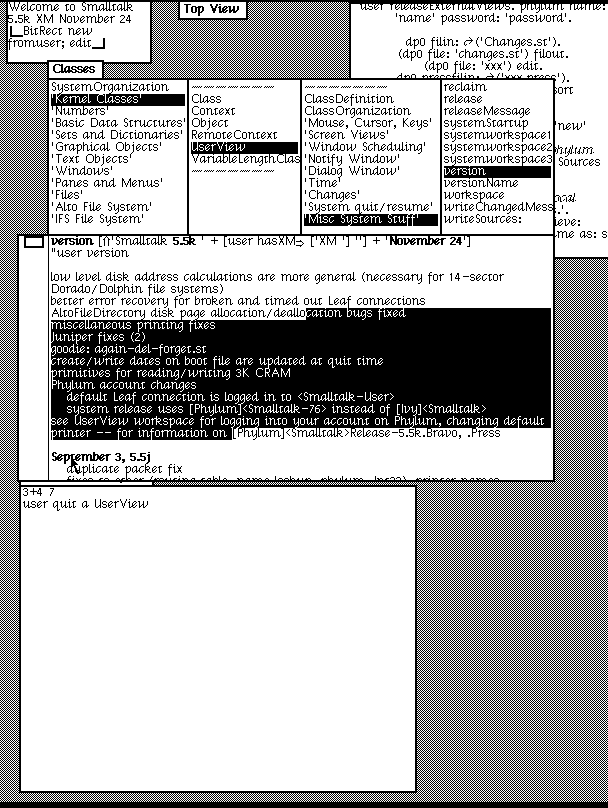

Broadly speaking, Xerox created two different GUI environments in the course of their years of experimentation in this area. The first and most heralded of these was known as the Smalltalk environment, pioneered by the researcher Alan Kay in 1975 on a machine called the Xerox Alto, which had been designed at PARC and was built only in limited quantities, without ever being made available for sale through traditional commercial channels. This was the machine and the environment which Steve Jobs so famously saw on his pair of visits to PARC in December of 1979 — visits which directly inspired first the Apple Lisa and later the Macintosh.

The Smalltalk environment running on a Xerox Alto, a machine built at Xerox PARC in the mid-1970s but never commercially released. Many of the basic ideas of the GUI are here, but much remains to be developed and much is implemented only in a somewhat rudimentary way. For instance, while windows can overlap one another, windows that are obscured by other windows are never redrawn. In this way the PARC researchers neatly avoided one of the most notoriously difficult aspects of implementing a windowing system. When Apple programmer Bill Atkinson was part of the delegation who made that December 1979 visit to PARC, he thought he did see windows that continued to update even when partially obscured by other windows. He then proceeded to find a way to give the Lisa and Macintosh’s windowing engine this capability. Seldom has a misunderstanding had such a fortuitous result.

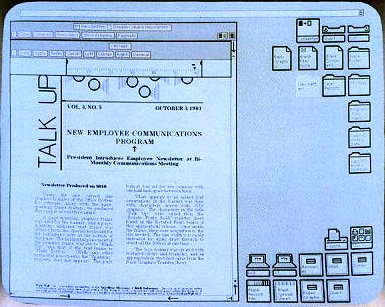

Xerox’s one belated attempt to parlay PARC’s work on the GUI into a real commercial product took the form of the Xerox Star, an integrated office-productivity system costing $16,500 per workstation upon its release in 1981. Neither Kay nor most of the other key minds behind the Alto and Smalltalk were involved in its development. Yet its GUI strikes modern eyes as far more refined than that of Smalltalk. Importantly, the metaphor of the desktop, and the soon-to-be ubiquitous idea of a skeuomorphic user interface built from stand-ins for real-world office equipment — a trash can, file folders, paper documents, etc. — were apparently the brainchildren of the product-focused Star team rather than the blue-sky researchers who worked at PARC during the 1970s.

The Xerox Star office system, which was released in 1981. This system looks much more familiar to our modern eyes than the Xerox Alto’s Smalltalk, sporting such GUI staples as menus, widgets, and icons. Yet it was still lacking in many areas compared to the GUIs that would follow. Windows were neither free-dragging nor overlapping, and its menus were one-shot commands, not drop-down lists. It most resembles VisiCorp’s Visi On among the GUIs we’ve looked at closely in this series of articles. Both products serve as a telling snapshot of the state of the art in GUIs just before Apple shook everything up with the Lisa and Macintosh.

The Star, which failed dismally due to its high price and Xerox’s lack of marketing acumen, is often reduced to little more than a footnote to the story of PARC, treated as a workmanlike translation of PARC’s grand ideas and technologies into a somewhat problematic product. Yet there’s actually an important philosophical difference between Smalltalk and the Star, born of the different engineering cultures that produced them. Smalltalk emphasized programming, to the point that the environment could literally be re-programmed on the fly as you used it. This was very much in keeping with the early ethos of home computing as well, when all machines booted into BASIC and an ability to program was considered key for every young person’s future — when every high school, it seemed, was instituting classes in BASIC or Pascal. The Star, on the other hand, was engineered to ensure that the non-technical office worker never needed to see a line of code; this machine conformed to the human rather than asking the human to conform to it. One might say that Smalltalk was intended to make the joy of computing — of using the computer as the ultimate anything machine — as accessible as possible, while the Star was intended to make you forget that you were using a computer at all.

While I certainly don’t wish to dismiss or minimize the visionary work down at PARC in the 1970s, I do believe that historians of early microcomputer GUIs have tended to somewhat over-emphasize the innovations of Smalltalk and the Alto while selling the Xerox Star’s influence rather short. Steve Jobs’s early visits to PARC are given much weight in the historical record, but it’s sometimes forgotten that anything Apple wished to copy from Smalltalk had to be done from memory; they had no regular access to the PARC technology after those visits. The Star, on the other hand, did ship as a commercial product some two years before the Lisa. Notably, the Star’s philosophy of hiding the “computery” aspects of computing from the user would turn out to be much more in line with the one that guided the Lisa and Macintosh than was Smalltalk’s approach of exposing its innards for all to see and modify. The Star was a closed black box, capable of running only the software provided for it by Xerox. Similarly, the Lisa couldn’t be programmed at all except by buying a second Lisa and chaining the two machines together, and even the Macintosh never had the reputation of being a hacker’s plaything in the way of the earlier, more hobbyist-oriented Apple II. The Lisa and Macintosh thus joined the Star in embracing a clear divide between coding professionals, who wrote the software, and end users, who bought it and used it to get stuff done. One could thus say that they resemble the Star much more than Smalltalk not only visually but philosophically.

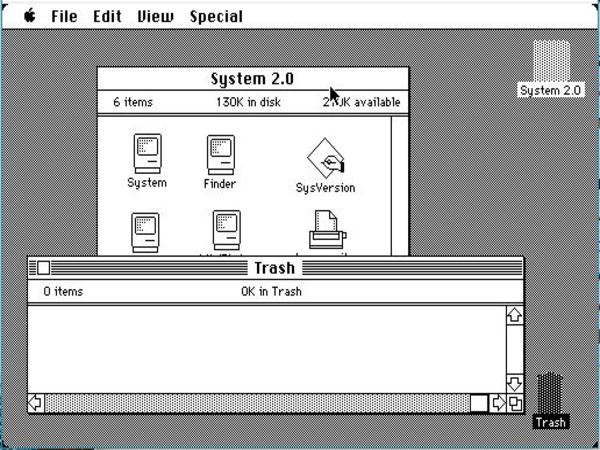

Counter-intuitive though it is to the legend of the Macintosh being a direct descendant of the work Steve Jobs saw at PARC, Xerox sued Apple over the interface elements they had allegedly stolen from the Star rather than Smalltalk. In evaluating the merits of their claim today, I’m somewhat hamstrung by the fact that no working emulators of the original Star exist, forcing me to rely on screenshots, manuals, and contemporary articles about the system. (This has changed since this article was written; see Ian Crossfield’s comment below.) Nevertheless, those sources are enough to identify an influence of the Star upon the Macintosh that’s every bit as clear-cut as that of the Macintosh upon Microsoft Windows. It strains the bounds of credulity to believe that the Mac team coincidentally developed a skeuomorphic interface using many of the very same metaphors, including the central metaphor of the desktop, without taking the example of the Star to heart. To this template they added much innovation, including such modern GUI staples as free-dragging and overlapping windows, drop-down menus, and draggable icons, along with staple mouse gestures like the hold-and-drag and the double-click. Nonetheless, the foundations of the Mac can be seen in the Star much more obviously than they can in Smalltalk. Crudely put, Apple copied the Star while adding a whole lot of original ideas to the mix, and then Microsoft copied Apple, adding somewhat fewer ideas of their own. The people rejoicing over the Xerox lawsuit, in other words, had this aspect of the story basically correct, even if they did have a tendency to confuse Smalltalk and the Star and misunderstand which of them Xerox was actually suing over.

MacOS started with the skeuomorphic desktop model of the Xerox Star and added to it such fundamental modern GUI concepts as pull-down menus, hold-and-drag, the double-click, and free-dragging, overlapping windows that update themselves even when partially occluded by others.

Of course, the Xerox lawsuit against Apple was legally suspect for all the same reasons as the Apple lawsuit against Microsoft. If anything, there were even more reasons to question the good faith of Xerox’s lawsuit than Apple’s. The source of Xerox’s sudden litigiousness was none other than Bill Lowe, the former IBM executive whose disastrous PS/2 brainchild had already made his attitude toward intellectual property all too clear. Lowe had made a soft landing at Xerox after leaving IBM, and was now telling the press about the “aggressive stand on copyright and patent issues” his new company would be taking from now on. It certainly sounded like he intended to weaponize the long string of innovations credited to Xerox PARC and the Star — using these ideas not to develop products, but to sue others who dared to do so. Lowe’s hoped-for endgame was weirdly similar to his misbegotten hopes for the PS/2’s Micro Channel Architecture: Xerox would eventually license the right to make GUIs and other products to companies like Apple and Microsoft, profiting off their innovations of the past without having to do much of anything in the here and now. This understandably struck many of the would-be licensees as a less than ideal outcome. That, at least, was something on which Apple, Microsoft, and just about everyone else in the computer industry could agree.

Apple’s legal team was left in one heck of an awkward fix. They would seemingly have to argue against Xerox’s broad interpretation of visual copyright while arguing for that same broad interpretation in their own lawsuit against Microsoft — and all in the same court in front of the same judge. Any victory against Xerox could lead to their own words being used against them to precipitate a loss against Microsoft, and vice versa.

It was therefore extremely fortunate for Apple that Judge Vaughn R. Walker struck down Xerox’s lawsuit almost before it had gotten started. At the time of their court filing, Xerox was already outside the statute of limitations for a copyright-infringement claim of the type that Apple had filed against Microsoft. They had thus been forced to make a claim of “unfair competition” instead — a claim which carried with it a much higher evidentiary standard. On March 24, 1990, Judge Walker tossed the Xerox lawsuit, saying it didn’t meet this standard and making the unhelpful observation to Xerox that it would have made a lot more sense as a copyright claim. Apple had dodged a bullet, and Bill Lowe would have to find some other way to make money for his new company.

With the Xerox sideshow thus dispensed with, Apple’s lawyers could turn their attention back to the main event, their case against Microsoft. The same Judge Walker who had decided in their favor against Xerox had taken over from Judge William Schwarzer in the other case as well. No longer needing to worry about protecting their flank from Xerox, Apple’s lawyers pushed for what they called “total concept” or “gestalt” look and feel as the metric for deciding whether Windows infringed upon MacOS. But on March 6, 1991, Judge Walker agreed with Microsoft’s contention that the case should be decided on a “function by function” basis instead. Microsoft began assembling reels of video demonstrating what they claimed to be pre-Macintosh examples of each one of the ten interface elements that were at issue in the case.

So, even as Windows 3.0 was conquering the world outside the courtroom, both sides remained entrenched in their positions inside it, and the case, already three years old, ground on and on through motion after counter-motion. “We’re going to trial,” insisted Edward B. Stead, Apple’s general counsel, but it wasn’t at all clear when that trial would take place. Part of the problem was the sheer pace of external events. As Windows 3.0 became the fastest-selling piece of commercial software the world had ever seen, the scale and scope of Apple’s grievances just kept growing to match. From the beginning, a key component of Microsoft’s strategy had been to gum up the works in court while Windows 3.0 became a fait accompli, the new standard in personal computing, too big for any court to dare attack. That strategy seemed to be working beautifully. Meanwhile Apple’s motions grew increasingly far-fetched, beginning to take on a distinct taint of desperation.

In May of 1991, for example, Apple’s lawyers surprised everyone with a new charge. Still looking for a way to expand the case beyond those aspects of Windows 2 and 3 which hadn’t existed in Windows 1, they now claimed that the 1985 agreement which had been so constantly troublesome to them in that respect was invalid. Microsoft had allegedly defrauded Apple by saying they wouldn’t make future versions of Windows any more similar to the Macintosh than the first was, and then going against their word. This new charge was a hopeful exercise at best, especially given that the agreement Apple claimed Microsoft had broken had been, if it ever existed, strictly a verbal one; absolutely no language to this effect was to be found in the text of the 1985 agreement. Microsoft’s lawyers, once they picked their jaws up off the floor, were left fairly spluttering with indignation. Attorney David T. McDonald labeled the argument “desperate” and “preposterous”: “We’re on the five-yard line, the goal is in sight, and Apple now shows up and says, ‘How about lacrosse instead of football?'” Thankfully, Judge Walker found Apple’s argument to be as ludicrous as McDonald did, thus sparing us all any more sports metaphors.

On April 14, 1992 — now more than four years on from Apple’s original court filing, in a computing climate transformed almost beyond recognition by the rise of Windows — Judge Walker ruled against Apple’s remaining contentions in devastating fashion. Much of the 1985 agreement was indeed invalid, he said, but not for the reason Apple had claimed. What Microsoft had licensed in that agreement were largely “generic ideas” that should never be susceptible to copyright protection in the first place. Apple was entitled to protect very specific visual elements of their displays, such as the actual icons they used, but they weren’t entitled to protect the notion of a screen with icons in the abstract, nor even that of icons representing specific real-world objects, such as a disk, a folder, or a trash can. Microsoft or anyone else could, in other words, make a GUI with a trash-can icon if they wished; they just couldn’t transplant Apple’s specific rendering of a trash can into their own work. Applying the notion of visual copyright any more broadly than this “would afford too much protection and yield too little competition,” said the judge. Apple’s slippery notion of look and feel, it appeared, was dead as a basis for copyright. After all the years of struggle and at least $10 million in attorney fees on both sides, Judge Walker ruled that Apple’s case was too weak to even present before a jury. “Through five years, there were many points where the case got continuously refined and focused and narrowed,” said a Microsoft spokesman. “Eventually, there was nothing left.”

Still, one can’t accuse Apple of giving up without a fight. They dragged the case out for almost three more years after this seemingly definitive defeat. When the Ninth Circuit Court of Appeals upheld Judge Walker’s judgment in 1994, Apple tried to take the case all the way to the Supreme Court. On February 21, 1995, that august body announced that they would not hear it, thus finally putting an end to the whole tortuous odyssey.

The same press which had been so consumed by the case circa 1988 barely noticed its later developments. The narrative of Microsoft’s utter dominance and Apple’s weakness had become so prevalent by the early 1990s that it had become difficult to imagine any outcome other than a Microsoft victory. Yet the case’s anticlimactic ending obscured how dangerous it had once been, not only for Microsoft but for the software industry as a whole. Whatever one thinks in general of the products and business practices of the opposing sides, a victory for Apple would have been a terrible result for the personal-computer industry. The court got this one right in striking all of Apple’s claims down so thoroughly — something that can’t always be said about collisions between technology and the law. Bill Gates could walk away knowing the long struggle had struck an important blow for an ongoing culture of innovation in the software industry. Indeed, like the victory of his hero Henry Ford over a group of automotive patent trolls eighty years before, his victory would benefit his whole industry along with his company — which isn’t to say, of course, that he would have fought the war purely for the sake of altruism.

John Sculley, for his part, was gone from Apple well before the misguided lawsuit he had fostered came to its final conclusion. He was ousted by his board of directors in 1993, after it became clear that Apple would post a loss of close to $200 million for the year. Yet his departure brought no relief to the problems of dwindling market share, dwindling focus, and, most worrisome of all, a dwindling sense of identity. Apple languished, embittered about the ideas Microsoft had “stolen” from them, while Windows conquered the world. One could certainly argue that they deserved a better fate on the basis of a Macintosh GUI that still felt far slicker and more intuitive than Microsoft’s, but the reality was that their own poor decisions, just as much as Microsoft’s ruthlessness, had led them to this sorry place. The mid-1990s saw them mired in the greatest crisis of confidence of their history, licensing the precious Macintosh technology to clone makers and seriously considering breaking themselves up into two companies to appease their angriest shareholder contingents. For several years to come, there would be a real question of whether any part of the company would survive to see the new millennium. Gone were the Jobsian dreams of changing the world through better computing; Apple was reduced to living on Microsoft’s scraps. Microsoft had won in the marketplace as thoroughly as they had in court.

But the full story of Apple’s 1990s travails is one to take up at another time. Now, we should turn to IBM, to see how they coped after the MS-DOS-based Windows, rather than the OS/2-based Presentation Manager, made the world safe for the GUI.

Throughout 1990, that year of wall-to-wall hype over Windows 3.0, Microsoft persisted in dampening expectations for OS/2 in a way that struck IBM as deliberate. The agreement that MS-DOS and Windows were for low-end computers, OS/2 and the Presentation Manager for high-end ones, seemed to have been forgotten by Microsoft as soon as Bill Gates and Steve Ballmer left the Fall 1989 Comdex at which it had been announced. Gates now said that it could take OS/2 another three or four years to inherit the throne from MS-DOS, and by that time it would probably be running Windows rather than Presentation Manager anyway. Ballmer said that OS/2 was really meant to compete with high-end client/server operating systems like Unix, not with desktop operating systems like MS-DOS. They both said that “there will be a DOS 5, 6, and 7, and a Windows 4 and 5.” Meanwhile IBM was predictably incensed by Windows 3.0’s use of protected mode and the associated shattering of the 640 K barrier; that sort of thing was supposed to have been the purview of the more advanced OS/2.

Back in late 1988, Microsoft had hired a system-software architect from DEC named David Cutler to oversee the development of OS/2 2.0. No shrinking violet, he promptly threw out virtually all of the existing OS/2 code, which he pronounced a bloated mess, and started over from scratch on an operating system that would fulfill Microsoft’s original vision for OS/2, being targeted at machines with an 80386 or better processor. The scope and ambition of this project, along with the fact that Microsoft wished to keep it entirely in-house, had turned into yet one more source of tension between the two companies; it could be years still before Cutler’s OS/2 2.0 was ready. There remained little semblance of any coordinated strategy between the two companies, in public or in private.

And yet, in September of 1990, IBM and Microsoft announced a new roadmap for OS/2’s future. The two companies together would finish up one more version of the first-generation OS/2 — OS/2 1.3, which was scheduled to ship the following month — and that would be the end of that lineage. Then IBM would develop an OS/2 2.0 alone — a project they hoped to have done in a year or so — while Cutler’s team at Microsoft continued with the complete rewrite that was now to be marketed as OS/2 3.0.

The announcement, whose substance amounted to a tacit acknowledgement that the two companies simply couldn’t work together anymore on the same project, caused heated commentary in the press. It seemed a convoluted way to evolve an operating system at best, and it was happening at the same time that Microsoft seemed to be charging ahead — and with massive commercial success at that — on MS-DOS and Windows as the long-term face of personal computing in the 1990s. InfoWorld wrote of a “deepening rift” between Microsoft and IBM, characterizing the latest agreement as IBM “seizing control of OS/2’s future.” “Although in effect IBM and Microsoft will say they won’t divorce ‘for the sake of the children,'” said an inside source to the magazine, “in fact they are already separated, and seeking new relationships.” Microsoft pushed back against the “divorce” meme only in the most tepid fashion. “You may not understand our marriage,” said Steve Ballmer, “but we’re not getting divorced.” (One might note that when a couple have to start telling friends that they aren’t getting a divorce, it usually isn’t a good sign about the state of their relationship…)

Charles Petzold, writing in PC Magazine, summed up the situation created by all the mixed messaging: “The key words in operating systems are confusion, uncertainty, anxiety, and doubt. Unfortunately, the two guiding lights of this industry — IBM and Microsoft — are part of the problem rather than part of the solution.” If anything, this view of IBM as an ongoing “guiding light” was rather charitable. OS/2 was drowning in the Windows hype. “The success of Windows 3.0 has already caused OS/2 acceptance to go from dismal to cataclysmic,” wrote InfoWorld. “Analysts have now pushed back their estimates of when OS/2 will gain broad popularity to late this decade, with some predicting that the so-called next-generation operating system is all but dead.”

The final divorce of Microsoft from IBM came soon after to give the lie to all of the denials. In July of 1991, Microsoft announced that the erstwhile OS/2 3.0 was to become its own operating system, separate from both OS/2 and MS-DOS, called Windows NT. With this news, which barely made an impression in the press — it took up less than one quarter of page 87 of that week’s InfoWorld — a decade of cooperation came to an end. From now on, Microsoft and IBM would exist strictly as competitors in a marketplace where Microsoft enjoyed all the advantages. In the final divorce settlement, IBM gave up all rights to the upcoming Windows NT and agreed to pay a small royalty on all future sales of OS/2 (whatever those might amount to), while Microsoft paid a lump sum of around $30 million to be free and clear of their last obligations to the computing giant that had made them what they now were. They greeted this watershed moment with no sentimentality whatever. In a memo that leaked to the press, Bill Gates instead rejoiced that Microsoft was finally free of IBM’s “poor code, poor design, and other overhead.”

Even as the unlikely partnership’s decade of dominance was passing away, Microsoft’s decade of sole dominion was just beginning. The IBM PC and its clones had become the Wintel standard, and would require no further input from Big Blue, thank you very much. IBM’s share of the standard’s sales was already down to 17 percent, and would just keep on falling from there. “Microsoft is now driving the industry, not IBM,” wrote the newsletter Software Publishing by way of stating the obvious.

Which isn’t to say that IBM was going away. While Microsoft was celebrating their emancipation, IBM continued plodding forward with OS/2 2.0, which, like the aborted version 3.0 that was now to be known as Windows NT, ran only on an 80386 or better. They made a big deal of the work-in-progress at the Fall 1991 Comdex without managing to change the narrative around it one bit. The total bill for OS/2 was approaching an astonishing $1 billion, and they had very little to show for it. One Wall Street analyst pronounced OS/2 “the greatest disaster in IBM’s history. The reverberations will be felt throughout the decade.”

At the end of that year, IBM had to report — incredibly, for the very first time in their history — an annual loss. And it was no trivial loss either. The deficit was $2.8 billion, on revenues that had fallen 6.1 percent from the year before. The following year would be even worse, to the tune of a $5 billion loss. No company in the history of the world had ever lost this much money this quickly; by the last quarter of 1993, IBM would be losing $45 million every day. Microcomputers were continuing to replace the big mainframes and minicomputers that had once been the heart of IBM’s business. Now, though, fewer and fewer of those replacement machines were IBM personal computers; whole segments of their business were simply evaporating. The vague distrust IBM had evinced toward Microsoft for most of the 1980s now seemed amply justified, as all of their worst nightmares came true. IBM seemed old, bloated, and, worst of all, irrelevant next to the fresh-faced young Microsoft.

OS/2 2.0 started reaching consumers in May of 1992. It was a surprisingly impressive piece of work; perhaps the relationship with Microsoft had been as frustrating for IBM’s programmers as it had been for their counterparts. Certainly OS/2 2.0 was a far more sophisticated environment than Windows 3.0. Being designed to run only on 32-bit microprocessors like the 80386 and 80486, it utilized them to their maximum potential, which was much more than one could say for Windows, while also being much more stable than Microsoft’s notoriously crash-prone environment. In addition to native OS/2 software, it could run multiple MS-DOS applications at the same time with complete compatibility, and, in a new wrinkle added to the mix by IBM, could now run many Windows applications as well. IBM called it “a better DOS than DOS and a better Windows than Windows,” a claim which carried a considerable degree of truth. They pointedly cut its suggested list price of $140 to just $50 for Windows users looking to “upgrade.”

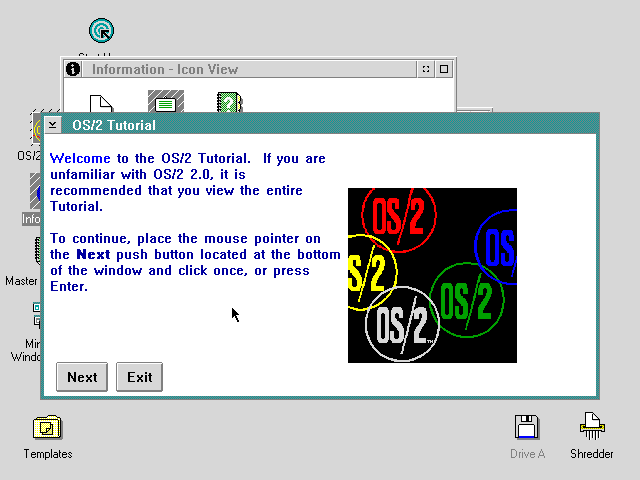

A Quick Tour of OS/2 2.0

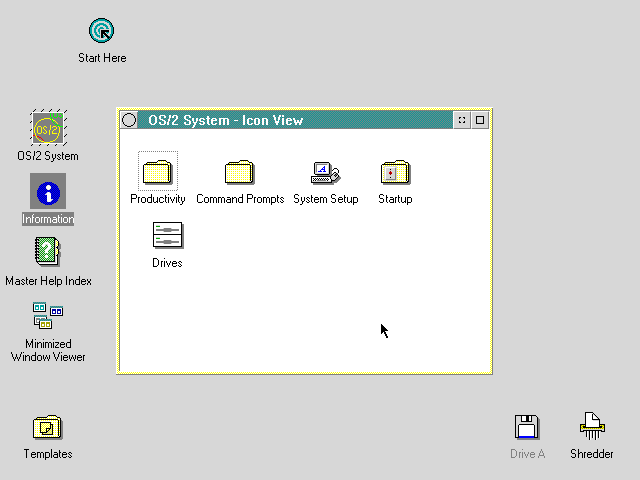

Shipping on more than twenty 3.5-inch diskettes, OS/2 2.0 was by far the most elaborate operating system yet made for its family of personal computers. When we boot it up for the first time, we’re given a lengthy interactive tutorial of a sort that was seldom seen in software of 1992 vintage.

The notion of a “Presentation Manager” GUI that’s separate from the core OS/2 operating system has been dropped; OS/2 is now simply OS/2, with a GUI as the standard, built-in interface. From the opening tutorial to the look of its desktop, the whole package reminds one of nothing so much as the much later Windows 95. We have a full-fledged, functioning desktop workspace here, with icons representing folders and disks, and a “shredder” to replace the usual trash can.

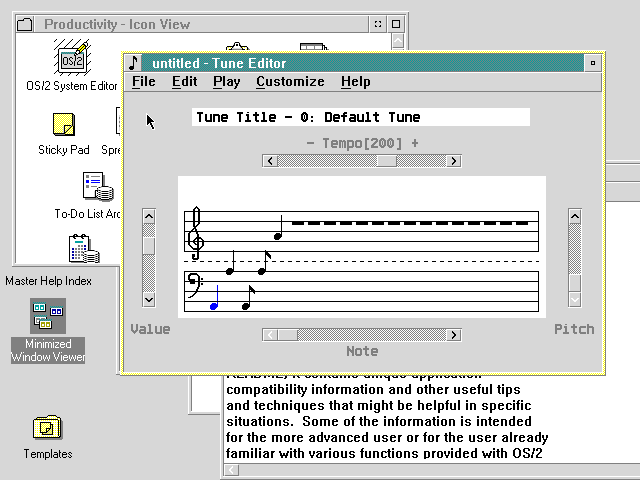

After shipping earlier versions of OS/2 with no extra tools or applets whatsoever, IBM got wise this time around and included plenty of stuff to play with, like this neat little music editor.

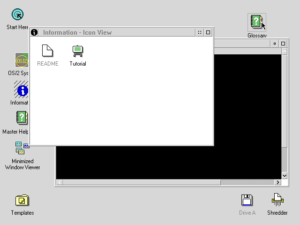

Some aspects of the interface are a little strange. Dragging with the mouse is accomplished using the right button rather than the left — a fine example of OS/2’s superficial similarity and granular dissimilarity to Windows, which so many users who had to move back and forth between the environments found so frustrating.

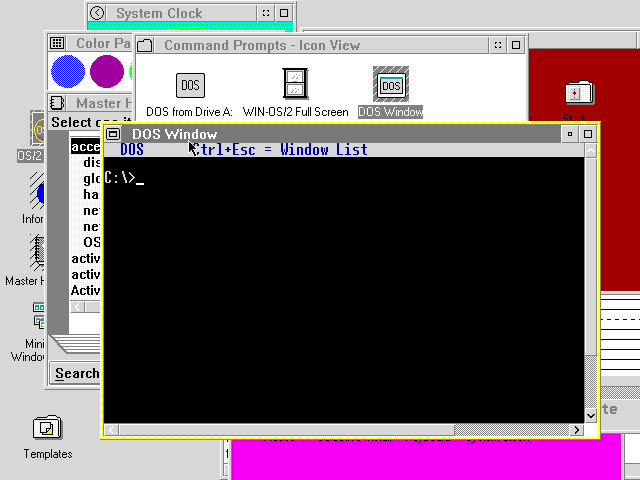

Of course, MS-DOS is still around if you need it. Unlike in OS/2 1.x, here you can have as many MS-DOS windows and applications open as you like.

But, despite its many merits, OS/2 2.0 was a lost cause from the start, at least if one’s standard for success was Windows. Windows 3.1 rolled out of Microsoft at almost the same instant, and no number of comparisons in techie magazines pointing out the alternative operating system’s superiority could have any impact on a mass market that was now thoroughly conditioned to accept Windows as the standard. Giant IBM’s operating system had become, as the New York Times put it, “an unlikely underdog.”

In truth, the contest was so lopsided by this point as to be laughable. Microsoft, who had long-established relationships with the erstwhile clone makers — now known as makers of hardware conforming to the Wintel standard — understood early, as IBM did only much too late, that the best and perhaps only way to get your system software widely accepted was to sell it pre-installed on the computers that ran it. Thus, by the time OS/2 2.0 shipped, Windows already came pre-installed on nine out of ten personal computers on the market, thanks to a smart and well-funded “original equipment manufacturer” sales team that was overseen personally by Steve Ballmer. And thus, simply by buying a new computer, one automatically became a Windows user. Running OS/2, on the other hand, required that the purchaser of one of these machines decide to go out and buy an alternative to the perfectly good Microsoft software already on her hard drive, and then go through all the trouble of installing and configuring it. Very few people had the requisite combination of motivation and technical skill for an exercise like that.

As a final indignity, IBM themselves had to bow to customer demand and offer MS-DOS and Windows as an optional alternative to OS/2 on their own machines. People wanted the system software that they used at the office, that their friends had, that could run all of the products on the shelves of their local computer store with 100-percent fidelity (with the exception of that oddball Mac stuff off in the corner, of course). Only the gearheads were going to buy OS/2 because it was a 32-bit instead of a 16-bit operating system or because it offered preemptive instead of cooperative multitasking, and they were a tiny slice of an exploding mass market in personal computing.

That said, OS/2 did have a better fate than many another alternative operating system during this period of Windows, Windows everywhere. It stayed around for years even in the face of that juggernaut, going through two more major revisions and many minor ones, the very last coming as late as December of 2001. It remained always a well-respected operating system that just couldn’t break through Microsoft’s choke hold on mainstream computing, having to content itself with certain niches — powering automatic teller machines was a big one for a long time — where its stability and robustness served it well.

So, IBM, and Apple as well, had indeed become the outsiders of personal computing. They would retain that dubious status for the balance of the decade of the 1990s, offering alternatives to the monoculture of Windows computing that appealed only to the tech-obsessed, the idealistic, or the just plain contrarian. Even as much of what I’ve related in this article was taking place, they were being forced into one another’s arms for the sake of sheer survival. But the story of that second unlikely IBM partnership — an awkward marriage of two corporate cultures even more dissimilar than those of Microsoft and IBM — must, like so much else, be told at another time. All that’s left to tell in this series is the story of how Windows, with the last of its great rivals bested, finished the job of conquering the world.

(Sources: the books The Making of Microsoft: How Bill Gates and His Team Created the World’s Most Successful Software Company by Daniel Ichbiah and Susan L. Knepper, Hard Drive: Bill Gates and the Making of the Microsoft Empire by James Wallace and Jim Erickson, Gates: How Microsoft’s Mogul Reinvented an Industry and Made Himself the Richest Man in America by Stephen Manes and Paul Andrews, Computer Wars: The Fall of IBM and the Future of Global Technology by Charles H. Ferguson and Charles R. Morris, and Apple Confidential 2.0: The Definitive History of the World’s Most Colorful Company by Owen W. Linzmayer; PC Week of September 24 1990 and January 15 1991; InfoWorld of September 17 1990, May 29 1991, July 29 1991, October 28 1991, and September 6 1993; New York Times of December 29 1989, March 24 1990, March 7 1991, May 24 1991, January 18 1992, August 8 1992, January 20 1993, April 19 1993, and June 2 1993; Seattle Times of June 2 1993. Finally, I owe a lot to Nathan Lineback for the histories, insights, comparisons, and images found at his wonderful online “GUI Gallery.”)

Footnotes

[1] This has changed since this article was written; see Ian Crossfield’s comment below.