In 1854, an Austrian priest and physics teacher named Gregor Mendel sought and received permission from his abbot to plant a two-acre garden of pea plants on the grounds of the monastery at which he lived. Over the course of the next seven years, he bred together thousands upon thousands of the plants under carefully controlled circumstances, recording in a journal the appearance of every single offspring that resulted, as defined by seven characteristics: plant height, pod shape and color, seed shape and color, and flower position and color. In the end, he collected enough data to formulate the basis of the modern science of genetics, in the form of a theory of dominant and recessive traits passed down in pairs from generation to generation. He presented his paper on the subject, “Experiments on Plant Hybridization,” before the Natural History Society of Austria in 1865, and saw it published in a poorly circulated scientific journal the following year.

And then came… nothing. For various reasons — perhaps due partly to the paper’s unassuming title, perhaps due partly to the fact that Mendel was hardly a known figure in the world of biology, undoubtedly due largely to the poor circulation of the journal in which it was published — few noticed it at all, and those who did dismissed it seemingly without grasping its import. Most notably, Charles Darwin, whose On the Origin of Species had been published while Mendel was in the midst of his own experiments, seems never to have been aware of the paper at all, thereby missing this key gear in the mechanism of evolution. Mendel was promoted to abbot of his monastery shortly after the publication of his paper, and the increased responsibilities of his new post ended his career as a scientist. He died in 1884, remembered as a quiet man of religion who had for a time been a gentleman dabbler in the science of botany.

But then, at the turn of the century, the German botanist Carl Correns stumbled upon Mendel’s work while conducting his own investigations into floral genetics, becoming in the process the first to grasp its true significance. To his huge credit, he advanced Mendel’s name as the real originator of the set of theories which he, along with one or two other scientists working independently, was beginning to rediscover. Correns effectively shamed those other scientists as well into acknowledging that Mendel had figured it all out decades before any of them even came close. It was truly a selfless act; today the name of Carl Correns is unknown except in esoteric scientific circles, while Gregor Mendel’s has been done the ultimate honor of becoming an adjective (“Mendelian”) and a noun (“Mendelism”) locatable in any good dictionary.

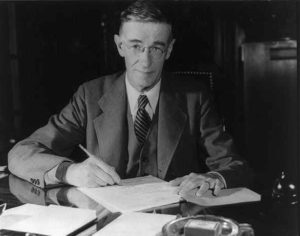

So, all’s well that ends well, right? Well, maybe, but maybe not. Some 30 years after the rediscovery of Mendel’s work, an American named Vannevar Bush, dean of MIT’s School of Engineering, came to see the 35 years that had passed between the publication of Mendel’s theory and the affirmation of its importance as a troubling symptom of the modern condition. Once upon a time, all knowledge had been regarded as of a piece, and it had been possible for a great mind to hold within itself huge swathes of this collective knowledge of humanity, everything informing everything else. Think of that classic example of a Renaissance man, Leonardo da Vinci, who was simultaneously a musician, a physicist, a mathematician, an anatomist, a botanist, a geologist, a cartographer, an alchemist, an astronomer, an engineer, and an inventor. Most of all, of course, he was a great visual artist, but he used everything else he was carrying around in that giant brain of his to create paintings and drawings as technically meticulous as they were artistically sublime.

By Bush’s time, however, the world had long since entered the Age of the Specialist. As the sheer quantity of information in every field exploded, those who wished to do worthwhile work in any given field — even those people gifted with giant brains — were increasingly being forced to dedicate their intellectual lives entirely to that field and only that field, just to keep up. The intellectual elite were in danger of becoming a race of mole people, closeted one-dimensionals fixated always on the details of their ever more specialized trades, never on the bigger picture. And even then, the amount of information surrounding them was so vast, and existing systems for indexing and keeping track of it all so feeble, that they could miss really important stuff within their own specialties; witness the way the biologists of the late nineteenth century had missed Gregor Mendel’s work, and the 35-year head start it had cost the new science of genetics. “Mendel’s work was lost,” Bush would later write, “because of the crudity with which information is transmitted between men.” How many other major scientific advances were lying lost in the flood of articles being published every year, a flood that had increased by an order of magnitude just since Mendel’s time? “In this are thoughts,” wrote Bush, “certainly not often as great as Mendel’s, but important to our progress. Many of them become lost; many others are repeated over and over.” “This sort of catastrophe is undoubtedly being repeated all around us,” he believed, “as truly significant attainments become lost in the sea of the inconsequential.”

Bush’s musings were swept aside for a time by the rush of historical events. As the prospect of another world war loomed, he became President Franklin Delano Roosevelt’s foremost advisor on matters involving science and engineering. During the war, he shepherded through countless major advances in the technologies of attack and defense, culminating in the most fearsome weapon the world had ever known: the atomic bomb. It was actually this last that caused Bush to return to the seemingly unrelated topic of information management, a problem he now saw in a more urgent light than ever. Clearly the world was entering a new era, one with far less tolerance for the human folly, born of so much context-less mole-person ideology, that had spawned the current war.

Practical man that he was, Bush decided there was nothing for it but to roll up his sleeves and make a concrete proposal describing how humanity could solve the needle-in-a-haystack problem of the modern information explosion. Doing so must entail grappling with something as fundamental as “how creative men think, and what can be done to help them think. It is a problem of how the great mass of material shall be handled so that the individual can draw from it what he needs — instantly, correctly, and with utter freedom.”

As revolutionary manifestos go, Vannevar Bush’s “As We May Think” is very unusual in terms of both the man who wrote it and the audience that read it. Bush was no Karl Marx, toiling away in discontented obscurity and poverty. On the contrary, he was a wealthy upper-class patrician who was, as a member of the White House inner circle, about as fabulously well-connected as it was possible for a man to be. His article appeared first in the July 1945 edition of the Atlantic Monthly, hardly a bastion of radical thought. Soon after, it was republished in somewhat abridged form by Life, the most popular magazine on the planet. Thereby did this visionary document reach literally millions of readers.

With the atomic bomb still a state secret, Bush couldn’t refer directly to his real reasons for wanting so urgently to write down his ideas now. Yet the dawning of the atomic age nevertheless haunts his article.

It is the physicists who have been thrown most violently off stride, who have left academic pursuits for the making of strange destructive gadgets, who have had to devise new methods for their unanticipated assignments. They have done their part on the devices that made it possible to turn back the enemy, have worked in combined effort with the physicists of our allies. They have felt within themselves the stir of achievement. They have been part of a great team. Now, as peace approaches, one asks where they will find objectives worthy of their best.

Seen in one light, Bush’s essay is similar to many of those that would follow from other Manhattan Project alumni during the uncertain interstitial period between the end of World War II and the onset of the Cold War. Bush was like many of his colleagues in feeling the need to advance a utopian agenda to counter the apocalyptic potential of the weapon they had wrought, in needing to see the ultimate evil that was the atomic bomb in almost paradoxical terms as a potential force for good that would finally shake the world awake.

Bush was true to his engineer’s heart, however, in basing his utopian vision on technology rather than politics. The world was drowning in information, making the act of information synthesis — intradisciplinary and interdisciplinary alike — ever more difficult.

The difficulty seems to be, not so much that we publish unduly in view of the extent and variety of present-day interests, but rather that publication has been extended far beyond our present ability to make real use of the record. The summation of human experience is being expanded at a prodigious rate, and the means we use for threading through the consequent maze to the momentarily important item is the same as was used in the days of square-rigged ships.

Our ineptitude in getting at the record is largely caused by the artificiality of systems of indexing. When data of any sort are placed in storage, they are filed alphabetically or numerically, and information is found (when it is) by tracing it down from subclass to subclass. It can be in only one place, unless duplicates are used; one has to have rules as to which path will locate it, and the rules are cumbersome. Having found one item, moreover, one has to emerge from the system and reenter on a new path.

The human mind does not work that way. It operates by association. With one item in its grasp, it snaps instantly to the next that is suggested by the association of thoughts, in accordance with some intricate web of trails carried by the cells of the brain. It has other characteristics, of course; trails that are not frequently followed are prone to fade, items are not fully permanent, memory is transitory. Yet the speed of action, the intricacy of trails, the detail of mental pictures, is awe-inspiring beyond all else in nature.

Man cannot hope fully to duplicate this mental process artificially, but he certainly ought to be able to learn from it. In minor ways he may even improve it, for his records have relative permanency. The first idea, however, to be drawn from the analogy concerns selection. Selection by association, rather than indexing, may yet be mechanized. One cannot hope thus to equal the speed and flexibility with which the mind follows an associative trail, but it should be possible to beat the mind decisively in regard to the permanence and clarity of the items resurrected from storage.

Bush was not among the vanishingly small number of people who were working in the nascent field of digital computing in 1945. His “memex,” the invention he proposed to let an individual free-associate all of the information in her personal library, was more steampunk than cyberpunk, all whirring gears, snickering levers, and whooshing microfilm strips. But really, those things are just details; he got all of the important stuff right. I want to quote some more from “As We May Think,” and somewhat at length at that, because… well, because its vision of the future is just that important. This is how the memex should work:

When the user is building a trail, he names it, inserts the name in his code book, and taps it out on his keyboard. Before him are the two items to be joined, projected onto adjacent viewing positions. At the bottom of each there are a number of blank code spaces, and a pointer is set to indicate one of these on each item. The user taps a single key, and the items are permanently joined. In each code space appears the code word. Out of view, but also in the code space, is inserted a set of dots for photocell viewing; and on each item these dots by their positions designate the index number of the other item.

Thereafter, at any time, when one of these items is in view, the other can be instantly recalled merely by tapping a button below the corresponding code space. Moreover, when numerous items have been thus joined together to form a trail, they can be reviewed in turn, rapidly or slowly, by deflecting a lever like that used for turning the pages of a book. It is exactly as though the physical items had been gathered together from widely separated sources and bound together to form a new book. It is more than this, for any item can be joined into numerous trails.

The owner of the memex, let us say, is interested in the origin and properties of the bow and arrow. Specifically he is studying why the short Turkish bow was apparently superior to the English long bow in the skirmishes of the Crusades. He has dozens of possibly pertinent books and articles in his memex. First he runs through an encyclopedia, finds an interesting but sketchy article, leaves it projected. Next, in a history, he finds another pertinent item, and ties the two together. Thus he goes, building a trail of many items. Occasionally he inserts a comment of his own, either linking it into the main trail or joining it by a side trail to a particular item. When it becomes evident that the elastic properties of available materials had a great deal to do with the bow, he branches off on a side trail which takes him through textbooks on elasticity and tables of physical constants. He inserts a page of longhand analysis of his own. Thus he builds a trail of his interest through the maze of materials available to him.

And his trails do not fade. Several years later, his talk with a friend turns to the queer ways in which a people resist innovations, even of vital interest. He has an example, in the fact that the outraged Europeans still failed to adopt the Turkish bow. In fact he has a trail on it. A touch brings up the code book. Tapping a few keys projects the head of the trail. A lever runs through it at will, stopping at interesting items, going off on side excursions. It is an interesting trail, pertinent to the discussion. So he sets a reproducer in action, photographs the whole trail out, and passes it to his friend for insertion in his own memex, there to be linked into the more general trail.

Wholly new forms of encyclopedias will appear, ready-made with a mesh of associative trails running through them, ready to be dropped into the memex and there amplified. The lawyer has at his touch the associated opinions and decisions of his whole experience, and of the experience of friends and authorities. The patent attorney has on call the millions of issued patents, with familiar trails to every point of his client’s interest. The physician, puzzled by a patient’s reactions, strikes the trail established in studying an earlier similar case, and runs rapidly through analogous case histories, with side references to the classics for the pertinent anatomy and histology. The chemist, struggling with the synthesis of an organic compound, has all the chemical literature before him in his laboratory, with trails following the analogies of compounds, and side trails to their physical and chemical behavior.

The historian, with a vast chronological account of a people, parallels it with a skip trail which stops only on the salient items, and can follow at any time contemporary trails which lead him all over civilization at a particular epoch. There is a new profession of trail blazers, those who find delight in the task of establishing useful trails through the enormous mass of the common record. The inheritance from the master becomes, not only his additions to the world’s record, but for his disciples the entire scaffolding by which they were erected.
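
For readers who think more easily in code than in microfilm, the trail mechanism Bush describes above can be sketched in a few lines of Python. This is only a toy model built on my own assumptions, not anything Bush specified: the class names `Item` and `Memex`, the method names `join` and `replay`, and the sample titles are all hypothetical. It tries to capture three properties from the passage: two items are joined under a named trail, a trail can be run through in order, and any one item can be joined into numerous trails.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # items are compared by identity, like physical documents
class Item:
    """One document in the memex (a book page, an article, a longhand note)."""
    title: str
    # Names of every trail this item has been joined into.
    trails: set[str] = field(default_factory=set)


class Memex:
    """Toy model of associative trails (all names here are hypothetical)."""

    def __init__(self) -> None:
        # The "code book": trail name -> ordered list of items on that trail.
        self.code_book: dict[str, list[Item]] = {}

    def join(self, trail: str, first: Item, second: Item) -> None:
        """Tie two items together under a named trail."""
        items = self.code_book.setdefault(trail, [])
        for item in (first, second):
            if item not in items:
                items.append(item)
            item.trails.add(trail)

    def replay(self, trail: str) -> list[str]:
        """Run through a trail in order, as with the memex's lever."""
        return [item.title for item in self.code_book.get(trail, [])]


memex = Memex()
encyclopedia = Item("Encyclopedia article on the bow and arrow")
history = Item("History of the Crusades")
elasticity = Item("Textbook on elasticity")

# Build the main trail, then a second trail that reuses two of its items.
memex.join("turkish-bow", encyclopedia, history)
memex.join("turkish-bow", history, elasticity)
memex.join("materials", elasticity, encyclopedia)

print(memex.replay("turkish-bow"))
print(sorted(elasticity.trails))
```

The trail is stored separately from the items themselves, which is what lets the same item sit on many trails at once without being duplicated. That is the contrast Bush draws with a filing system in which a record "can be in only one place."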

There is no record of what all those millions of Atlantic Monthly and Life readers made of Bush’s ideas in 1945 — or for that matter if they made anything of them at all. In the decades that followed, however, the article became a touchstone of the burgeoning semi-underground world of creative computing. Among its discoverers was Ted Nelson, who is, depending on whom you talk to, either one of the greatest visionaries in the history of computing or one of the greatest crackpots — or, quite possibly, both. Born in 1937 to a Hollywood director and his actress wife, then raised by his wealthy and indulgent grandparents following the inevitable Hollywood divorce, Nelson would lead a life largely defined by, as Gary Wolf put it in his classic profile for Wired magazine, his “aversion to finishing.” As in, finishing anything at all, or just the concept of finishing in the abstract. Well into middle age, he would be diagnosed with attention-deficit disorder, an alleged malady he came to celebrate as his “hummingbird mind.” This condition perhaps explains why he was so eager to find a way of forging permanent, retraceable associations among all the information floating around inside and outside his brain.

Nelson coined the terms “hypertext” and “hypermedia” at some point during the early 1960s, when he was a graduate student at Harvard. (Typically, he got a score of Incomplete in the course for which he invented them, not to mention an Incomplete on his PhD as a whole.) While they’re widely used all but interchangeably today, in Nelson’s original formulation the former term was reserved for purely textual works, the latter for those incorporating other forms of media, like images and sound. But today we’ll just go with the modern flow, call them all hypertexts, and leave it at that. In his scheme, then, hypertexts were texts capable of being “zipped” together with other hypertexts, memex-like, wherever the reader or writer wished to preserve associations between them. He presented his new buzzwords to the world at a conference of the Association for Computing Machinery in 1965, to little impact. Nelson, possessed of a loudly declamatory style of discourse and all the rabble-rousing fervor of a street-corner anarchist, would never be taken all that seriously by the academic establishment.

Instead, it being the 1960s and all, he went underground, embracing computing’s burgeoning counterculture. His eventual testament, one of the few things he ever did manage to complete — after a fashion, at any rate — was a massive 1200-page tome called Computer Lib/Dream Machines, self-published in 1974, just in time for the heyday of the Altair and the Homebrew Computer Club, whose members embraced Nelson as something of a patron saint. As the name would indicate, Computer Lib/Dream Machines was actually two separate books, bound back to back. Theoretically, Computer Lib was the more grounded volume, full of practical advice about gaining access to and using computers, while Dream Machines was full of the really out-there ideas. In practice, though, they were often hard to distinguish. Indeed, it was hard to even find anything in the books, which were published as mimeographed facsimile copies filled with jotted marginalia and cartoons drafted in Nelson’s shaky hand, with no table of contents or page numbers and no discernible organizing principle beyond the stream of consciousness of Nelson’s hummingbird mind. (I trust that the irony of a book concerned with finding new organizing principles for information itself being such an impenetrable morass is too obvious to be worth belaboring further.) Nelson followed Computer Lib/Dream Machines with 1981’s Literary Machines, a text written in a similar style that dwelt, when it could be bothered, at even greater length on the idea of hypertext.

The most consistently central theme of Nelson’s books, to whatever extent one could be discerned, was an elaboration of the hypertext concept he called Xanadu, after the pleasure palace in Samuel Taylor Coleridge’s poem “Kubla Khan.” The product of an opium-fueled hallucination, the 54-line poem is a mere fragment of a much longer work Coleridge had intended to write. Problem was, in the course of writing down the first part of his waking dream he was interrupted; by the time he returned to his desk he had simply forgotten the rest.

So, Nelson’s Xanadu was intended to preserve information that would otherwise be lost, which goal it would achieve through associative linking on a global scale. Beyond that, it was almost impossible to say precisely what Xanadu was or wasn’t. Certainly it sounds much like the World Wide Web to modern ears, but Nelson insists adamantly that the web is a mere bad implementation of the merest shadow of his full idea. Xanadu has been under allegedly active development since the late 1960s, making it the longest-lived single project in the history of computer programming, and by far history’s most legendary piece of vaporware. As of this writing, the sum total of all those years of work is a set of web pages written in Nelson’s inimitable declamatory style, littered with angry screeds against the World Wide Web, along with some online samples that either don’t work quite right or are simply too paradigm-shattering for my poor mind to grasp.

In my own years on this planet, I’ve come to reserve my greatest respect for people who finish things, a judgment which perhaps makes me less than the ideal critic of Ted Nelson’s work. Nevertheless, even I can recognize that Nelson deserves huge credit for transporting Bush’s ideas to their natural habitat of digital computers, for inventing the term “hypertext,” for defining an approach to links (or “zips”) in a digital space, and, last but far from least, for making the crucial leap from Vannevar Bush’s concept of the single-user memex machine to an interconnected global network of hyperlinks.

But of course ideas, of which both Bush and Nelson had so many, are not finished implementations. During the 1960s, 1970s, and early 1980s, there were various efforts — in addition, that is, to the quixotic effort that was Xanadu — to wrestle at least some of the concepts put forward by these two visionaries into concrete existence. Yet it wouldn’t be until 1987 that a corporation with real financial resources and real commercial savvy would at last place a reasonably complete implementation of hypertext before the public. And it all started with a frustrated programmer looking for a project.

Had he never had anything to do with hypertext, Bill Atkinson’s place in the history of computing would still be assured. Coming to Apple Computer in 1978, when the company was only about eighteen months removed from that famous Cupertino garage, Atkinson was instrumental in convincing Steve Jobs to visit the Xerox Palo Alto Research Center, thereby setting in motion the chain of events that would lead to the Macintosh. A brilliant programmer by anybody’s measure, he eventually wound up on the Lisa team. He wrote the routines to draw pixels onto the Lisa’s screen — routines on which, what with the Lisa being a fundamentally graphical machine whose every display was bitmapped, every other program depended. Jobs was so impressed by Atkinson’s work on what he named LisaGraf that he recruited him to port his routines over to the nascent Macintosh. Atkinson’s routines, now dubbed QuickDraw, would remain at the core of MacOS for the next fifteen years. But Atkinson’s contribution to the Mac went yet further: after QuickDraw, he proceeded to design and program MacPaint, one of the two applications included with the finished machine, and one that’s still justifiably regarded as a little marvel of intuitive user-interface design.

Atkinson’s work on the Mac was so essential to the machine’s success that shortly after its release he became just the fourth person to be named an Apple Fellow — an honor that carried with it, implicitly if not explicitly, a degree of autonomy for the recipient in the choosing of future projects. The first project that Atkinson chose for himself was something he called the Magic Slate, based on a gadget called the Dynabook that had been proposed years before by Xerox PARC alum (and Atkinson’s fellow Apple Fellow) Alan Kay: a small, thin, inexpensive handheld computer controlled via a touch screen. It was, as anyone who has ever seen an iPhone or iPad will attest, a prescient project indeed, but also one that simply wasn’t realizable using mid-1980s computer technology. Having been convinced of this at last by his skeptical managers after some months of flailing, Atkinson wondered if he might not be able to create the next best thing in the form of a sort of software version of the Magic Slate, running on the Macintosh desktop.

In a way, the Magic Slate had always had as much to do with the ideas of Bush and Nelson as it did with those of Kay. Atkinson had envisioned its interface as a network of “pages” which the user navigated among by tapping links therein — a hypertext in its own right. Now he transported the same concept to the Macintosh desktop, whilst making his metaphorical pages into metaphorical stacks of index cards. He called the end result, the product of many months of design and programming, “Wildcard.” Later, when the trademark “Wildcard” proved to be tied up by another company, it turned into “HyperCard” — a much better name anyway in my book.

By the time he had HyperCard in some sort of reasonably usable shape, Atkinson was all but convinced that he would have to either sell the thing to some outside software publisher or start his own company to market it. With Steve Jobs now long gone and with him much of the old Jobsian spirit of changing the world through better computing, Apple was heavily focused on turning the Macintosh into a practical business machine. The new, more sober mood in Cupertino — not to mention Apple’s more buttoned-down public image — would seem to indicate that they were hardly up for another wide-eyed “revolutionary” product. It was Alan Kay, still kicking around Cupertino puttering with this and that, who convinced Atkinson to give CEO John Sculley a chance before he took HyperCard elsewhere. Kay brokered a meeting between Sculley and Atkinson, in which the latter was able to personally demonstrate to the former what he’d been working on all these months. Much to Atkinson’s surprise, Sculley loved HyperCard. Apparently at least some of the old Jobsian fervor was still alive and well after all inside Apple’s executive suite.

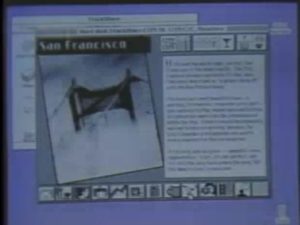

At its most basic, a HyperCard stack to modern eyes resembles nothing so much as a PowerPoint presentation, albeit one which can be navigated non-linearly by tapping links on the slides themselves. Just as in PowerPoint, the HyperCard designer could drag and drop various forms of media onto a card. Taken even at this fairly superficial level, HyperCard was already a full-fledged hypertext-authoring (and hypertext-reading) tool — by no means the first specimen of its kind, but the first with the requisite combination of friendliness, practicality, and attractiveness to make it an appealing environment for the everyday computer user. One of Atkinson’s favorite early demo stacks had many cards with pictures of people wearing hats. If you clicked on a hat, you were sent to another card showing someone else wearing a hat. Ditto for other articles of fashion. It may sound banal, but this really was revolutionary, organization by association in action. Indeed, one might say that HyperCard was Vannevar Bush’s memex, fully realized at last.

But the system showed itself to have much, much more to offer when the author started to dig into HyperTalk, the included scripting language. All sorts of logic, simple or complex, could be accomplished by linking scripts to clicks on the surface of the cards. At this level, HyperCard became an almost magical tool for some types of game development, as we’ll see in future articles. It was also a natural fit for many other applications: information kiosks, interactive tutorials, educational software, expert systems, reference libraries, etc.
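
To make the idea of scripts attached to clicks a little more concrete, here is a rough sketch of the model in Python. I should stress that this is not HyperTalk and makes no attempt to imitate its syntax; the `Stack` class, its methods, and the card names are all hypothetical stand-ins of my own. It shows only the general shape: a stack is a set of named cards, each card carries buttons, and each button runs a script that can be either a plain jump to another card or something with logic in it.

```python
from typing import Callable, Optional


class Stack:
    """Toy model of a HyperCard-style stack (hypothetical, not real HyperTalk)."""

    def __init__(self) -> None:
        # card name -> {button name -> script run when that button is clicked}
        self.cards: dict[str, dict[str, Callable[["Stack"], None]]] = {}
        self.current: Optional[str] = None
        self.visits = 0  # a bit of state that scripts can read and change

    def add_card(self, name: str) -> None:
        self.cards[name] = {}
        if self.current is None:
            self.current = name  # the first card added is the one shown first

    def add_button(self, card: str, button: str,
                   script: Callable[["Stack"], None]) -> None:
        self.cards[card][button] = script

    def go(self, card: str) -> None:
        """Jump to another card: the plain hyperlink case."""
        if card not in self.cards:
            raise KeyError(f"no such card: {card}")
        self.current = card
        self.visits += 1

    def click(self, button: str) -> None:
        """Run whatever script is attached to a button on the current card."""
        self.cards[self.current][button](self)


stack = Stack()
stack.add_card("woman in a red hat")
stack.add_card("man in a top hat")
stack.add_card("thanks for browsing")

# A plain link: clicking the hat jumps to another card showing a hat.
stack.add_button("woman in a red hat", "hat",
                 lambda s: s.go("man in a top hat"))


# A script with a little logic: after enough browsing, end the tour.
def hat_script(s: Stack) -> None:
    if s.visits >= 2:
        s.go("thanks for browsing")
    else:
        s.go("woman in a red hat")


stack.add_button("man in a top hat", "hat", hat_script)

for _ in range(4):
    stack.click("hat")
print(stack.current)
```

The first button is nothing more than a link, in the spirit of the hat demo. The second shows why a scripting layer matters: once a click can consult and alter state, the same card-and-button structure can hold a tutorial, a kiosk, or a game.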

John Sculley himself premiered HyperCard at the August 1987 MacWorld show. Showing unusual largess in his determination to get HyperCard into the hands of as many people as possible as quickly as possible, he announced that henceforward all new Macs would ship with a free copy of the system, while existing owners could buy copies for their machines for just $49. He called HyperCard the most important product Apple had released during his tenure there. Considering that Sculley had also been present for the launch of the original Macintosh, this was certainly saying something. And yet he wasn’t clearly in the wrong either. As important as the Macintosh, the realization in practical commercial form of the computer-interface paradigms pioneered at Xerox PARC during the 1970s, has been to our digital lives of today, the concept of associative indexing — hyperlinking — has proved at least as significant. But then, the two do go together like strawberries and cream, the point-and-click paradigm providing the perfect way to intuitively navigate through a labyrinth of hyperlinks. It was no coincidence that an enjoyable implementation of hypertext appeared first on the Macintosh; the latter almost seemed a prerequisite for the former.

The full import of the concept of hypertext was far from easy to get across in advertising copy, but Apple gave it a surprisingly serious go, paying due homage to Vannevar Bush in the process.

In the wake of that MacWorld presentation, a towering tide of HyperCard hype rolled from one side of the computer industry to the other, out into the mainstream media, and then back again, over and over. Hypertext’s time had finally come. In 1985, it was an esoteric fringe concept known only to academics and a handful of hackers, being treated at real length and depth in print only in Ted Nelson’s own sprawling, well-nigh impenetrable tomes. Four years later, every bookstore in the land sported a shelf positively groaning with trendy paperbacks advertising hypertext this and hypertext that. By then the curmudgeons had also begun to come out in force, always a sure sign that an idea has truly reached critical mass. Presentations showed up in conference catalogs with snarky titles like “Hypertext: Will It Cook Me Breakfast Too?”

The curmudgeons had plenty of rabid enthusiasm to push back against. HyperCard, even more so than the Macintosh itself, had a way of turning the most sober-minded computing veterans into starry-eyed fanatics. Jan Lewis, a longtime business-computing analyst, declared that “HyperCard is going to revolutionize the way computing is done, and possibly the way human thought is done.” Throwing caution to the wind, she abandoned her post at InfoWorld to found HyperAge, the first magazine dedicated to the revolution. “There’s a tremendous demand,” she said. “If you look at the online services, the bulletin boards, the various ad hoc meetings, user groups — there is literally a HyperCulture developing, almost a cult.” To judge from her own impassioned statements, she should know. She recruited Ted Nelson himself — one of the HyperCard holy trinity of Bush, Nelson, and Atkinson — to write a monthly column.

HyperCard effectively amounted to an entirely new computing platform that just happened to run atop the older platform that was the Macintosh. As Lewis noted, user-created HyperCard stacks — this new platform’s word for “programs” or “software” — were soon being traded all over the telecommunications networks. The first commercial publisher to jump into the HyperCard game was, somewhat surprisingly, Mediagenic. (Mediagenic was known as Activision until mid-1988; to avoid confusion, I just stick with the name “Mediagenic” in this article.) Bruce Davis, Mediagenic’s CEO, has hardly gone down in history as a paragon of progressive thought in the realms of computer games and software in general, but he defied his modern reputation in this one area at least by pushing quickly and aggressively into “stackware.” One of the first examples of same that Mediagenic published was Focal Point, a collection of business and personal-productivity tools written by one Danny Goodman, who was soon to publish a massive bible called The Complete HyperCard Handbook, thus securing for himself the mantle of the new ecosystem’s go-to programming guru. Focal Point was a fine demonstration that just about any sort of software could be created by the sufficiently motivated HyperCard programmer. But it was another early Mediagenic release, City to City, that was more indicative of the system’s real potential. It was a travel guide to most major American cities — an effortlessly browsable and searchable guide to “the best food, lodgings, and other necessities” to be found in each of the metropolises in its database.

Other publishers — large, small, and just starting out — followed Mediagenic’s lead, releasing a bevy of fascinating products. The people behind The Whole Earth Catalog — themselves the inspiration for Ted Nelson’s efforts in self-publication — converted their current edition into a HyperCard stack filling a staggering 80 floppy disks. A tiny company called Voyager combined HyperCard with a laser-disc player — a very common combination among ambitious early HyperCard developers — to offer an interactive version of the National Gallery of Art which could be explored using such associative search terms as “Impressionist landscapes with boats.” Culture 1.0 let you explore its namesake through “3700 years of Western history — over 200 graphics, 2000 hypertext links, and 90 essays covering topics from the Black Plague to Impressionism,” all on just 7 floppy disks. Mission: The Moon, from the newly launched interactive arm of ABC News, gathered together details of every single Mercury, Gemini, and Apollo mission, including videos of each mission hosted on a companion laser disc. A professor of music converted his entire Music Appreciation 101 course into a stack. The American Heritage Dictionary appeared as stackware. And lots of what we might call “middlestackware” appeared to help budding programmers with their own creations: HyperComposer for writing music in HyperCard, Take One for adding animations to cards.

Just two factors were missing from HyperCard to allow hypertext to reach its full potential. One was a storage medium capable of holding lots of data, to allow for truly rich multimedia experiences, combining the lavish amounts of video, still pictures, music, sound, and of course text that the system clearly cried out for. Thankfully, that problem was about to be remedied via a new technology which we’ll be examining in my very next article.

The other problem was a little thornier, and would take a little longer to solve. For all its wonders, a HyperCard stack was still confined to the single Macintosh on which it ran; there was no provision for linking between stacks running on entirely separate computers. In other words, one might think of a HyperCard stack as equivalent to a single web site running locally off a single computer’s hard drive, without the ability to field external links alongside its internal links. Thus the really key component of Ted Nelson’s Xanadu dream, that of a networked hypertext environment potentially spanning the entire globe, remained unrealized. In 1990, Bill Nisen, the developer of a hypertext system called Guide that slightly predated HyperCard but wasn’t as practical or usable, stated the problem thus:

The one thing that is precluding the wide acceptance of hypertext and hypermedia is adequate broadcast mechanisms. We need to find ways in which we can broadcast the results of hypermedia authoring. We’re looking to in the future the ubiquitous availability of local-area networks and low-cost digital-transmission facilities. Once we can put the results of this authoring into the hands of more users, we’re going to see this industry really explode.

Already at the time Nisen made that statement, a British researcher named Tim Berners-Lee had started to experiment with something he called the Hypertext Transfer Protocol. The first real web site, the beginning of the World Wide Web, would go online in 1991. It would take a few more years even from that point, but a shared hypertextual space of a scope and scale the likes of which few could imagine was on the way. The world already had its memex in the form of HyperCard. Now — and although this equivalency would scandalize Ted Nelson — it was about to get its Xanadu.

Associative indexing permeates our lives so thoroughly today that, as with so many truly fundamental paradigm shifts, the scope of the change it has wrought can be difficult to fully appreciate. A century ago, education was still largely an exercise in retention: names, dates, Latin verb cognates. Today’s educational institutions — at least the more enlightened ones — recognize that it’s more important to teach their pupils how to think than it is to fill their heads with facts; facts, after all, are now cheap and easy to acquire when you need them. That such a revolution in the way we think about thought happened in just a couple of decades strikes me as incredible. That I happened to be present to witness it strikes me as amazing.

What I’ve witnessed has been a revolution in humanity’s relationship to information itself that’s every bit as significant as any political revolution in history. Some Singularity proponents will tell you that it marks the first step on the road to a vast worldwide consciousness. But even if you choose not to go that far, the ideas of Vannevar Bush and Ted Nelson are still with you every time you bring up Google. We live in a world in which much of the sum total of human knowledge is available over an electronic connection found in almost every modern home. This is wondrous. Yet what’s still more wondrous is the way that we can find almost any obscure fact, passage, opinion, or idea we like from within that mass, thanks to selection by association. Mama, we’re all cyborgs now.

(Sources: the books Hackers: Heroes of the Computer Revolution and Insanely Great: The Life and Times of the Macintosh, the Computer That Changed Everything by Steven Levy; Computer Lib/Dream Machines and Literary Machines by Ted Nelson; From Memex to Hypertext: Vannevar Bush and the Mind’s Machine, edited by James M. Nyce and Paul Kahn; The New Media Reader, edited by Noah Wardrip-Fruin and Nick Montfort; Multimedia and Hypertext: The Internet and Beyond by Jakob Nielsen; The Making of the Atomic Bomb by Richard Rhodes. Also the June 1995 Wired magazine profile of Ted Nelson; Andy Hertzfeld’s website Folklore; and the Computer Chronicles television episodes entitled “HyperCard,” “MacWorld Special 1988,” “HyperCard Update,” and “Hypertext.”)

Footnotes

[1] Mediagenic was known as Activision until mid-1988. To avoid confusion, I just stick with the name “Mediagenic” in this article.