I have this idea of a living world, which I have never achieved. It’s based upon this picture in my head, and I can see what it’s like to play that game. Every time I do it, then it maybe gets closer to that ideal. But it’s an ambitious thing.

— Peter Molyneux

One day as a young boy, Peter Molyneux stumbled upon an ant hill. He promptly did what young boys do in such situations: he poked it with a stick, watching the inhabitants scramble around as destruction rained down from above. But then, Molyneux did something that set him apart from most young boys. Feeling curious and maybe a little guilty, he gave the ants some sugar for energy and watched quietly as they methodically undid the damage to their home. Just like that, he woke up to the idea of little living worlds with lots of little living inhabitants, and to the idea that he himself, the outsider, could affect the lives of those inhabitants. The blueprint had been laid for one of the most prominent and influential careers in the history of game design. “I have always found this an interesting mechanic, the idea that you influence the game as opposed to controlling the game,” he would say years later. “Also, the idea that the game can continue without you.” When Molyneux finally grew bored and walked away from the ant hill on that summer day in his childhood, it presumably did just that, the acts of God that had nearly destroyed it quickly forgotten. Earth and ants abide.

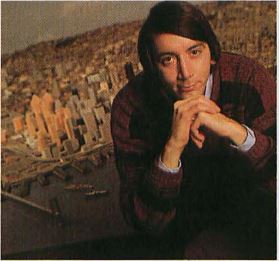

Peter Molyneux was born in the Surrey town of Guildford (also hometown of, read into it what you will, Ford Prefect) in 1959, the son of an oil-company executive and a toy-shop proprietor. To hear him tell it, he was qualified for a career in computer programming largely by virtue of being so hopeless at everything else. Being dyslexic, he found reading and writing extremely difficult, a handicap that played havoc with his marks at Bearwood College, the boarding school in the English county of Berkshire to which his family sent him for most of his teenage years. Meanwhile his less-than-imposing physique boded ill for a career in the military or manual labor. Thankfully, near the end of his time at Bearwood the mathematics department acquired a Commodore PET, while the student union almost simultaneously installed a Space Invaders machine. Seeing a correspondence between these two pieces of technology that eluded his fellow students, Molyneux set about trying to program his own Space Invaders on the PET, using crude character glyphs to represent the graphics that the PET, being a text-only machine, couldn’t actually draw. No matter. A programmer had been born.

These events, followed shortly by Molyneux’s departure from Bearwood to face the daunting prospect of the adult world, were happening at the tail end of the 1970s. Like so many of the people I’ve profiled on this blog, Molyneux was thus fortunate enough to be born not only into a place and circumstances that would permit a career in games, but at seemingly the perfect instant to get in on the ground floor as well. But, surprisingly for a fellow who would come to wear his huge passion for the medium on his sleeve (often almost as much to the detriment as to the benefit of his games and his professional life), Molyneux took a meandering path, needing fully another decade to rise to prominence in the field. Or, to put it less kindly: he failed, repeatedly and comprehensively, at every venture he tried for most of the 1980s before he finally found the one that clicked.

Perhaps inspired by his mother’s toy shop, his original dream was to be not so much a game designer as a computer entrepreneur. After earning a degree in computer science from Southampton University, he found himself a job working days as a systems analyst for a big company. By night, he formed a very small company called Vulcan in his hometown of Guildford to implement a novel scheme for selling blank disks. He wrote several simple programs: a music creator, some mathematics drills, a business simulator, a spelling quiz. (The last, having been created by a dyslexic and terrible speller in general, was a bit of a disaster.) For every ten blank disks you bought for £10, you would get one of the programs thrown in for free. After placing his tiny advertisement in a single magazine, Molyneux was so confident of the results that he told his local post office to prepare for a deluge of mail, and bought a bigger mailbox for his house to hold it all. He got five orders in the first ten days and fewer than fifty in the scheme’s total lifespan, along with about fifty more inquiries from people who had no interest in the blank disks but just wanted to buy his software.

Taking their interest to heart, Molyneux embarked on Scheme #2. He improved the music creator and the business simulator and tried to sell them as products in their own right. Even years later he would remain proud of the latter in particular — his first original game, which he named Entrepreneur: “I really put loads of features into it. You ran a business and you could produce anything you liked. You had to do things like keep the manufacturing line going, set the price for your product, decide what advertising you wanted, and these random events would happen.” With contests all the rage in British games at the time, he offered £100 to the first person to make £1 million in Entrepreneur. The prize went unclaimed; the game sold exactly two copies despite being released near the zenith of the early-1980s British mania for home computers. “Everybody around me was making an absolute fortune,” Molyneux remembers. “You had to be a complete imbecile in those days not to make a fortune. Yet here I was with Entrepreneur and Composer, making nothing.” He wasn’t, it appeared, very good at playing his own game of entrepreneurship; his own £1 million remained far out of reach. Nevertheless, he moved on to the next scheme.

Scheme #3 was to crack the business and personal-productivity markets via a new venture called Taurus, initiated by Molyneux and his friend Les Edgar, who were later joined by one Kevin Donkin. Molyneux having studied accounting at one time in preparation for a possible career in the field (“the figures would look so messy that no one would ever employ me”), it was decided that Taurus would initially specialize in financial software with exciting names like Taurus Accounts, Taurus Invoicing, and Taurus Stock Control. Those products, like all the others Molyneux had created, went nowhere. But now came a bizarre story of mistaken identity that… well, it wouldn’t make Molyneux a prominent game designer just yet, but it would move him further down the road to that destination.

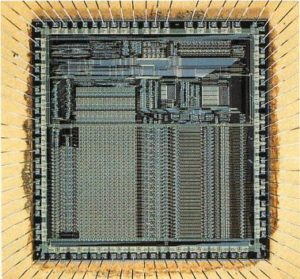

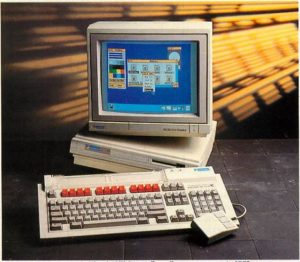

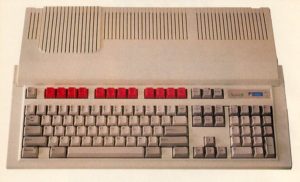

Commodore was about to launch the Amiga in Britain, and, this being early on when they still saw it as potential competition for the IBMs of the world, was looking to convince makers of productivity software to write for the machine. They called up insignificant little Taurus of all people to request a meeting to discuss porting the “new software” the latter had in the works to the Amiga. Molyneux and Edgar assumed Commodore must have somehow gotten wind of a database program they were working on. In a state of no small excitement, they showed up at Commodore UK’s headquarters on the big day and met a representative. Molyneux:

He kept talking about “the product,” and I thought they were talking about the database. At the end of the meeting, they say, “We’re really looking forward to getting your network running on the Amiga.” And it suddenly dawned on me that this guy didn’t know who we were. Now, we were called Taurus, as in the star sign. He thought we were Torus, a company that produced networking systems. I suddenly had this crisis of conscience. I thought, “If this guy finds out, there go my free computers down the drain.” So I just shook his hand and ran out of that office.

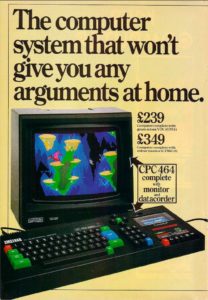

An appropriately businesslike advertisement for Taurus’s database manager gives no hint of what actually lies in the company’s future…

By the time Commodore figured out they had made a terrible mistake, Taurus had already been signed as official Amiga developers and given five free Amigas. They parlayed those things into a two-year career as makers of somewhat higher-profile but still less than financially successful productivity software for the Amiga. After the database, which they named Acquisition and declared “the most complete database system conceived on any microcomputer” — Peter Molyneux’s habit of over-promising, which gamers would come to know all too well, was already in evidence — they started on a computer-aided-design package called X-CAD Designer. Selling in the United States for the optimistic prices of $300 and $500 respectively, both programs got lukewarm reviews; they were judged powerful but kind of incomprehensible to actually use. But even had the reviews been better, high-priced productivity software was always going to be a hard sell on the Amiga. There were just three places to really make money in Amiga software: in personal-creativity software like paint programs, in video-production tools, and, most of all, in games. In spite of all of Commodore’s earnest efforts to the contrary, the Amiga had by now become known first and foremost as the world’s greatest gaming computer.

Molyneux and his colleagues therefore began to wind down their efforts in productivity software in favor of a new identity. They renamed their company Bullfrog after a ceramic figurine they had lying around in the “squalor” of what Molyneux describes as their “absolutely shite” office in a Guildford pensioner’s attic. Under the new name, they planned to specialize in games: Scheme #4 for Peter Molyneux. “We had a simple choice of hitting our head against a brick wall with business software,” he remembers, “or doing what I really wanted to do with my life anyway, which was write games.” Yet even after they had made Bullfrog a game developer, their first actual product was not a game but a simple drum sequencer for the Amiga called A-Drum. Hobgoblins and little minds and all the rest. When A-Drum duly flopped, they finally got around to games.

A friend of Molyneux’s had written a budget-priced action-adventure for the Commodore 64 called Druid II: Enlightenment, and was looking for someone to do an Amiga conversion. Bullfrog jumped at the chance, even though Molyneux, who would always persist in describing himself as a “rubbish” programmer, had very little idea how to program an action game. When asked by Enlightenment‘s publisher Firebird whether he could do the game in one frame — i.e., whether he could update everything onscreen within a single pass of the electron gun painting the screen to maintain the impression of smooth, fluid movement — an overeager Molyneux replied, “Are you kidding me? I can do it in ten frames!” It wasn’t quite the answer Firebird was looking for. But in spite of it all, Bullfrog somehow got the job, producing what Molyneux describes as a “technically rather poor” port of what had been a rather middling game in the first place. (Molyneux’s technique for getting everything drawn in one frame was to simply keep shrinking the size of the display until even his inefficient routines could do the job.) And then, as usual for everything Molyneux touched, it flopped. But Bullfrog did get two important things out of the project: they learned much about game programming, and they recruited as artist for the project one Glenn Corpes, who was not only a talented pixel pusher but also a talented programmer and fount of ideas almost the equal of Molyneux.

Despite the promising addition of Corpes, the first original game conjured up by the slowly expanding Bullfrog fared little better than Enlightenment. Corpes and Kevin Donkin turned out a very of-its-time top-down shoot-em-up called Fusion, which Electronic Arts agreed to release. Dismissed as “a mixture of old ideas presented in a very unexciting manner” by reviewers, Fusion was even less impressive technically than had been the Enlightenment port, being plagued by clashing colors and jittery scrolling — not at all the sort of thing to impress the notoriously audiovisually-obsessed Amiga market. Thus Fusion flopped as well, keeping Molyneux’s long record of futility intact. But then, unexpectedly from this group who’d shown so little sign of ever rising above mediocrity, came genius.

Yet to describe Populous as a stroke of genius would be misleading. It was rather a game that grew slowly into its genius over a considerable period of time, a game that Molyneux himself considers more an exercise in evolution than conscious design. “It wasn’t an idea that suddenly went ‘Bang!’” he says. “It was an idea that grew and grew.” And its genesis had as much to do with Glenn Corpes as it did with Peter Molyneux.

It all began when Corpes started showing off a routine he had written which let him build isometric landscapes out of three-dimensional blocks, like a virtual Lego set. You could move the viewpoint about the landscape, raising and lowering the land by left-clicking to add new blocks and right-clicking to remove them. Molyneux was immediately sure there was a game in there somewhere. His childhood memory of the ant hill leaping to mind, he said, “Let’s have a thousand people running around on it.”

Populous thus began with those little people in lieu of ants, wandering independently over Corpes’s isometric landscapes in real time. When they found a patch they liked, they would settle down, building little huts. Since, this being a computer game, the player would obviously need something to do as well, Molyneux started adding ways for you, as a sort of God on high, to influence the people’s behavior in indirect ways. He added something he called a “Papal Magnet,” a huge ankh you could place in the world to draw your people toward a given spot. But a problem arose if the way to the ankh happened to be blocked by, say, a lake. Molyneux claims he added Populous‘s most basic mechanic, the thing you spend by far the most time doing when playing the game, as a response to his “incompetence” as a coder and resulting inability to write a proper path-finding algorithm: when your people get stuck somewhere, you can, subject to your mana reserves (even gods have limits), raise or lower the land to help them out. With that innovation, Populous from the player’s perspective became largely an exercise in terraforming, creating smooth, even landscapes on which your people can build their huts, villages, and eventually castles. As your people become fruitful and multiply, their prayers fuel your mana reserves.
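The article doesn't say how Populous represents or edits its terrain internally, so what follows is only a sketch under stated assumptions. The landscape is modeled as a grid of corner heights (a common way to build isometric block terrain). A click raises or lowers one corner by a level, and any neighboring corner that would end up more than one level away is dragged along so slopes stay gentle. The function name `reshape_land`, the eight-neighbor rule, the height cap, and the idea of charging mana per adjusted corner are all hypothetical, not Bullfrog's code.

```python
from collections import deque

# Hypothetical terrain model: a rectangular grid of corner heights, 0..max_height.
Grid = list[list[int]]


def reshape_land(heights: Grid, row: int, col: int, delta: int,
                 max_height: int = 8) -> int:
    """Raise (delta=+1) or lower (delta=-1) one corner by a single level,
    then drag neighboring corners along so that adjacent corners (diagonals
    included) never differ by more than one level, assuming the grid
    started out that way.

    Returns how many corners were adjusted (0 if the click had no effect);
    a game could charge that number as a mana cost."""
    if delta not in (1, -1):
        raise ValueError("delta must be +1 or -1")
    target = heights[row][col] + delta
    if not 0 <= target <= max_height:
        return 0  # already at the top or bottom: nothing happens
    heights[row][col] = target
    adjustments = 1
    rows, cols = len(heights), len(heights[0])
    queue = deque([(row, col)])
    while queue:  # breadth-first spread outward from the clicked corner
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr, dc) == (0, 0) or not (0 <= nr < rows and 0 <= nc < cols):
                    continue
                # Step too steep in the direction of the change: pull the
                # neighbor to within one level and keep spreading from it.
                if (heights[r][c] - heights[nr][nc]) * delta > 1:
                    heights[nr][nc] = heights[r][c] - delta
                    adjustments += 1
                    queue.append((nr, nc))
    return adjustments


if __name__ == "__main__":
    world = [[0] * 5 for _ in range(5)]
    print(reshape_land(world, 2, 2, +1))  # prints 1: a lone corner rises
    print(reshape_land(world, 2, 2, +1))  # prints 9: its eight neighbors follow
```

Spreading outward with a queue keeps each click local: only corners that actually break the one-level rule are touched, so a single click on flat ground costs one adjustment, while raising a peak further drags up a widening ring around it.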

Next, Molyneux added warfare to the picture. Now you would be erecting mountains and lakes to protect your people from their enemies, who start out walking about independently on the other side of the world. The ultimate goal of the game, of course, is to use your people to wipe out your enemy’s people before they do the same to you; this is a very Old Testament sort of religious experience. To aid in that goal, Molyneux gradually added lots of other godly powers to your arsenal, more impressive than the mere raising and lowering of land if also far more expensive in terms of precious mana: flash floods, earthquakes, volcanic eruptions, etc. You know, all your standard acts of God, as found in the Bible and insurance claims.

Lego Populous. Bullfrog had so much fun with this implementation of the idea that they seriously discussed trying to turn it into a commercial board game.

Parts of Populous were prototyped on the tabletop. Bullfrog used Lego bricks to represent the landscapes, a handy way of implementing the raising-and-lowering mechanic in a physical space. They went so far as to discuss a license with Lego, only to be told that Lego didn’t support “violent games.” Molyneux admits that the board game, while playable, was very different from the computerized Populous, playing out as a slow-moving, chess-like exercise in strategy. The computer Populous, by contrast, can get as frantic as any action game, especially in the final phase when all the early- and mid-game maneuvering and feinting comes down to the inevitable final genocidal struggle between Good and Evil.

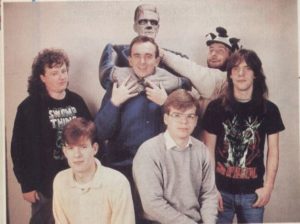

Bullfrog. From left: Glenn Corpes (artist and programmer), Shaun Cooper (artist and tester), Peter Molyneux (designer and programmer), Kevin Donkin (designer and programmer), Les Edgar (office manager), Andy Jones (artist and tester).

Ultimately far more important to the finished product than Bullfrog’s Lego Populous were the countless matches Molyneux played on the computer against Glenn Corpes. Apart from all of its other innovations in helping to invent the god-game and real-time-strategy genres, Populous was also a pioneering effort in online gaming. Multi-player games (the only way to play Populous for many months) took place between two people seated at two separate Amigas, connected together via modem or, if the players sat in the same room as Molyneux and Corpes did, via a cable. Vanishingly few other designers were working in this space at the time, for understandable reasons: even leaving aside the fact that the majority of computer owners didn’t own modems, running a multi-player game in real time over a connection as slow as 1200 baud was hardly a programming challenge for the faint-hearted. The fact that it works at all in Populous rather puts the lie to Molyneux’s self-deprecating description of himself as a “rubbish” coder.
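To put that connection speed in perspective, here is some back-of-the-envelope arithmetic. It assumes that the link carried 1200 bits per second, that each byte was framed in the common serial fashion of one start bit, eight data bits, and one stop bit, and that the game exchanged data in step with the 50 Hz display of a PAL Amiga. The source confirms none of these details about Populous itself.

$$
\frac{1200\ \text{bits/s}}{10\ \text{bits/byte}} = 120\ \text{bytes/s},
\qquad
\frac{120\ \text{bytes/s}}{50\ \text{frames/s}} = 2.4\ \text{bytes per frame}
$$

On those assumptions, each machine would have had only two or three bytes per frame in which to tell the other what its player had just done, which goes some way toward explaining why so few designers attempted it.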

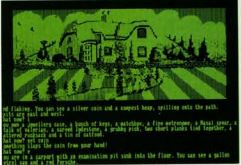

You draw your people toward different parts of the map by placing the Papal Magnet. The first one to touch it becomes the leader. There are very few words in the game, which only made it that much easier for Electronic Arts to localize and popularize across Europe. Everything is instead done using the initially incomprehensible suite of icons near the bottom of the screen. Populous does become intuitive in time, but it’s not without a learning curve.

Development of Populous fell into a comfortable pattern. Molyneux and Corpes would play together for several hours every evening, then nip off to the pub to talk about their experiences. Next day, they’d tweak the game, then they’d go at it again. It’s here that we come to the beating heart of Molyneux’s description of Populous as a game evolved rather than designed. Almost everything in the finished game beyond the basic concept was added in response to Molyneux and Corpes’s daily wars. For instance, Molyneux initially added knights, super-powered individuals who can rampage through enemy territory and cause a great deal of havoc in a very short period of time, to prevent their games from devolving into endless stalemates. “A game could get to the point where both players had massive populations,” he says, “and there was just no way to win.” With knights, the stronger player “could go and massacre the other side and end the game at a stroke.”

A constant theme of all the tweaking was to make a more viscerally exciting game that played more quickly. For commercial as well as artistic reasons — Amiga owners weren’t particularly noted for their patience with slow-paced, cerebral games — this was considered a priority. Over the course of development, the length of the typical game Molyneux played with Corpes shrank from several hours to well under one.

Even tweaked to play quickly and violently, Populous was quite a departure from the tried-and-true Amiga fare of shoot-em-ups, platformers, and action-adventures. The unenviable task of trying to sell the thing to a publisher was given to Les Edgar. After visiting about a dozen publishers, he convinced Electronic Arts to take a chance on it. Bullfrog promised EA a finished Populous in time for Christmas 1988. By the time that deadline arrived, however, it was still an online multiplayer-only game, a prospect EA knew to be commercially untenable. Molyneux and his colleagues thus spent the next few months creating Populous‘s single-player “Conquest Mode.”

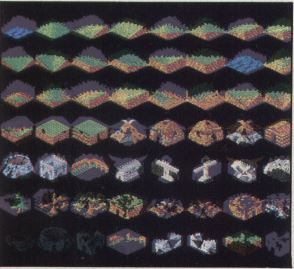

In addition to the green and pleasant land of the early levels, there are also worlds of snow and ice, desert worlds, and even worlds of fire and lava to conquer.

Perilously close to being an afterthought to the multi-player experience though it was, Conquest Mode would be the side of the game that the vast majority of its eventual players would come to know best if not exclusively. Rather than design a bunch of scenarios by hand, Bullfrog wrote an algorithm to procedurally generate 500 different “worlds” for play against a computer opponent whose artificial intelligence also had to be created from scratch during this period. This method of content creation, used most famously by Ian Bell and David Braben in Elite, was something of a specialty and signpost of British game designers, who, plagued by hardware limitations far more stringent than those facing their counterparts in the United States, often used it as a way to minimize the space their games consumed in memory and on disk. Most recently, Geoff Crammond’s hit game The Sentinel, published by Firebird, had used a similar scheme. Glenn Corpes believes it may have been an EA executive named Joss Ellis who first suggested it to Bullfrog.

Populous‘s implementation is fairly typical of the form. Each of the 500 worlds except the first is protected by a password that is, like everything else, itself procedurally generated. When you win at a given level, you’re given the password to a higher, harder level; whether and how many levels you get to skip is determined by how resounding a victory you’ve just managed. It’s a clever scheme, packing a hell of a lot of potential gameplay onto a single floppy disk and even making an effort to avoid boring the good player — and all without forcing Bullfrog to deal with the complications of actually storing any state whatsoever onto disk.
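The article doesn't describe Bullfrog's actual password algorithm. It says only that the passwords were procedurally generated and that the size of the jump depended on the margin of victory. The Python below is a hypothetical illustration of how such a scheme can work without saving anything to disk. The level number is scrambled with an invertible arithmetic step and spelled out as three made-up syllables, so a typed password can be decoded straight back into a level number. The syllable table, the constants, and the victory thresholds in `next_level` are all invented for the example.

```python
from typing import Optional

# Hypothetical sketch only: none of these values come from Populous.
SYLLABLES = [
    "BUR", "CAL", "DOR", "ALP", "HAM", "JOS", "KIL", "MIN",
    "OUT", "PEL", "QAZ", "RIN", "SHI", "TIM", "VER", "WEA",
]  # 16 syllables, so three of them spell 16**3 = 4096 distinct codes
NUM_WORLDS = 500
CODE_SPACE = len(SYLLABLES) ** 3  # 4096
MULTIPLIER = 1777                 # odd, hence invertible modulo 4096
OFFSET = 2909


def password_for(level: int) -> str:
    """Scramble the level number into a code, then spell the code as syllables."""
    if not 0 <= level < NUM_WORLDS:
        raise ValueError("no such world")
    code = (level * MULTIPLIER + OFFSET) % CODE_SPACE
    parts = []
    for _ in range(3):  # three base-16 digits, least significant first
        code, digit = divmod(code, len(SYLLABLES))
        parts.append(SYLLABLES[digit])
    return "".join(parts)


def level_for(password: str) -> Optional[int]:
    """Invert password_for; returns None for anything that isn't a valid world."""
    parts = [password[i:i + 3] for i in range(0, len(password), 3)]
    if len(parts) != 3 or any(p not in SYLLABLES for p in parts):
        return None
    code = sum(SYLLABLES.index(p) * len(SYLLABLES) ** i for i, p in enumerate(parts))
    # Undo the scramble with the modular inverse of MULTIPLIER (Python 3.8+).
    level = ((code - OFFSET) * pow(MULTIPLIER, -1, CODE_SPACE)) % CODE_SPACE
    return level if level < NUM_WORLDS else None


def next_level(level: int, victory_margin: float) -> int:
    """Bigger wins skip further ahead; victory_margin is a made-up 0..1 score."""
    if victory_margin >= 0.9:
        jump = 4
    elif victory_margin >= 0.6:
        jump = 2
    else:
        jump = 1
    return min(level + jump, NUM_WORLDS - 1)


if __name__ == "__main__":
    pw = password_for(42)
    print(pw, level_for(pw))     # the password decodes back to 42
    print(next_level(42, 0.95))  # prints 46
```

Because the scramble is a bijection on the 4,096 possible codes, every world gets a distinct password, and most typos decode to no world at all; a real game might add a checksum so that a mistyped password couldn't land on some other valid level. The same level number could equally serve as the seed for generating that world's terrain, which is the sense in which nothing beyond the code itself needs storing.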

It inevitably all comes down to a frantic final free-for-all between your people and those of your enemy.

Given their previous failures, Bullfrog understandably wasn’t the most confident group when a well-known British games journalist named Bob Wade, who had already played a pre-release version of the game, came by for a visit. For hours, Molyneux remained too insecure to actually ask Wade the all-important question of what he thought of the game. At last, after Wade had joined the gang for “God knows how many” pints at their local, Molyneux worked up the courage to pop the question. Wade replied that it was the best game he’d ever played, and he couldn’t wait to get back to it — prompting Molyneux to think he must have made some sort of mistake, and that under no circumstances should he be allowed to play another minute of it in case his opinion should change. It was Wade and the magazine he was writing for at the time, ACE (Advanced Computer Entertainment), who coined the term “god game” in the glowing review that followed, the first trickle of a deluge of praise from the gaming press in Britain and, soon enough, much of the world.

Bullfrog’s first royalty check for Populous was for a modest £13,000. Their next was for £250,000, prompting a naive Les Edgar to call Electronic Arts about it, sure it was a mistake. It was no mistake; Populous alone reportedly accounted for one-third of EA’s revenue during its first year on the market. That Bullfrog wasn’t getting even bigger checks was a sign only of the extremely unfavorable deal they’d signed with EA from their position of weakness. Populous finally and definitively ended the now 30-year-old Peter Molyneux’s long run of obscurity and failure at everything he attempted. In his words, he went overnight from “urinating in the sink” and “owing more money than I could ever imagine paying back” to “an incredible life” in games. Port after port came out for the next couple of years, each of them becoming a bestseller on its platform. Populous was selected to become one of the launch titles for the Super Nintendo console in Japan, spawning a full-blown fad there that came to encompass comic books, tee-shirts, collectibles, and even a symphony concert. When they visited Japan for the first time on a promotional tour, Molyneux and Les Edgar were treated like… well, appropriately enough, like gods. Populous sold 3 million copies in all according to some reports, an almost inconceivable figure for a game during this period.

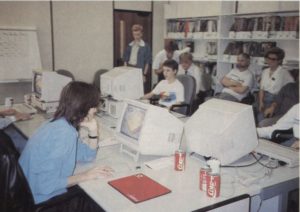

The One magazine and Electronic Arts hosted a tournament to find the best Populous player in Britain.

While a relatively small percentage of Populous players played online, those who did became pioneers of sorts in their own right. Some bulletin-board systems set up matchmaking services to pair up players looking for a game, any time, day or night; the resulting connections sometimes spanned national borders or even oceans. The matchmakers were aided greatly by Bullfrog’s forward-thinking decision to make all versions of Populous compatible with one another in terms of online play. In making it so quick and easy to find an online opponent, these services prefigured the modern world of Internet-enabled online gaming. Molyneux pronounced them “pretty amazing,” and at the time they really were. In 1992, he spoke excitedly of a recent trip to Japan, where he’d seen a town “with 10,000 homes all linked together. You can play games with anybody in the place. It’s enormous, really enormous, and it’s growing.” If only he’d known what online gaming would grow into in the next decade or two…

A youngster named Andrew Reader wound up winning the tournament, only to get trounced in an exhibition match by the master, Peter Molyneux himself. There was talk of televising a follow-up tournament on Sky TV, but it doesn’t appear to have happened.

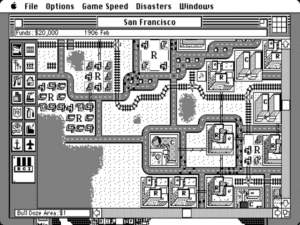

The original Amiga version of Populous had been released all but simultaneously with the Amiga version of SimCity. Press and public alike immediately linked the two games together; AmigaWorld magazine, for instance, went so far as to review them jointly in a single article. Both Will Wright of SimCity fame and Peter Molyneux were repeatedly asked in interviews whether they’d played the other’s game. Wright was polite but, one senses, a little uninterested in Populous, saying he “liked the idea of playing God and having a population follow you,” but “sort of wish they’d gone for a slightly more educational angle.” Molyneux was much more enthusiastic about his American counterpart’s work, repeatedly floating a scheme to somehow link the two games together in more literal fashion for online play. He claimed at one point that Maxis (developers of SimCity) and his own Bullfrog had agreed on a liaison “to go backwards and forwards” between their two companies to work on linking their games. The liaison, he claimed, had “the Populous landscape moving to and from SimCity,” and a finished product would be out sometime in 1992. Like quite a number of the more unbelievable schemes Molyneux has floated over the years, it never happened.

The idea of a linkage between SimCity and Populous, whether taking place online or in the minds of press and public, can seem on the face of it an exceedingly strange one today. How would the online linkage actually work anyway? Would the little medieval warriors from Populous suddenly start attacking SimCity‘s peaceful modern utopias? Or would Wright’s Sims plop themselves down in the middle of Molyneux’s apocalyptic battles and start building stadiums and power plants? These were very different games: Wright’s a noncompetitive, peaceful exercise in urban planning with strong overtones of edutainment; Molyneux’s a zero-sum game of genocidal warfare that aspired to nothing beyond entertainment. Knowing as we do today the future paths of these two designers (ever further in the directions laid down by these, their first significant works) only heightens the seeming dichotomy.

That said, there actually were and are good reasons to think of SimCity and Populous as two sides of the same coin. For us today, the list includes first of all the reasons of simple historical concordance. Each marks the coming-out party of one of the most important game designers of all time, occurring within bare weeks of one another.

But of course the long-term importance of these two designers to their field wasn’t yet evident in 1989; obviously players were responding to something else in associating their games with one another. Once you stripped away their very different surface trappings and personalities, the very similar set of innovations at the heart of each was laid bare. AmigaWorld said it very well in that joint review: “The real joy of these programs is the interlocking relationships. Sure, you’re a creator, but even more a facilitator, influencer, and stage-setter for little computer people who act on your wishes in their own time and fashion.” It’s no coincidence that, just as Peter Molyneux was partly inspired by an ant hill to create Populous, one of Will Wright’s projects of the near future would be the virtual ant farm SimAnt. In creating the first two god games, the two were indeed implementing a very similar core idea, albeit each in his own very different way.

Joel Billings of SSI, the king of American strategy games, had founded his company back in 1979 with the explicit goal of making computerized versions of the board games he loved. SimCity and Populous can be seen as the point when computer strategy games transcended that traditional approach. The real-time nature of these games makes them impossible to conceive of as anything other than computer-based works, while their emergent complexity makes them objects of endless fascination for their designers as much as, if not more than, for their players.

In winning so many awards and entrancing so many players for so long, SimCity and Populous undoubtedly benefited hugely from their sheer novelty. Their flaws stand out more clearly today. With its low-resolution graphics and without the aid of modern niceties like tool tips and graphical overlays, SimCity struggles to find ways to communicate vital information about what your city is really doing and why, making the game into something of an unsatisfying black box unless and until you devote a lot of time and effort to understanding what affects what. Populous has many of the same interface frustrations, along with other problems that feel still more fundamental and intractable, especially if you, like the vast majority of players back in its day, experience it through its single-player Conquest Mode. Clever as they are, the procedurally generated levels combined with the fairly rudimentary artificial intelligence of your computer opponent introduce a lot of infelicities. Eventually you begin to realize that one level is pretty much the same as any other; you just need to execute the same set of strategies and tactics more efficiently to have success at the higher levels.

Both Will Wright and Peter Molyneux are firm adherents to the experimental, boundary-pushing school of game design — an approach that yields innovative games but not necessarily holistically good games every time out. And indeed, throughout his long career each of them has produced at least as many misses as hits, even if we dismiss the complaints of curmudgeons like me and lump SimCity and Populous into the category of the hits. Both designers have often fallen into the trap, if trap it be, of making games that are more interesting for creators and commentators than they are fun for actual players. And certainly both have, like all of us, their own blind spots: in relying so heavily on scientific literature to inform his games, Wright has often produced end results with something of the feel of a textbook, while Molyneux has often lacked the discipline and gravitas to fully deliver on his most grandiose schemes.

But you know what? It really doesn’t matter. We need our innovative experimentalists to blaze new trails, just as we need our more sober, holistically-minded designers to exploit the terrain they discover. SimCity and Populous would be followed by decades of games that built on the possibilities they revealed — many of which I’d frankly prefer to play today over these two original ground-breakers. But, again, that reality doesn’t mean we should celebrate SimCity and Populous one iota less, for both resoundingly pass the test of historical significance. The world of gaming would be a much poorer place without Will Wright and Peter Molyneux and their first living worlds inside a box.

(Sources: The Official Strategy Guide for Populous and Populous II by Laurence Scotford; Master Populous: Blueprints for World Power by Clayton Walnum; Amazing Computing of October 1989; Next Generation of November 1998; PC Review of July 1992; The One of April 1989, September 1989, and May 1991; Retro Gamer 44; AmigaWorld of December 1987, June 1989, and November 1989; The Games Machine of November 1988; ACE of April 1989; the bonus content to the film From Bedrooms to Billions. Archived online sources include features on Peter Molyneux and Bullfrog for Wired Online, GameSpot, and Edge Online. Finally, Molyneux’s postmortem on Populous at the 2011 Game Developers Conference.

Populous is available for purchase from GOG.com.)